Following the lead of Twitter, Facebook may now also prompt users to double-check whether they have actually read the goddamn article before potentially passing on hoaxes to some of their less discerning friends.

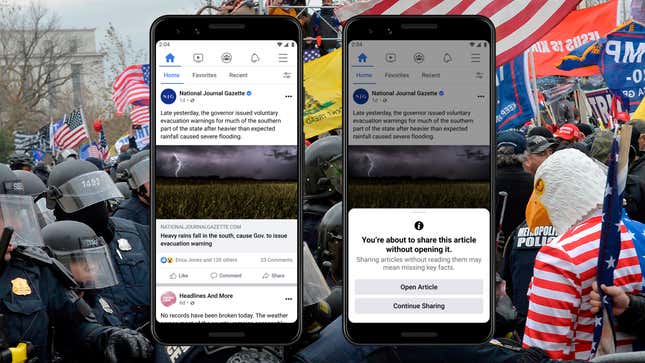

The company’s official Newsroom account tweeted on Monday that if Facebook detects users attempting to share a news article without actually clicking through to open it, it will now prompt them with a popup that says “You’re about to share this article without opening it. Sharing articles without reading them may mean missing key facts.”

This is, at best, a minor speedbump on the disinformation highway, and the odds it will dissuade your most pigheaded relatives from sharing fake posts claiming that the Pope demanded Joe Biden resign or that unnamed French scientists have discovered masks incubate the novel coronavirus seem pretty low. It’s also not at all clear that pushing more people to read the content shared in places like Facebook’s more toxic Groups will do anything to blunt their desire to spread it further. The prompt is also somewhat annoying to users who have actually read articles (or in the case of whiny journalists, actually written them) without Facebook noticing, although one might point out that’s kind of the feature working as intended.

Twitter first rolled out its version of the feature in June 2020, and it said the prompts were actually effective: users who saw one opened the article 40 percent more often, and the share who opened an article before retweeting it rose by 33 percent. Some users, the percentage of which Twitter didn’t disclose, simply chose not to post at all after seeing a prompt. Of course, it’s possible that the prompts will become less effective over time as users adjust their habits to ignore them.

Facebook remains a honeypot for conspiracy theorists and far-right hoaxsters who rely on the site’s algorithms to juice engagement and traffic, and it hosts a sprawling conservative media ecosystem that it has encouraged by loosening fact-checking standards. So we’ll file this little update in the folder for Facebook updates that came years too late to help much, if at all.