Introduction

An event polling table (S_NQ_EPT) is a way to identify for the Oracle BI Server that one or more physical tables have been updated. Each row that is added to an event table highlights a single update on a single table. The cache system reads from this table, extracts the information from these rows, and flushes the entries based on those information.

After this process is in place and the event table is configured in the Oracle BI repository, cache flushing occurs by itself. Old cache entries are purged automatically at the specified polling intervals, given that ETL is writing records to this polling table correctly. In this article we will see the process of setting up OBIEE (11g) cache purge. This does NOT include the ETL steps need to be taken to load the polling table. That has to be done by the ETL development team.

Polling Table Structure

We can set up an event polling table in each physical database (Development, UAT, PROD etc.) to monitor changes in the database. The event table should be updated every time a table in the database changes – should be taken care by the ETL process. This also depends on the fact that which tables are supposed to be cache maintained (or purge needs to be done). Other tables that do NOT fall in this category are not needed to be present in the rows of this Event Polling Table. The event table should have the structure shown below. The column names for the event table are only suggested; one can use any other logical naming convention. However, the order of the columns has to be the same in the physical layer of the repository (then by alphabetic ascendant order).

| Column Name (by alphabetic ascendant order) |

Data Type | Null-able | Description | Comments |

| Catalog_Name | VARCHAR | Yes | The name of the catalog where the physical table that was updated resides. | Populate the Catalog_Name column only if the event table does not reside in the same database as the physical tables that were updated. Otherwise, set it to the NULL. |

| DB_Name | VARCHAR | Yes | The name of the database where the physical table that was updated resides. | Populate the DB_Name column only if the event table does not reside in the same database as the physical tables that were updated. Otherwise, set it to the NULL. |

| Other | VARCHAR | Yes | Reserved for future modifications. | This column must be set to a NULL value. |

| Schema_Name | VARCHAR | Yes | The name of the schema where the physical table that was updated resides. | Populate the Schema_Name column only if the event table does not reside in the same database as the physical tables being updated. Otherwise, set it to the NULL. |

| Table_Name | VARCHAR | No | The name of the physical table that was updated. | The name has to match the name defined for the table in the Physical layer of the Administration Tool. |

| Update_Time | DATE or TIMESTAMP | No | The time when the update to the event table has happened. This is a unique valued key that changes for each row added to the table. | current timestamp as the default value. |

| Update_Type | INT | No | Specify a value of 1 in the update script to indicate a standard update. | Other values are reserved for future use. |

Event Polling Table Set Up

Create User in the Database:

CREATE user OBIEE_USER IDENTIFIED BY OBIEE_USER;GRANT connect, resource TO OBIEE_USER;

Creating Event polling table:

- Go to the path “<MiddlewareHome>\instances\instance1\bifoundation\OracleBIServerComponent\coreapplication_obis1\schema”.

- Open SAEPT.Oracle file and copy the DDL. Create the table under the BIPlatform schema by running the DDL in the database.

Cache Purging Using the Event Polling Table

To make sure that the Oracle BI Server has write access to the event polling table but not to any other tables in a database, perform the following:

- Create a separate physical database in the Physical layer of the RPD with a privileged connection pool.

- Assign a user to that particular connection pool that has delete privileges.

- Populate the privileged database with the event table.

Importing the physical table:

- Import the table S_NQ_EPT into the Repository from the Database where we have created the table.

- Open the RPD in offline Mode ,Go to File -> import metadata and import the table (S_NQ_EPT) into the Physical Layer.

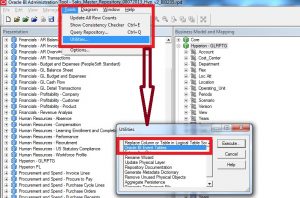

- Go to Tools -> Utilities and select the option Oracle BI Event Tables from the list of options as shown below. Click on Execute.

Guide to Oracle Cloud: 5 Steps to Ensure a Successful Move to the Cloud

Explore key considerations, integrating the cloud with legacy applications and challenges of current cloud implementations.

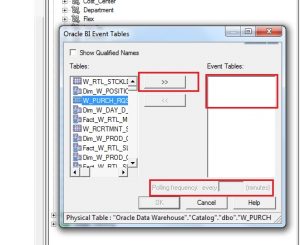

- Select the S_NQ_EPT table as the Event Polling table and select >> button. Set the Polling frequency to some value more than 10 minutes. Ideally it should be 12-24 hours because generally tables are loaded once or twice everyday at most. Click OK.

- After registering this table as an Oracle BI Server event table, one can not make this table cache-able, which is self explanatory.

Validate Cache Flushing

There are a number of ways to implement Event Polling Table loading (update or insert or both) once the daily job of ETL is complete.

1. Editing every Informatica Workflow for each and every warehouse target table to include post-ETL SQL insert statements.

2. Configuration of new tasks to handle inserts once the main ETL load is finished.

3. Triggers in the Oracle database for inserts.

Option 3 is the easiest to configure and should be selected for easy maintenance as well. Option 1 is most tedious as it will impact hundreds of workflows. Option 2 requires a lot more configuration than option 3.

For option 3 do the following:

1. A new table is created to hold the names of the target tables in the Warehouse that need to be flushed by this Event Polling mechanism:

CREATE TABLE S_NQ_EPT_TAB (TAB_NAME VARCHAR2 (200 BYTE) NOT NULL ENABLE);

2. Then, add a trigger to the metadata table W_ETL_REFRESH_DT in DAC. This table’s refresh date gets modified once the ETL completes. The trigger will fire every time the table is modified and if the refresh date is not null then it will insert a new row into the polling table:

CREATE OR REPLACE TRIGGER SCHEMA.S_NQ_EPT_NEWROW

AFTER UPDATE ON DACINFA.W_ETL_REFRESH_DT

FOR EACH ROW

BEGIN

IF :NEW.LAST_REFRESH_DT IS NOT NULL THEN

MERGE INTO SCHEMA.S_NQ_EPT P

USING (SELECT TAB_NAME FROM SCHEMA.S_NQ_EPT_TAB WHERE TAB_NAME = :NEW.TABLE_NAME ) T

ON (P.TABLE_NAME = T.TAB_NAME)

WHEN NOT MATCHED THEN INSERT

(P.UPDATE_TYPE, P.UPDATE_TS, P.DB_NAME, P.CATALOG_NAME,

P.SCHEMA_NAME, P.TABLE_NAME, P.OTHER_RESERVED)

VALUES (1, SYSTIMESTAMP, ‘Oracle Data Warehouse’, ‘Catalog’, ‘dbo’, T.TAB_NAME, NULL);

END IF;

END;

Testing Cache Purging

Set Event Polling Frequency to a small value like 15 minutes. Now to validate the mechanism by filling the values into cache Manager, create a simple report with two or three columns in the OBIEE Analytics Answers. Run the Report and cache manager will store the query run in the back end. To purge this cache do a manual insert for testing purpose:

INSERT INTO S_NQ_EPT (update_type,update_ts,db_name,catalog_name,schema_name,table_name,other_reserved)

VALUES (1, SYSTIMESTAMP, ‘Oracle Data Warehouse’, ‘Catalog’, ‘dbo’, ‘Table Name which is used by the query’, NULL);

Wait the polling interval frequency. The cache entry from the cache manager WILL BE deleted. Once done confirm this from the NQQuery.log file.

Point to Note

The event table is populated by inserting rows into it each time that a table is updated – so by ETL. The process has been explained above. If the table is not updated properly after each load Oracle BI server would NOT take any action on deleting the cache as it would think that the event polling table has been updated properly and no action needs to be taken. So correct update made to the event polling table is the defining factor for the mechanism to work.

Troubleshooting/Debugging

One can find the NQS server and NQS query logs in the following location:

ORACLE_INSTANCE/diagnostics/logs/OracleBIServerComponent/coreapplication_obisn