In addition to helping police arrest the wrong person or monitor how often you visit the Gap, facial recognition is increasingly used by companies as a routine security procedure: it’s a way to unlock your phone or log into social media, for example. This practice comes with an exchange of privacy for the promise of comfort and security but, according to a recent study, that promise is basically bullshit.

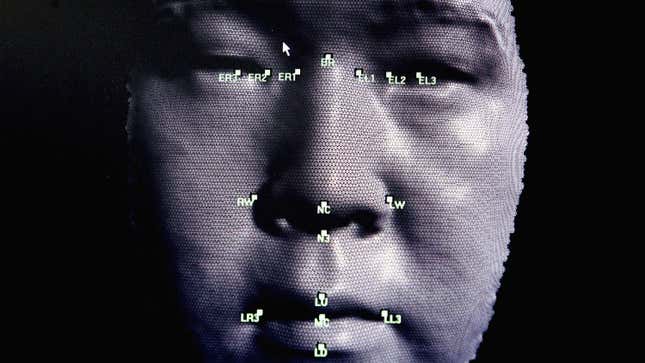

Indeed, computer scientists at Tel Aviv University in Israel say they have discovered a way to bypass a large percentage of facial recognition systems by basically faking your face. The team calls this method the “master face” (like a “master key,” harhar), which uses artificial intelligence technologies to create a facial template—one that can consistently juke and unlock identity verification systems.

“Our results imply that face-based authentication is extremely vulnerable, even if there is no information on the target identity,” researchers write in their study. “In order to provide a more secure solution for face recognition systems, anti-spoofing methods are usually applied. Our method might be combined with additional existing methods to bypass such defenses,” they add.

According to the study, the vulnerability being exploited here is the fact that facial recognition systems use broad sets of markers to identify specific individuals. By creating facial templates that match many of those markers, a sort of omni-face can be created that is capable of fooling a high percentage of security systems. In essence, the attack is successful because it generates “faces that are similar to a large portion of the population.”

This face-of-all-faces is created by inputting a specific algorithm into the StyleGAN, a widely used “generative model” of artificial intelligence tech that creates digital images of human faces that aren’t real. The team tested their face imprint on a large, open-source repository of 13,000 facial images operated by the University of Massachusetts and claim that it could unlock “more than 20% of the identities” within the database. Other tests showed even higher rates of success.

Furthermore, the researchers write that the face construct could hypothetically be paired with deepfake technologies, which will “animate” it, thus fooling “liveness detection methods” that are designed to assess whether a subject is living or not.

The real-world applications of this zany hack are a little hard to imagine—though you can still imagine them: a malcontented spy armed with this tech covertly swipes your phone, then applies the omni-face-thing to bypass device security and nab all your data. Far fetched? Yes. Still weirdly plausible in a Mr. Robot sort of way? Also yes. Meanwhile, online accounts that use face recognition for logins would be even more vulnerable.

While the jury’s probably still out over the veracity of all of this study’s claims, you’d be safe to add it to the growing body of literature that suggests facial recognition is bad news for everybody except cops and large corporations. While vendors swear by their tech, multiple studies have shown that most of these products are simply not ready for prime time. The fact that these tools can be so easily fooled and don’t even work half the time might just be one more reason to ditch them altogether.