Steer Between Debt And Delay With Platform Engineering

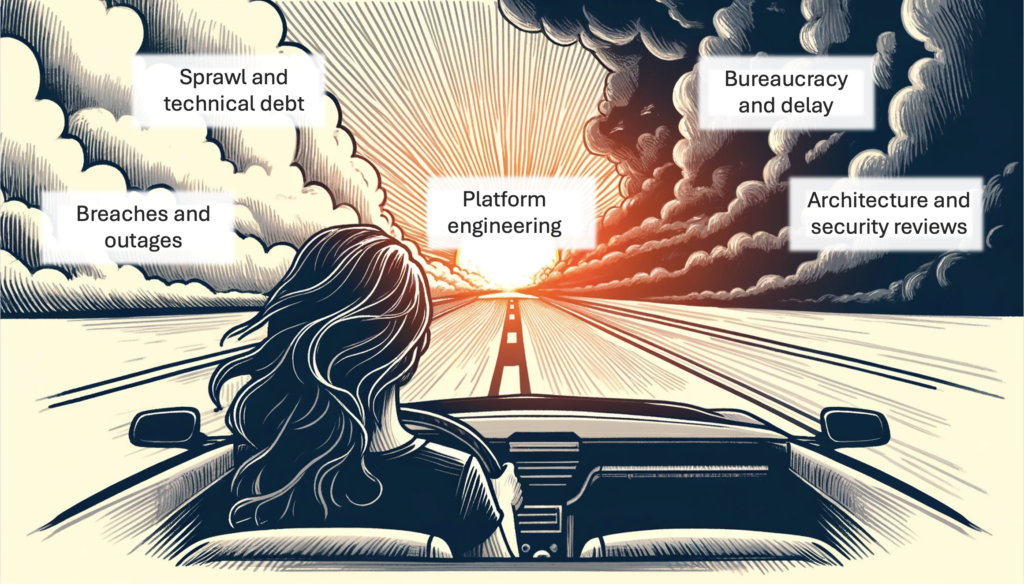

In Greek mythology, Scylla and Charybdis represented the problem of navigating between two hazards: a deadly monster on one side, a fearsome whirlpool on the other. Modern digital and IT professionals encounter a similar dilemma: Technical debt and sprawl make IT portfolios insecure and unsustainable, but the processes and controls intended to minimize technical debt and sprawl are themselves too often value-destroying.

Often based on lengthy checklists, architecture and design reviews have been the bane of application and product teams for many years: endless inquiries and forms to fill out, ending too often with seemingly arbitrary decisions that call for significant changes to architecture and design and delay the delivery of value to customers and end users. The result has been years of low-intensity conflict in IT organizations and far too much waste and rework.

How did we get here?

In the beginning, there was the mainframe. This was a highly constrained platform. You could have any kind of database as long as it was Db2 (or IMS, for you real old-timers out there). Authentication, authorization, transactional integrity, scaling, scheduling, logging, charge-back, data persistence — many services were available, most of the hard questions were already settled, and developers just sat down and wrote their COBOL applications.

Then, distributed computing came. Every project was custom-engineered. I entered the workforce at this time, and one of my earlier positions was with Andersen Consulting (later Accenture), where we were building PeopleSoft ERP solutions. Every project had a distinct technical design. Would it be Sun or Compaq or HP servers? HP-UX or Solaris? EMC or Hitachi storage? Oracle or Db2? Sales reps for the vendors would get involved, and the decision-making was messy.

Then I moved into the enterprise world, where we were just as often building our own apps. All of the infrastructure decisions still had to be made — the infrastructure group might have opinions, but if your project was big enough or the CTO liked you, you could pretty much buy whatever stack you wanted — and on top of that, you had to solve many more problems. What was your security approach? Create your own little list of users and passwords in your app (very common back in the day)? What about backup? Monitoring and logging? Resilience? Scaling? Firewall? You had to figure all of that out.

So with the custom engineering of technical stacks for every distributed application, fundamental architecture wheels needed to be reinvented every single time. Add in project (and, later, product) team autonomy, and you had a recipe for tremendous variation and sprawl. (Much is said about the differences between project and product management, but speaking from experience, both styles often share a fierce autonomy and disdain for centralized standards.)

Enter enterprise architecture (EA) and its detractors. While EA pros often hear cries of “ivory tower,” “out of touch,” and so forth, such criticisms overlook the fundamental point: Enterprises put architecture in place to deliver business value, such as the value of controlling the risks that emerge from technical debt and sprawl — security breaches, outages, unsustainable cost structures, and so forth. And so the EA team started to try to control the morass that distributed computing had gotten itself into through standardizing product choices (Oracle and SQL Server are OK, but let’s sunset Informix) and reviewing design choices submitted by project (later product) teams.

The EA reviews started to encompass a wider and wider array of questions. Lengthy intake forms and checklists became typical, covering everything from application design patterns to persistence approaches to monitoring to backup and more. The notorious “Architecture Review Board” became a requirement for all projects. Review cycles became more protracted and painful, and more than a few business organizations got fed up and just went shadow, at least until their shadow systems failed, at which point central IT would be pressured to take over the mess. Agile teams advocating for autonomy fought the EA team to a standstill, but neither side could “win” because both were and are needed.

The solution started to emerge from the agile and DevOps communities themselves. A key moment was the IT Revolution Press publication of “Team Topologies” by Matthew Skelton and Manuel Pais (disclosure: I was a developmental reviewer on it). Their discussion of team cognitive load, and recognition of the need for platform teams as well as stream-aligned teams, was a watershed moment.

What is a platform? Lately, I simply define it as a product that supports other products. In the context of digital systems, it represents a standardized offering “marketed” to teams that are creating products for end consumers, whether in the open market or internal to a large organization.

Platforms reduce cognitive load through standardization and automation. They represent “paved roads” enabling delivery at speed, compared to the “gravel roads” of building and securing your own stack from scratch. They are opinionated and reduce your choices, just like the mainframe. But with a budget code, your developers can be developing software with confidence very quickly, and guess what? In a properly executed platform strategy, the major architecture and security checks have already happened. That 50-item checklist shrinks by 90%. (There may still be concerns about master data management and a few other topics.)

I am talking to many EA organizations that are aligning closely with the platform engineering team and ensuring that as many as possible of the traditional EA checks are either baked into the platform architecture or executed as part of automated QA in the associated DevOps pipeline (I believe that every major platform should also include the automated mechanisms by which code is developed, built, and deployed). Automated testing, static and dynamic analysis, SBOM checks, and more can all be operationalized in this way.

There are other benefits to focusing on fewer platforms: smoother transitions of staff between teams, better leverage from concentrating on fewer vendors, a smaller attack surface, and more effective incident response because your staff has deeper knowledge of the chosen platforms.

There is no free lunch. A platform constrains your options. We still know how to build stacks from scratch, and we’ll continue to do that in emergent areas such as generative AI. But sooner or later, building any stack becomes “undifferentiated heavy lifting,” with at best an indirect connection to real end consumer value, and we’ll platformize it.

It’s been a long journey, from the mainframe through the bewildering variety of distributed options and now back to something that again is more standardized. After all, the mainframe was and is nothing more than the original opinionated platform. At least nowadays, you’ll have a few more choices.

For more information, see my report, Accelerate With Platform Engineering.