Today we're taking a deep dive into Intel's XeSS technology to see whether it's worth using on Nvidia and AMD hardware. Upscaling technologies are a hot battleground right now and continue to form the cornerstone of GPU vendors' feature stacks, with Intel now throwing their hat into the ring with XeSS to compete directly with Nvidia's DLSS and AMD's FSR.

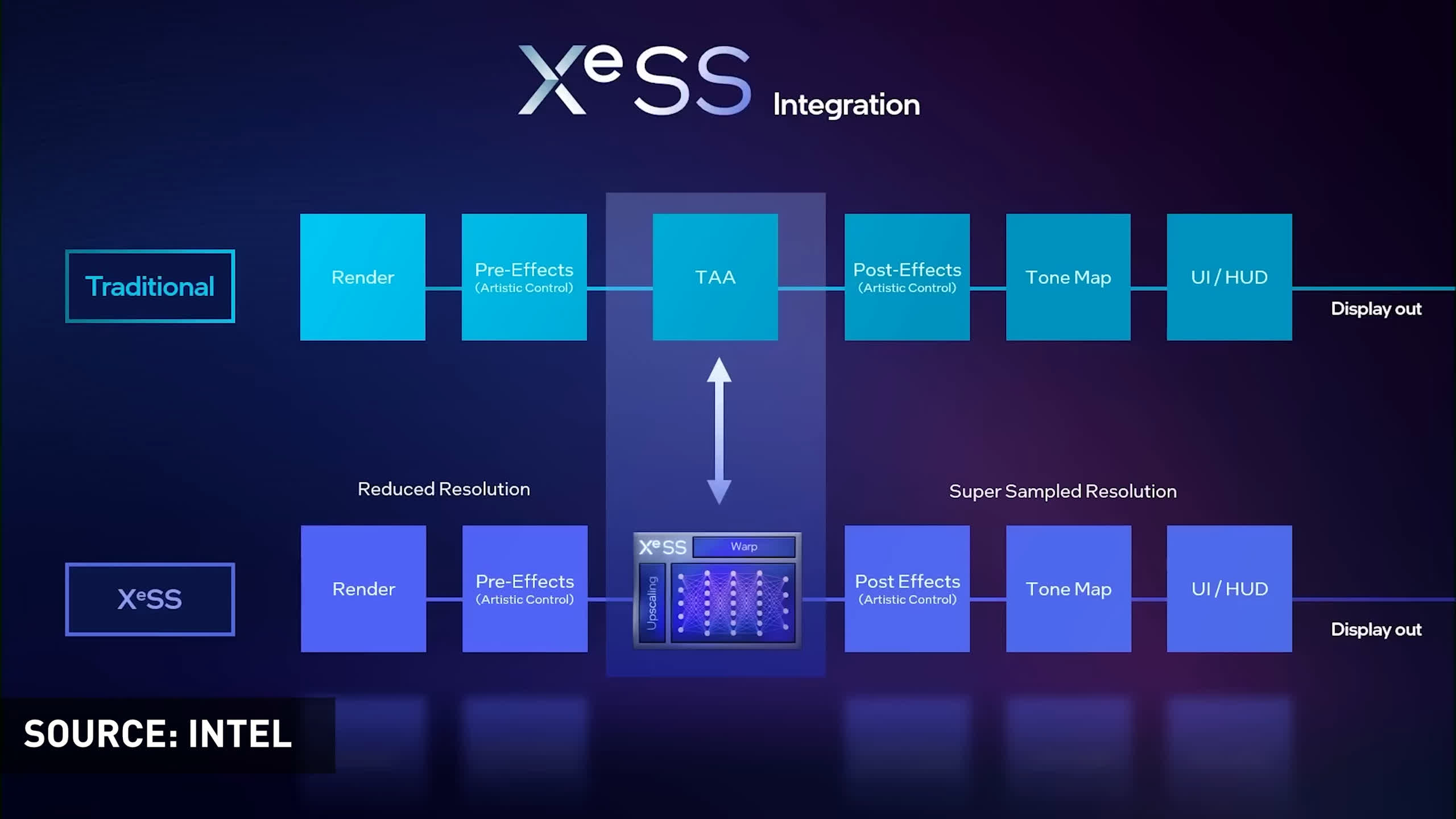

XeSS, or Xe Super Sampling, is similar in principle to DLSS and FSR. It's a temporal upscaling algorithm that is designed to run games at a lower render resolution before upscaling them to your display's native resolution, improving performance with the goal of only a minimal loss in quality.

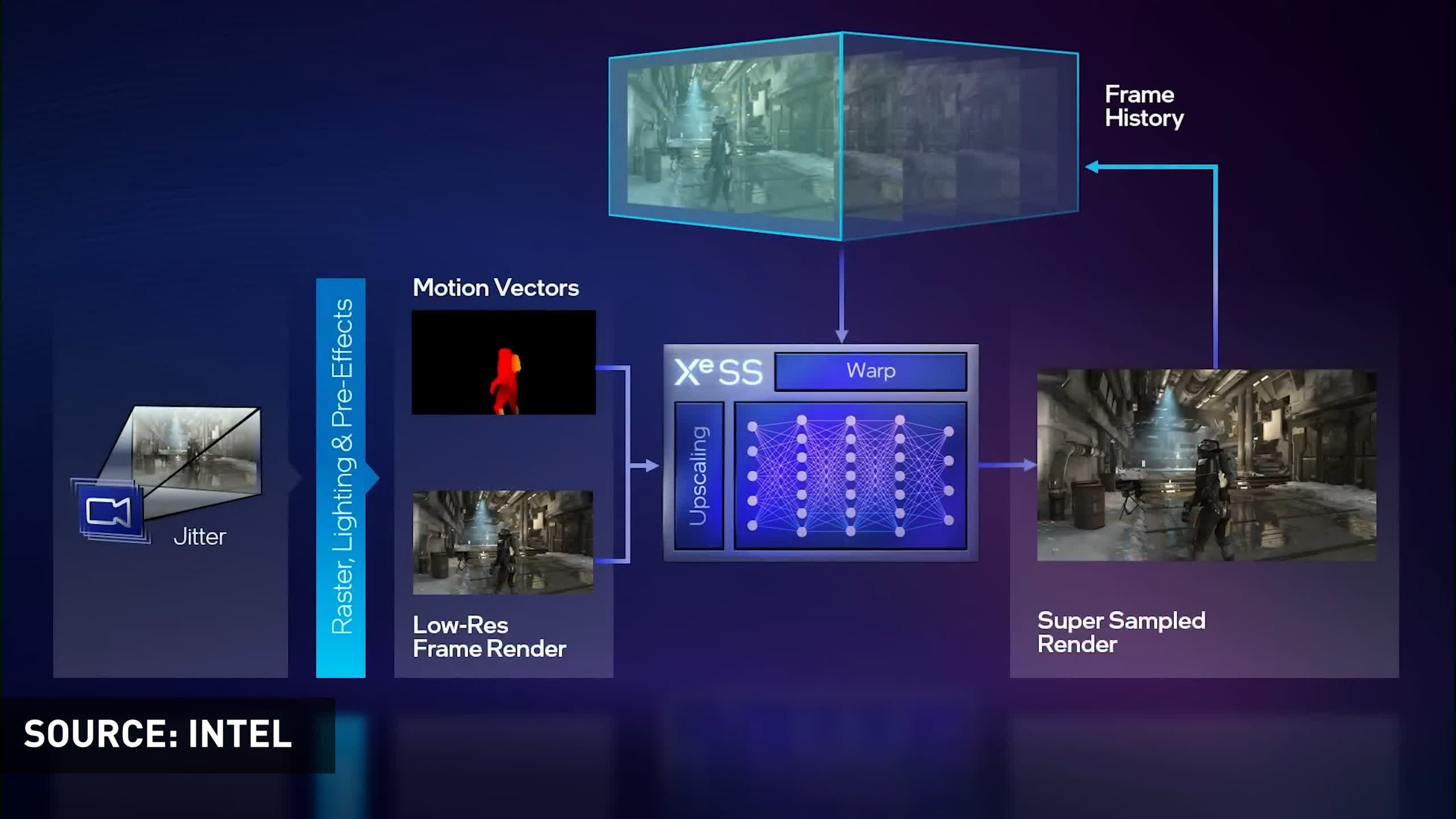

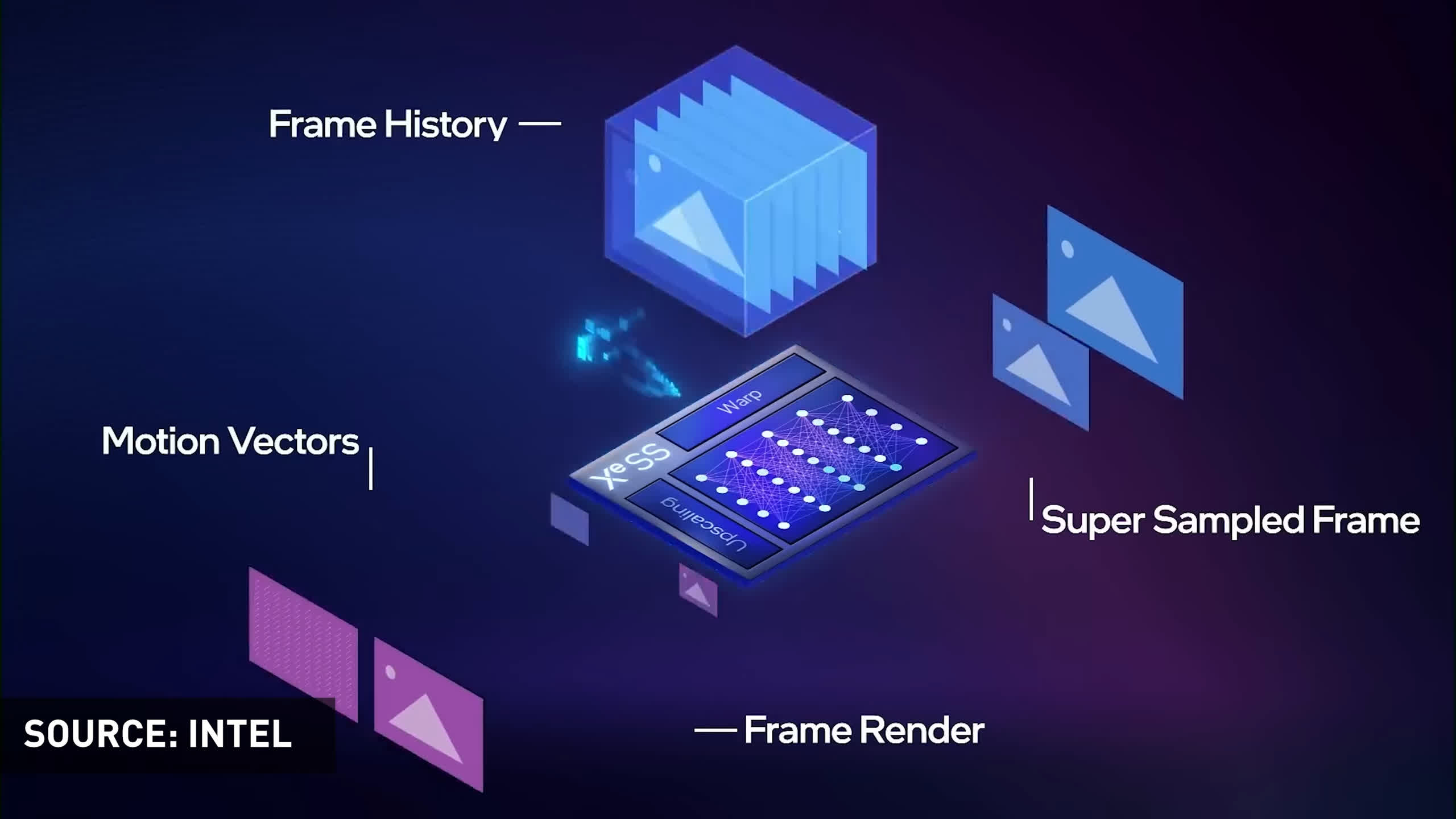

The key word here is "temporal": XeSS uses data from the current frame as well as past frames to reconstruct the image, which gives the algorithm more information to work with at the cost of needing to be integrated into the game engine itself.
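
To make the temporal idea a little more concrete, here is a minimal sketch of history accumulation, assuming a simple exponential blend between the accumulated result and the current frame. This is not Intel's algorithm: the function name and the `blend` weight are illustrative assumptions, and a real temporal upscaler also has to line up past frames with the current one using data supplied by the game engine, which is why engine integration is needed.

```python
def accumulate(history, current, blend=0.1):
    """Blend the current frame's samples into the accumulated history.

    history, current: equal-length lists of pixel intensities (floats).
    blend: assumed weight given to the new frame (hypothetical value).
    """
    if len(history) != len(current):
        raise ValueError("frames must have the same number of pixels")
    return [(1.0 - blend) * h + blend * c for h, c in zip(history, current)]


# In a static scene the accumulated image converges toward the true values.
frame = [1.0, 0.5, 0.0]
history = [0.0, 0.0, 0.0]
for _ in range(50):
    history = accumulate(history, frame)
```

With more frames contributing to each pixel, the reconstruction has more samples to draw on than a single low resolution frame could provide.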

The way XeSS works is more similar to DLSS than FSR as Intel claims it's using AI to enhance XeSS' reconstruction abilities. XeSS uses what Intel says is a "neural network trained to deliver high performance and exceptional quality." However, unlike DLSS, which is designed to run its neural network on Nvidia's Tensor cores only, Intel is not locking down XeSS to Arc GPU hardware. Instead, XeSS features multiple components and pipelines that allow it to run on Intel GPUs as well as GPUs from AMD and Nvidia.

On Intel GPUs, XeSS is said to boost performance and possibly image quality through optimizations for Arc's XMX hardware block; on other GPUs the tech uses a fallback to DP4a and other instructions. But the goal is basically to offer an open AI-based temporal reconstruction algorithm that works on all GPUs, a direct competitor to FSR's non-AI-based approach that also supports all GPU hardware.
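
For readers unfamiliar with DP4a: it is an instruction that computes the dot product of four packed 8-bit integers and adds the result to a 32-bit accumulator. The sketch below emulates that arithmetic in plain Python purely for illustration. The function name is hypothetical, it assumes signed 8-bit inputs and a wrapping accumulator, and it says nothing about how XeSS itself uses the instruction.

```python
def dp4a(a, b, acc=0):
    """Emulate a signed DP4a: acc + sum(a[i] * b[i]) over four int8 lanes."""
    if len(a) != 4 or len(b) != 4:
        raise ValueError("DP4a operates on exactly four 8-bit lanes")
    for v in (*a, *b):
        if not -128 <= v <= 127:
            raise ValueError("lane value out of signed 8-bit range")
    total = acc + sum(x * y for x, y in zip(a, b))
    # Wrap to a signed 32-bit accumulator (an assumption of this sketch).
    return (total + 2**31) % 2**32 - 2**31


result = dp4a([1, 2, 3, 4], [5, 6, 7, 8], acc=10)
```

The appeal for neural network inference is that four low precision multiply-adds are done in one step, which is the kind of work a fallback path needs to do quickly when dedicated matrix hardware isn't available.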

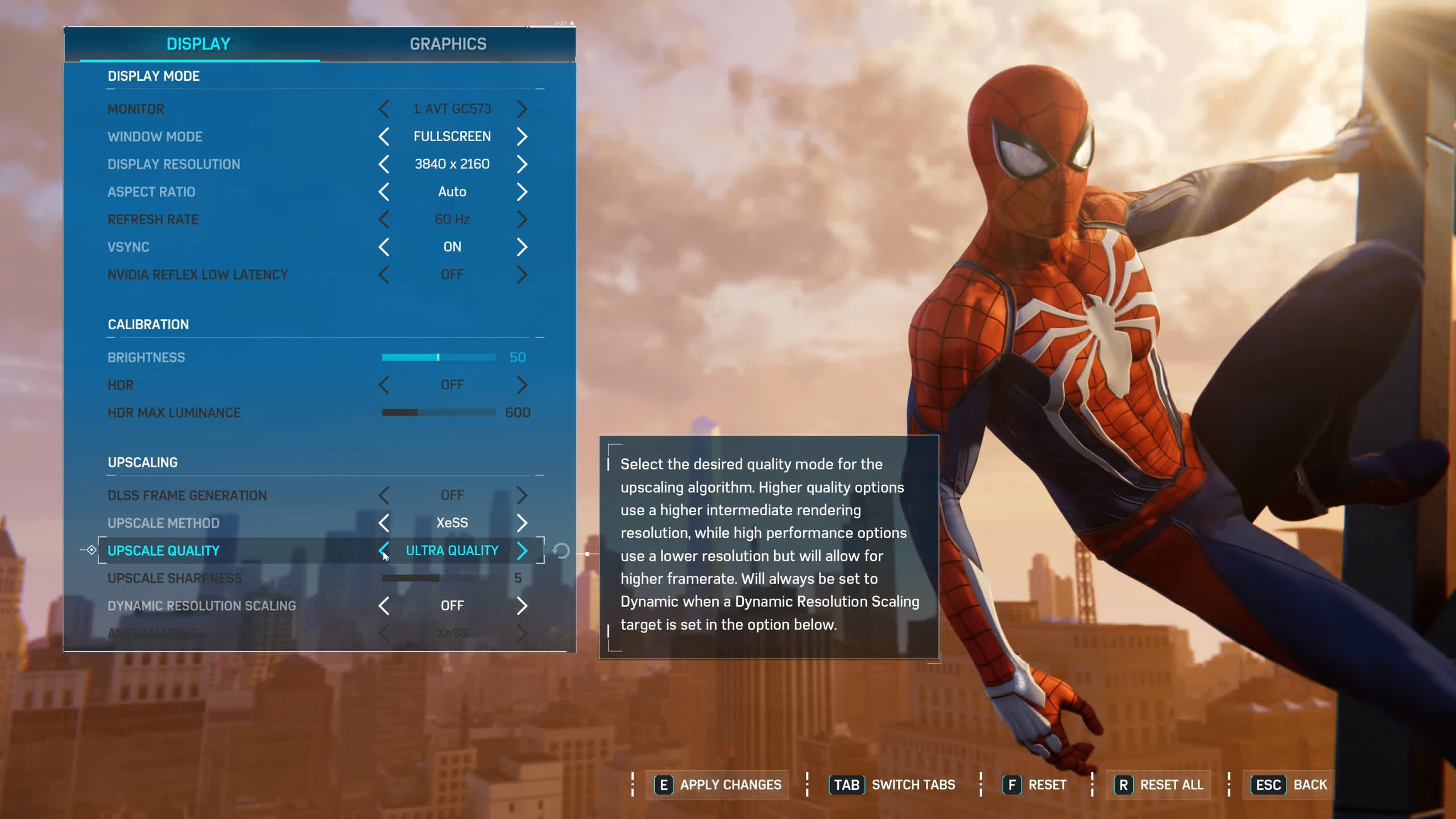

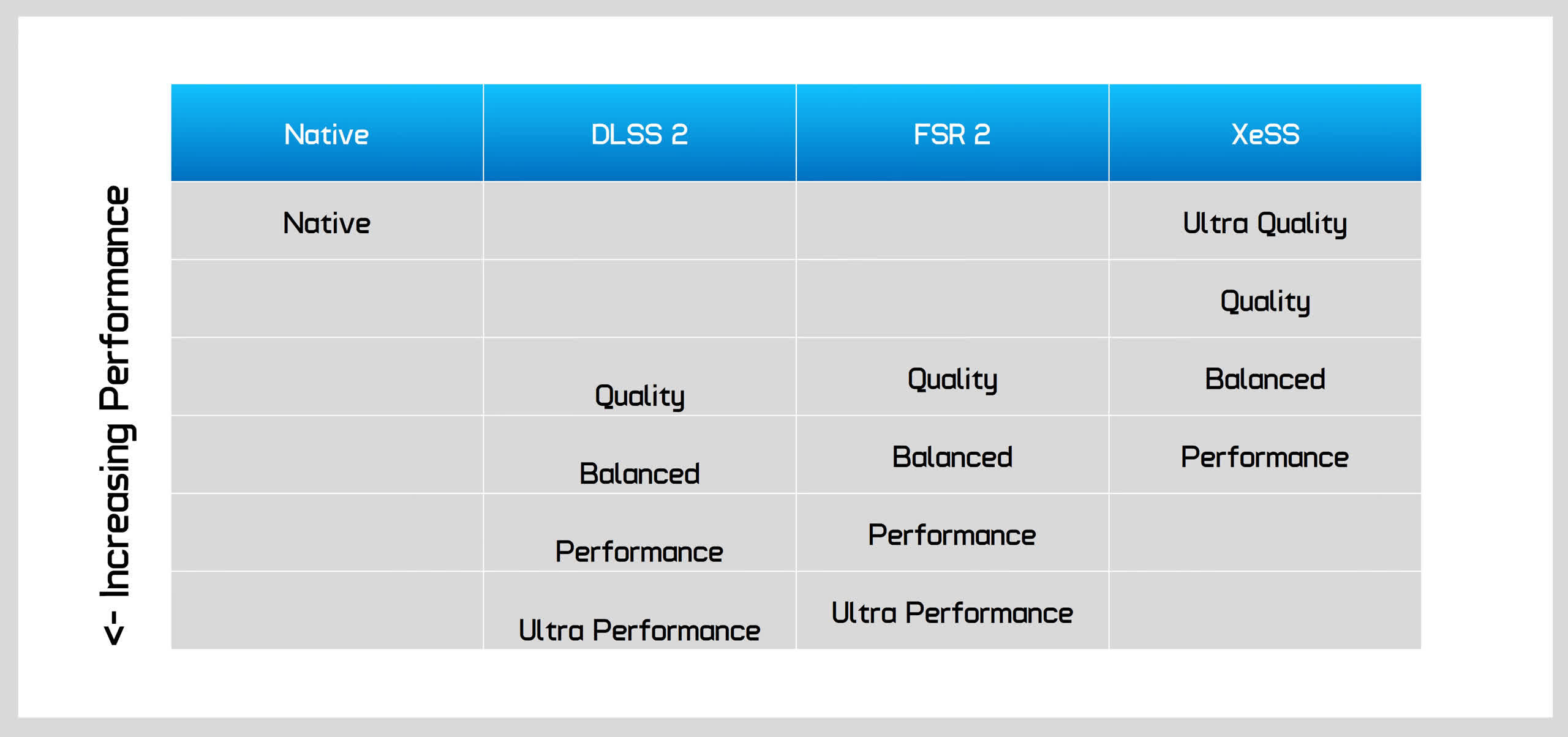

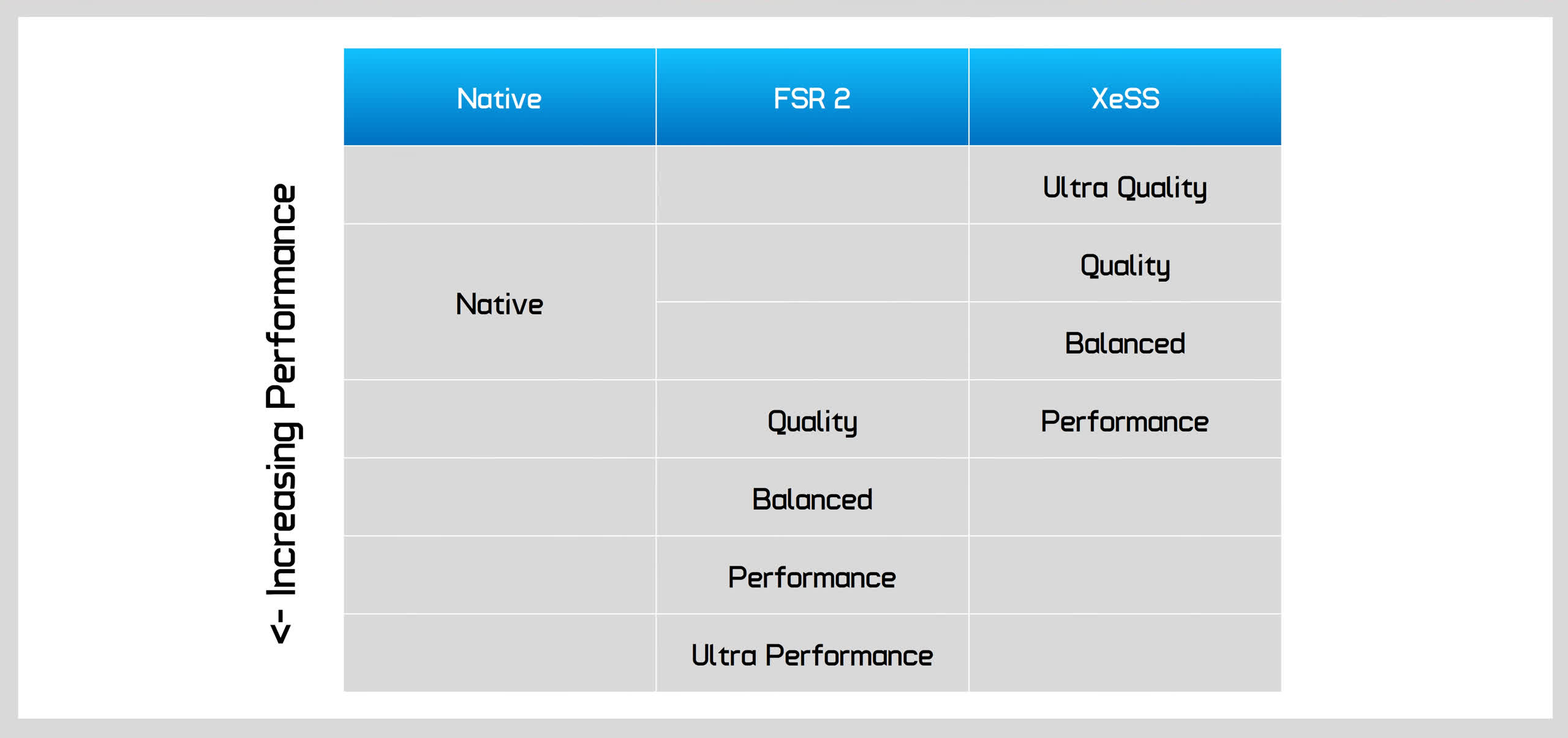

XeSS is integrated into games just like DLSS and FSR, and it offers similar quality options that conform to the current 'standard' the other vendors are using. There's a Quality mode using a 1.5x scale factor, a Balanced mode at 1.7x scaling, and a Performance mode at 2.0x. This means that when targeting a final 4K resolution, XeSS, like DLSS and FSR, will actually render the game at 1080p using the Performance setting. In addition to this, Intel offers an Ultra Quality mode with a 1.3x scale factor, something not typically seen from DLSS and FSR implementations. Intel have also discussed an Ultra Performance mode option, though this wasn't available in the games we tested.
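
The scale factors apply to each axis, which is why a 2.0x factor turns a 4K output into a 1080p render. A quick sketch of that arithmetic is below. The function name and the rounding to the nearest pixel are assumptions for illustration; a real integration may round differently.

```python
# Per-axis scale factors quoted above for each XeSS mode.
XESS_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w, out_h, scale):
    """Return the internal render size for a given output size and scale."""
    return round(out_w / scale), round(out_h / scale)


for mode, scale in XESS_SCALE.items():
    w, h = render_resolution(3840, 2160, scale)
    print(f"{mode}: {w}x{h}")
```

Because both axes shrink, the number of rendered pixels falls with the square of the scale factor, so Performance mode renders a quarter of the pixels of native 4K.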

Test Conditions

For today's testing we first want to explore how XeSS performs. This will set the baseline for visual comparisons, so we can match up the relevant XeSS setting to DLSS and FSR, all providing the same performance boost. For this article, we wanted to focus on GPU hardware gamers might actually own, in other words Nvidia and AMD GPUs, before looking at Intel hardware in a future feature.

There's already so much to go over just looking at XeSS on Nvidia and AMD that any potential improvements specific to Intel really should be left to its own investigation.

All testing today was conducted on a Ryzen 9 5950X test system with 32GB of DDR4-3200 memory. Later in the visual quality section you'll see 4K captures taken on a GeForce RTX 3080 to ensure consistent 60 FPS across all of the samples, but for the performance section we wanted to use hardware that's more limited and benefits the most from upscaling technologies at multiple resolutions. After all, we can't benchmark a wide variety of graphics cards in these sorts of articles as testing even one GPU at three resolutions with up to 11 quality settings is quite time consuming.

So we've chosen to use the GeForce RTX 3060 to represent Team Nvidia, and the Radeon RX 6650 XT on Team AMD. Both are current generation mid-range mainstream options. Latest drivers and game versions were used, so let's get benchmarking.

Hitman 3 Performance

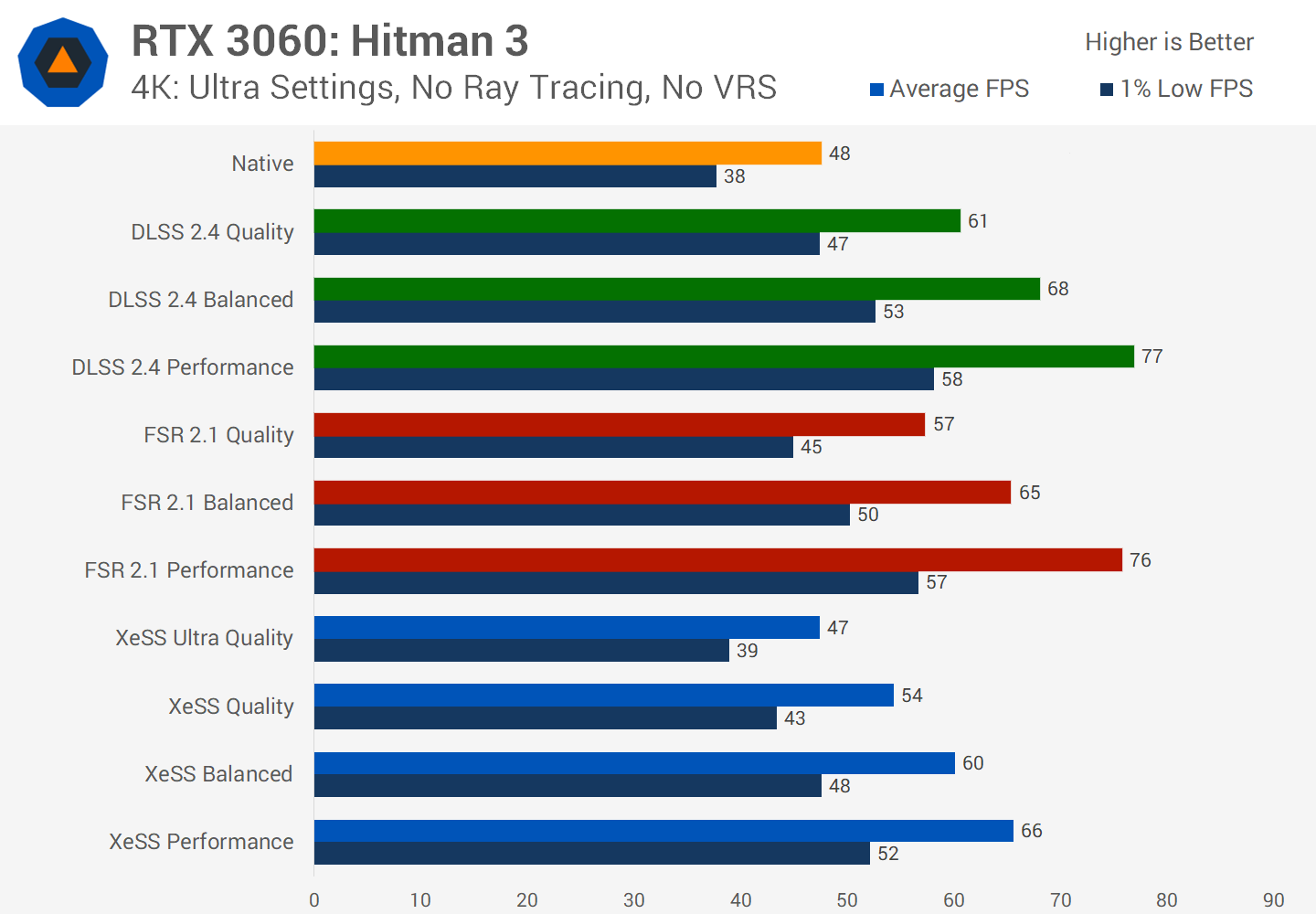

Let's start off with Hitman 3 using ultra settings at 4K but no ray tracing on the RTX 3060. Already the results are quite interesting. Intel's XeSS Ultra Quality mode offers basically the same performance as native rendering, despite using a 1.3x scale factor. It seems that the advantage of rendering at a lower resolution is eaten up entirely by the overhead of the XeSS upscaler at this resolution and quality setting.
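
As a rough back-of-the-envelope check, we can estimate how expensive the upscaling pass would need to be to cancel out the lower render resolution. The sketch below assumes render time is proportional to pixel count, which is a simplification since real games rarely scale that cleanly, and the function names are hypothetical.

```python
def pixel_fraction(scale):
    """Fraction of native pixels rendered at a given per-axis scale factor."""
    return 1.0 / (scale * scale)


def break_even_overhead(scale):
    """Upscaler cost, as a fraction of native frame time, at which the
    upscaled frame takes exactly as long as the native frame.

    Assumes render time is proportional to pixel count (a simplification).
    """
    return 1.0 - pixel_fraction(scale)


ultra_quality = break_even_overhead(1.3)
print(f"Break-even overhead at 1.3x: {ultra_quality:.0%} of native frame time")
```

Under that assumption, a 1.3x scale factor renders about 59% of the native pixel count, so an upscaler that brings performance back to native would be costing something in the region of 41% of the native frame time. Treat this as an upper-end illustration rather than a measurement.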

The next step down in quality is also interesting. XeSS Quality runs slower than both DLSS 2 Quality and FSR 2 Quality. On the DLSS side, it's actually the XeSS Balanced mode that matches DLSS Quality in performance, while on the FSR side, FSR Quality sits between XeSS Quality and Balanced.

Then for the XeSS Performance mode, we only see a performance uplift equivalent to DLSS or FSR Balanced. XeSS Performance provided a 38% uplift over native rendering, whereas DLSS Performance gave a 60% uplift, and FSR Performance a 58% uplift.
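
It can help to translate FPS uplift into frame time, since that's what the GPU is actually saving. The sketch below is a small helper for that conversion using the uplift figures quoted above; the function names are our own for illustration.

```python
def uplift_percent(native_fps, upscaled_fps):
    """Percentage FPS gain of the upscaled result over native."""
    return (upscaled_fps / native_fps - 1.0) * 100.0


def frame_time_saving_percent(uplift):
    """Percentage reduction in frame time implied by an FPS uplift."""
    return (1.0 - 1.0 / (1.0 + uplift / 100.0)) * 100.0


# Uplifts quoted above for Hitman 3 at 4K on the RTX 3060 (Performance modes).
for name, uplift in [("XeSS", 38), ("FSR", 58), ("DLSS", 60)]:
    saving = frame_time_saving_percent(uplift)
    print(f"{name} Performance: {saving:.1f}% less time per frame")
```

A 60% FPS uplift, for example, corresponds to each frame taking 37.5% less time to render than at native.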

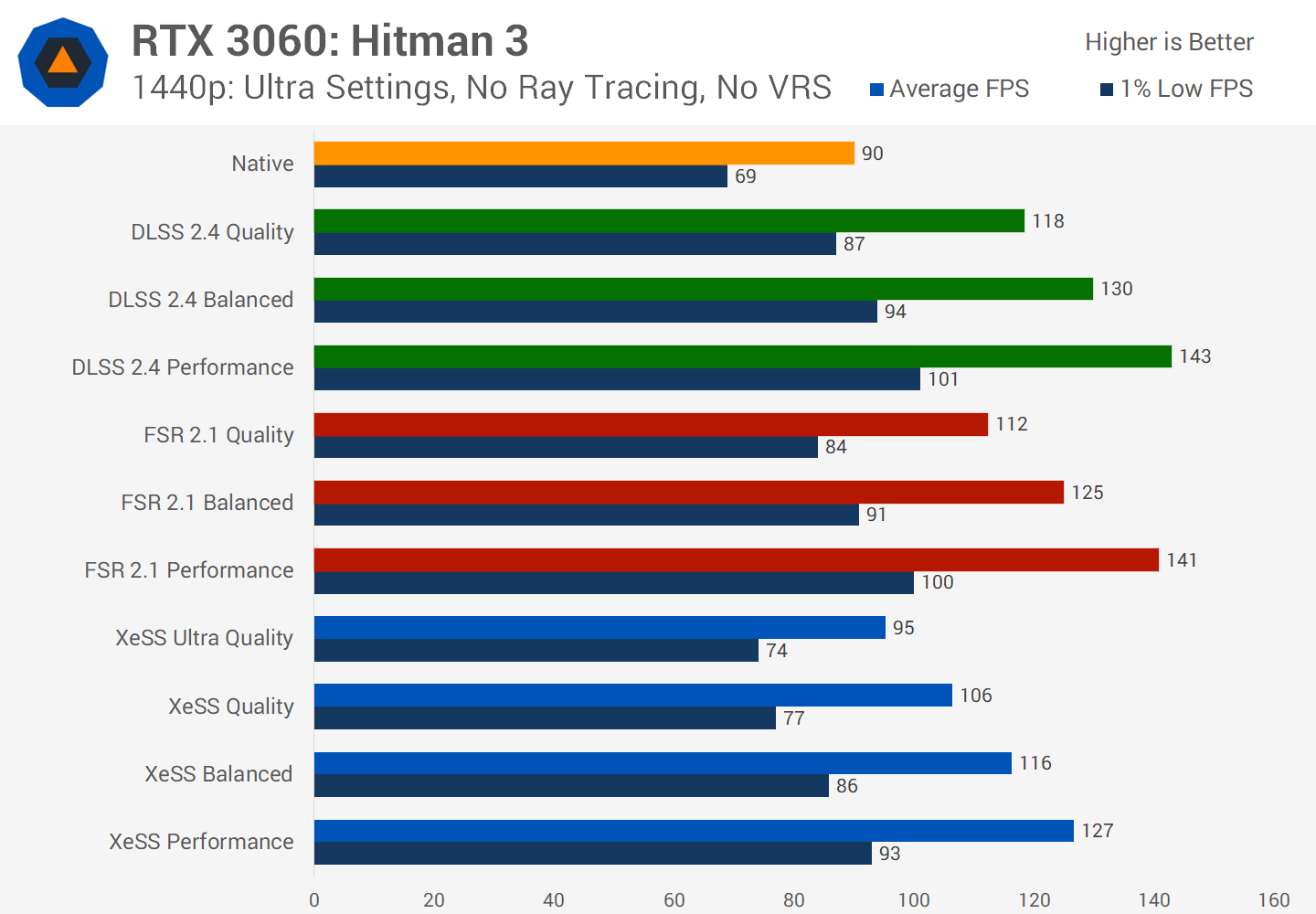

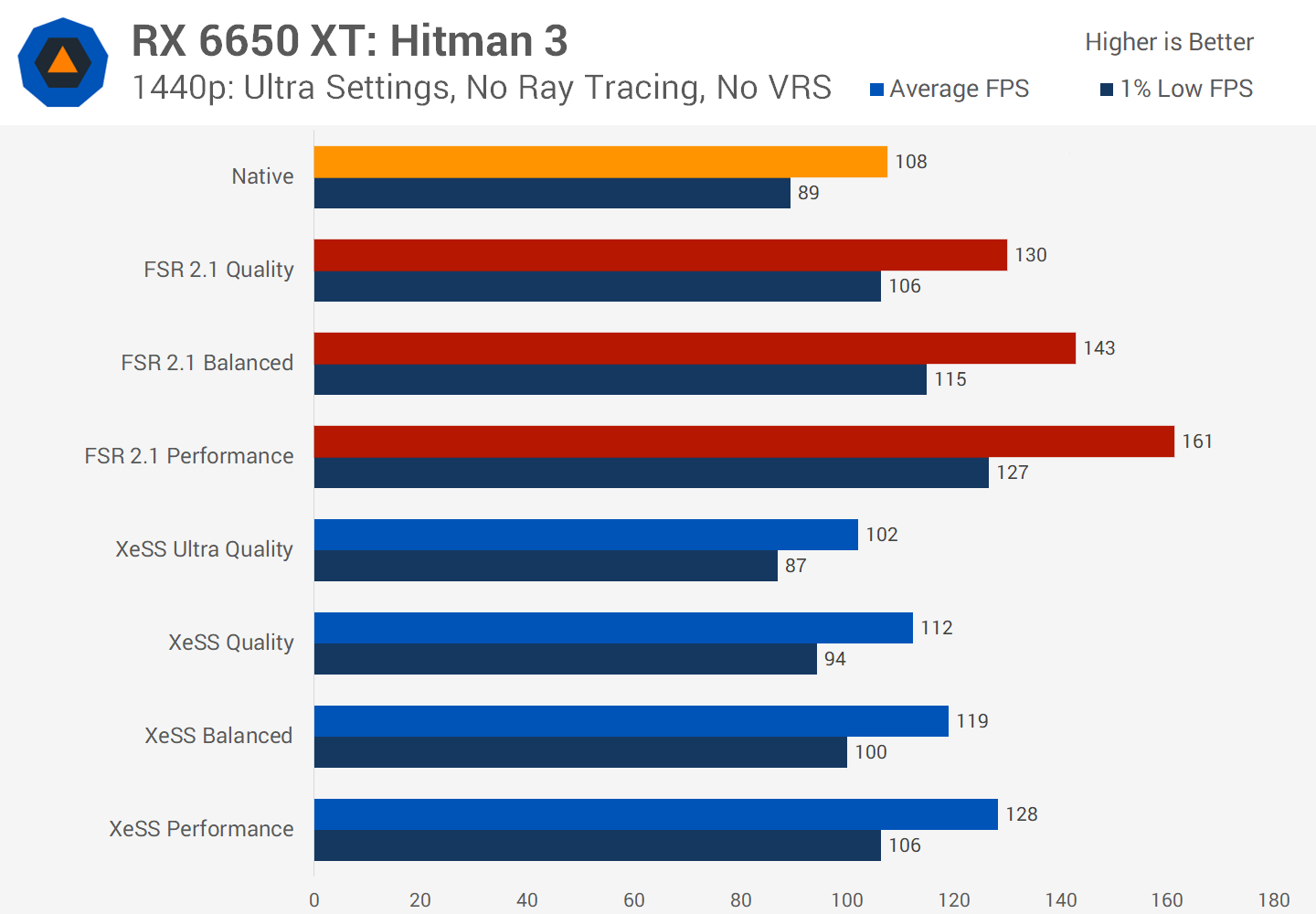

At 1440p it's a similar situation in Hitman 3. The closest XeSS setting to DLSS and FSR Quality modes was the Balanced option, and the Performance mode only matched that of DLSS/FSR Balanced, with DLSS performing the best of the three upscaling techniques. At least with this resolution, XeSS Ultra Quality did provide a small 6% performance uplift over native rendering.

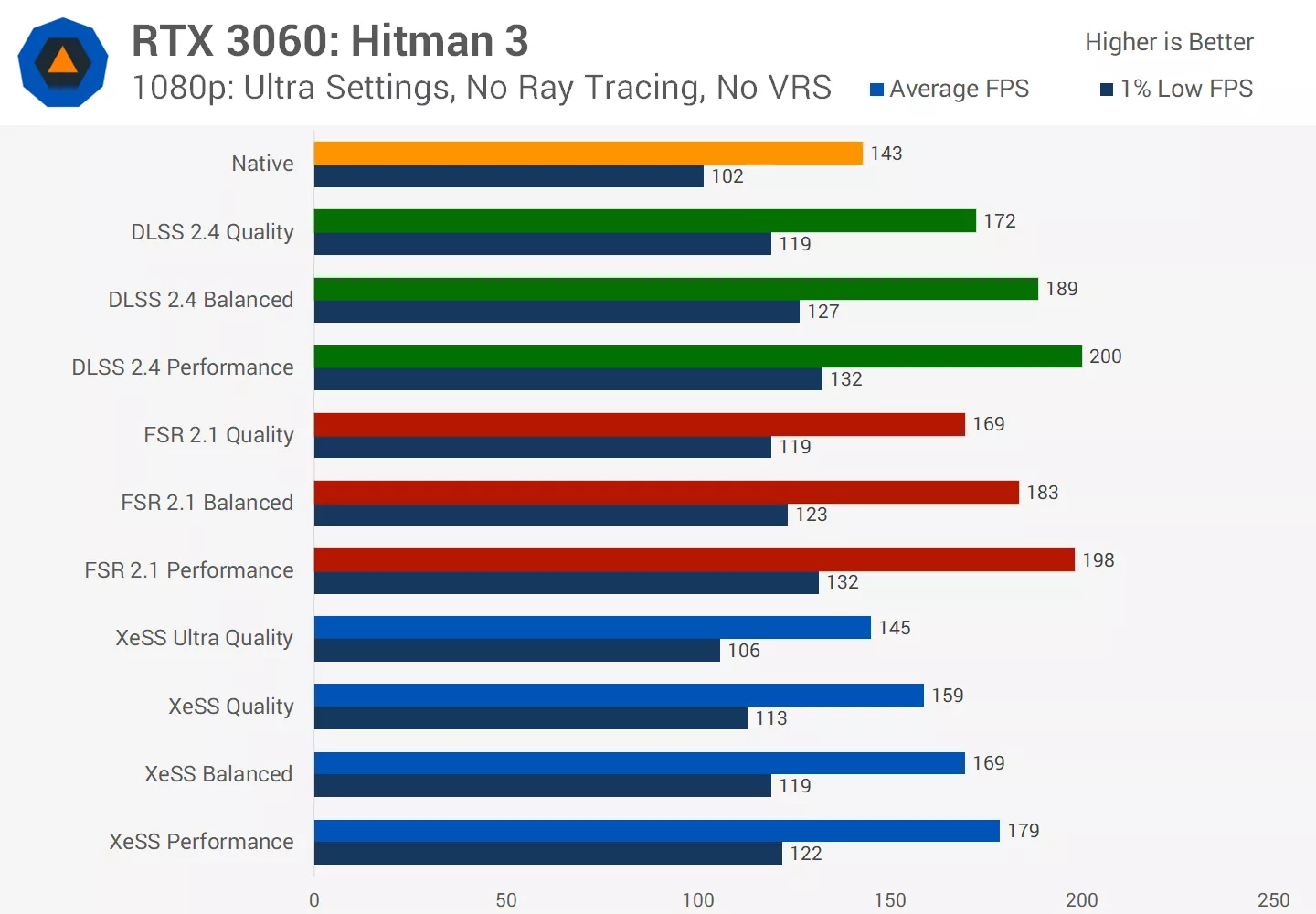

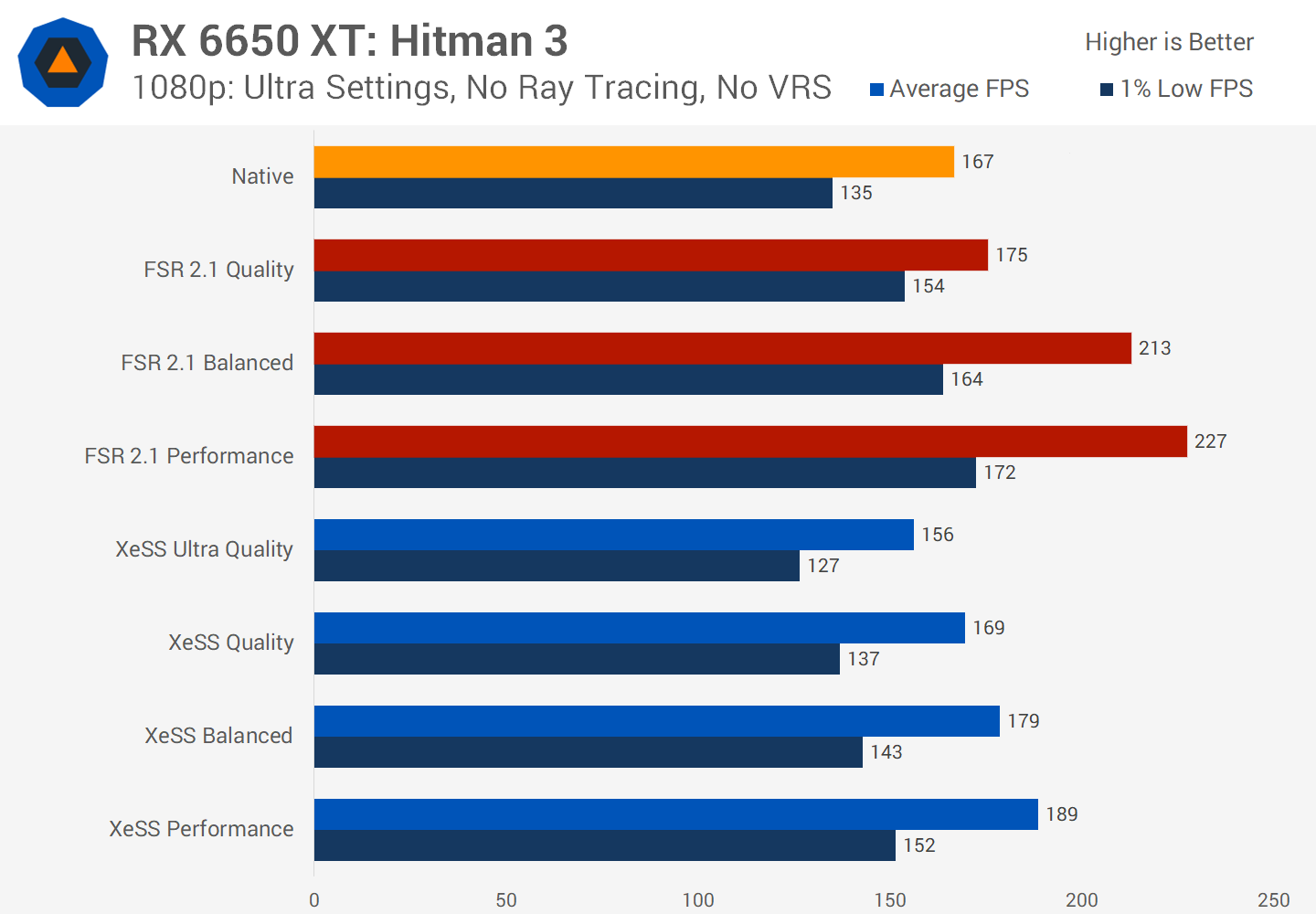

At 1080p, similar results. DLSS/FSR Quality matches up with XeSS Balanced, DLSS/FSR Balanced match up with XeSS Performance. We typically don't recommend gamers use upscaling at 1080p and Hitman 3 is no exception with the RTX 3060, as the game at least in this benchmark is already running at over 140 FPS natively.

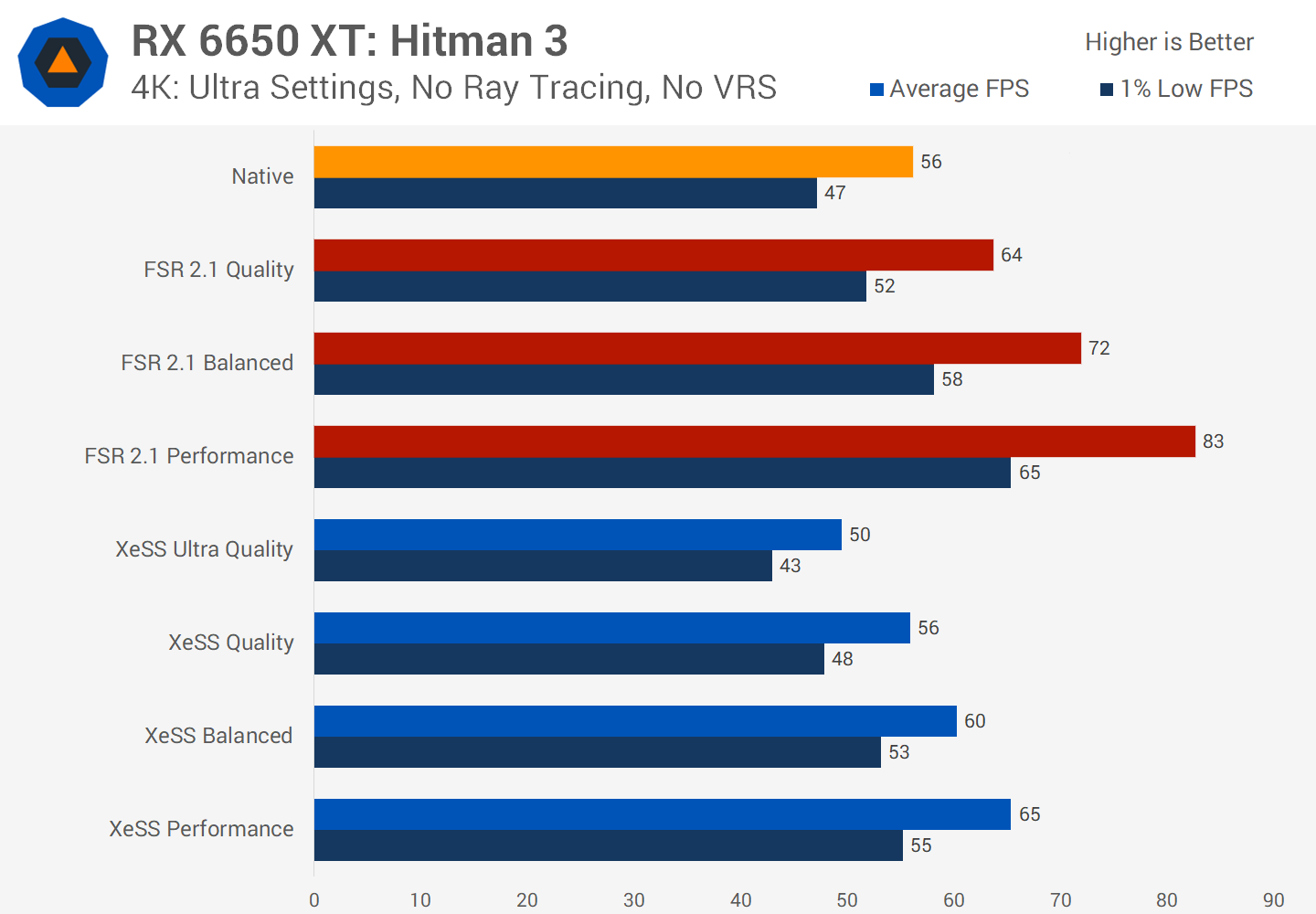

The results are surprisingly different when we move over to AMD's Radeon RX 6650 XT. XeSS performs categorically worse on RDNA2 hardware than it does on Ampere.

When using the XeSS Ultra Quality setting we see a performance regression at 4K compared to native rendering, and now only the Quality setting is able to match the performance of native. This has big implications for the comparison between XeSS and FSR: we needed to turn XeSS all the way down to the Performance mode to match the FPS of FSR running at the Quality setting. Ouch.

This also holds true at 1440p. XeSS Quality runs a little better than native here but it's not a great showing compared to FSR, given that FSR Quality offers a 20% boost in performance over native rendering, but XeSS Quality only manages a 4% boost. We see the same behavior at 1080p, where the Ultra Quality mode reduces performance and the Quality setting is only a minor bump.

Spider-Man Remastered Performance

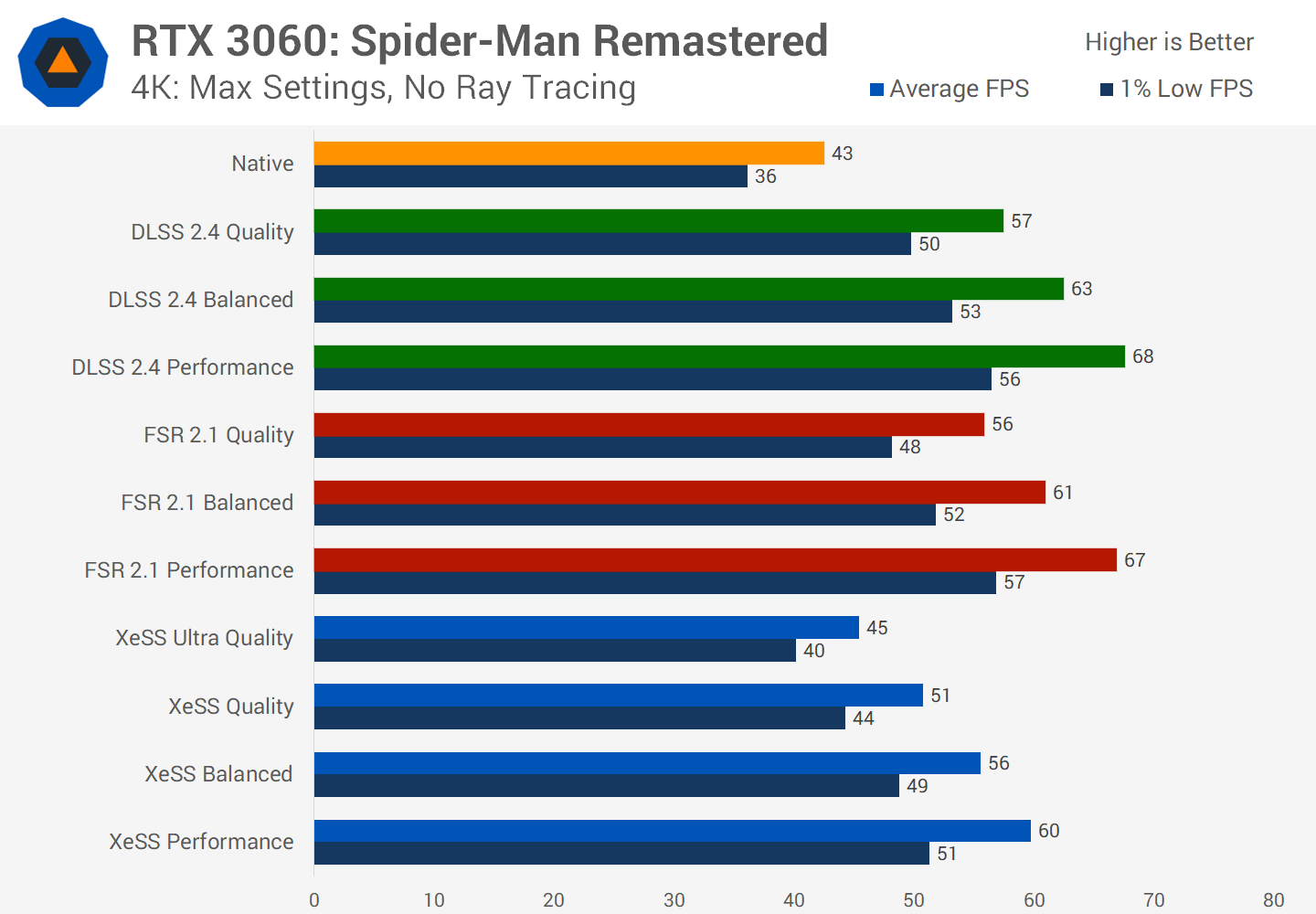

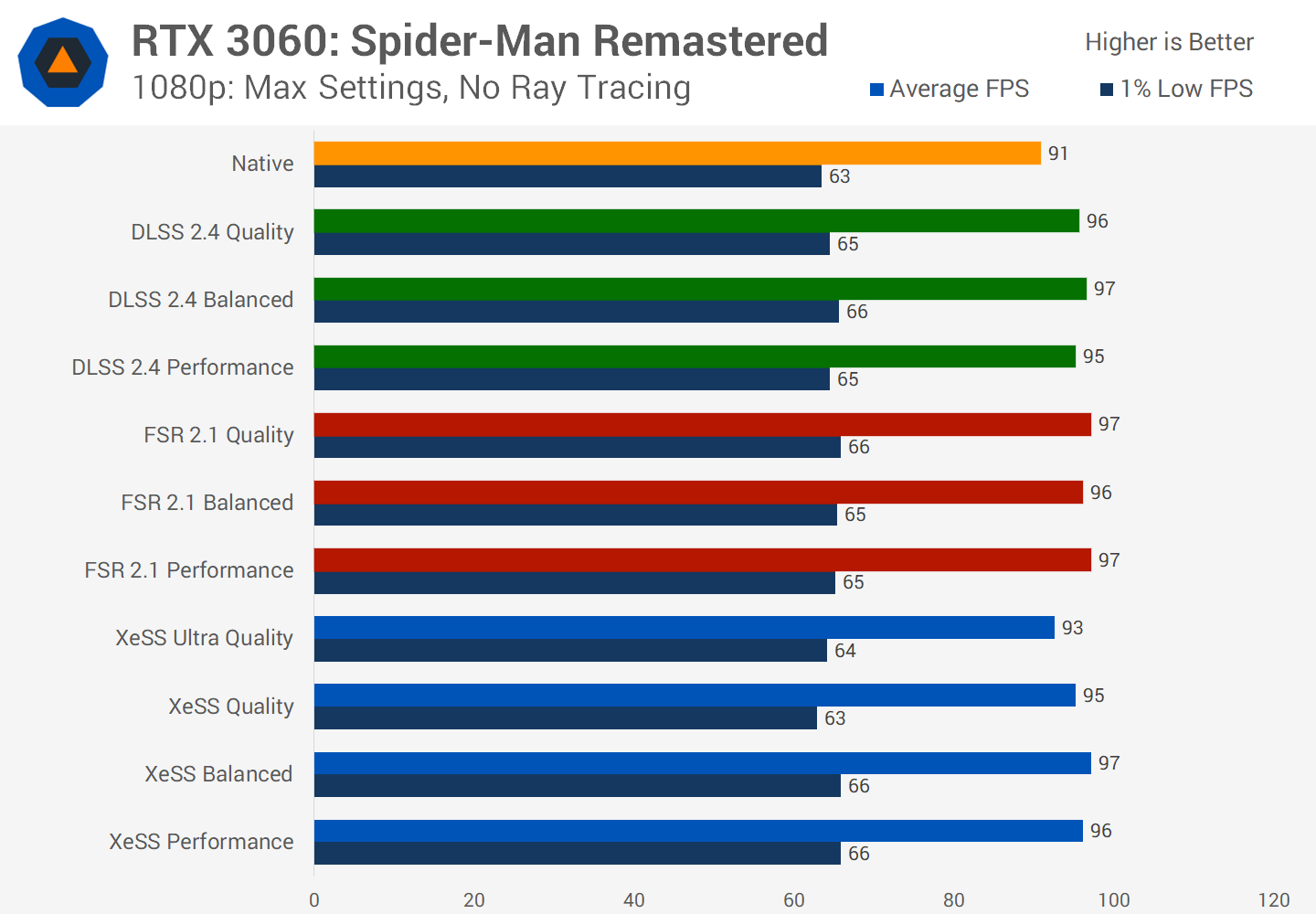

In Spider-Man Remastered we're back on the RTX 3060 to look at 4K performance using max settings without ray tracing. This is a perfect candidate for upscaling as the game only ran at 43 FPS on average natively, so upscaling is a great choice to hit 60 FPS.

However like in Hitman 3, XeSS is a notch below DLSS and FSR in the performance uplift it brings. DLSS/FSR Quality mode is only equivalent in performance to XeSS Balanced, while DLSS/FSR Balanced equals XeSS Performance. At 4K on this Nvidia GPU the Ultra Quality mode essentially delivered the same performance as native rendering.

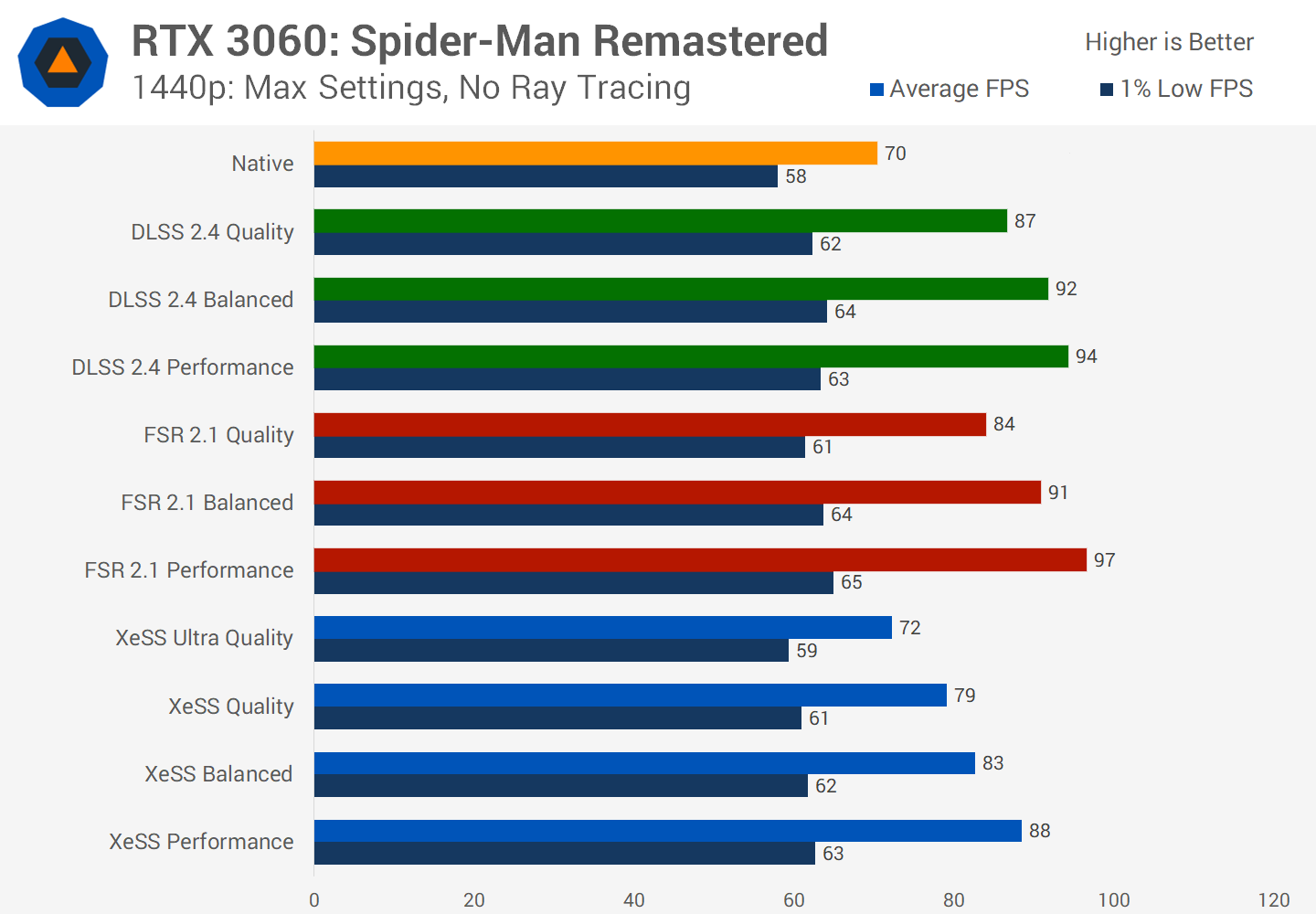

At 1440p the results are more dire for Intel's upscaler. At this resolution in Spider-Man only the XeSS Performance option was able to match DLSS's Quality mode, while XeSS Balanced matched FSR Quality.

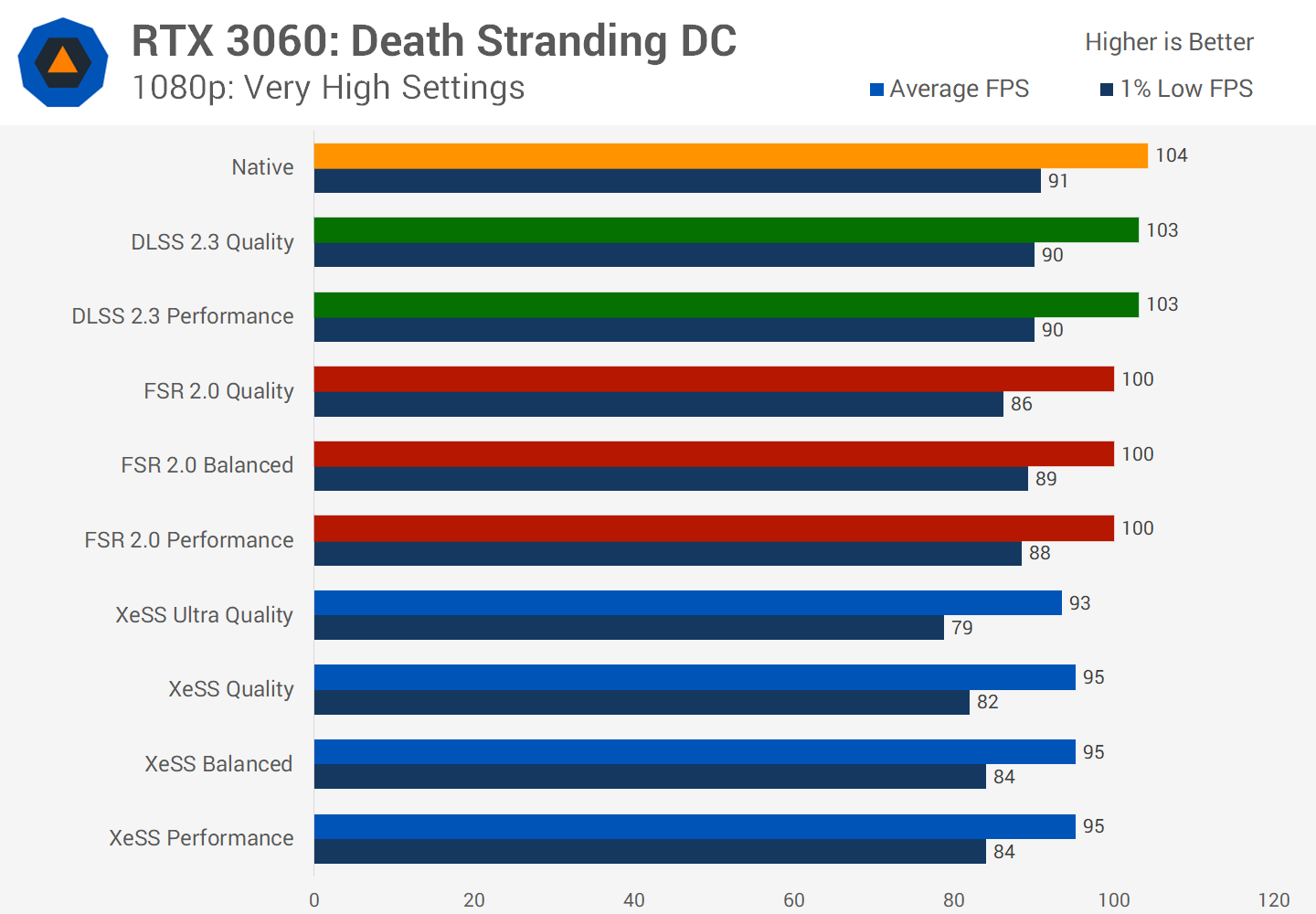

While XeSS Quality and Balanced did provide an uplift compared to native rendering, it just wasn't that impressive compared to DLSS. Meanwhile at 1080p in this title upscaling is not effective, at least on the Ryzen 9 5950X.

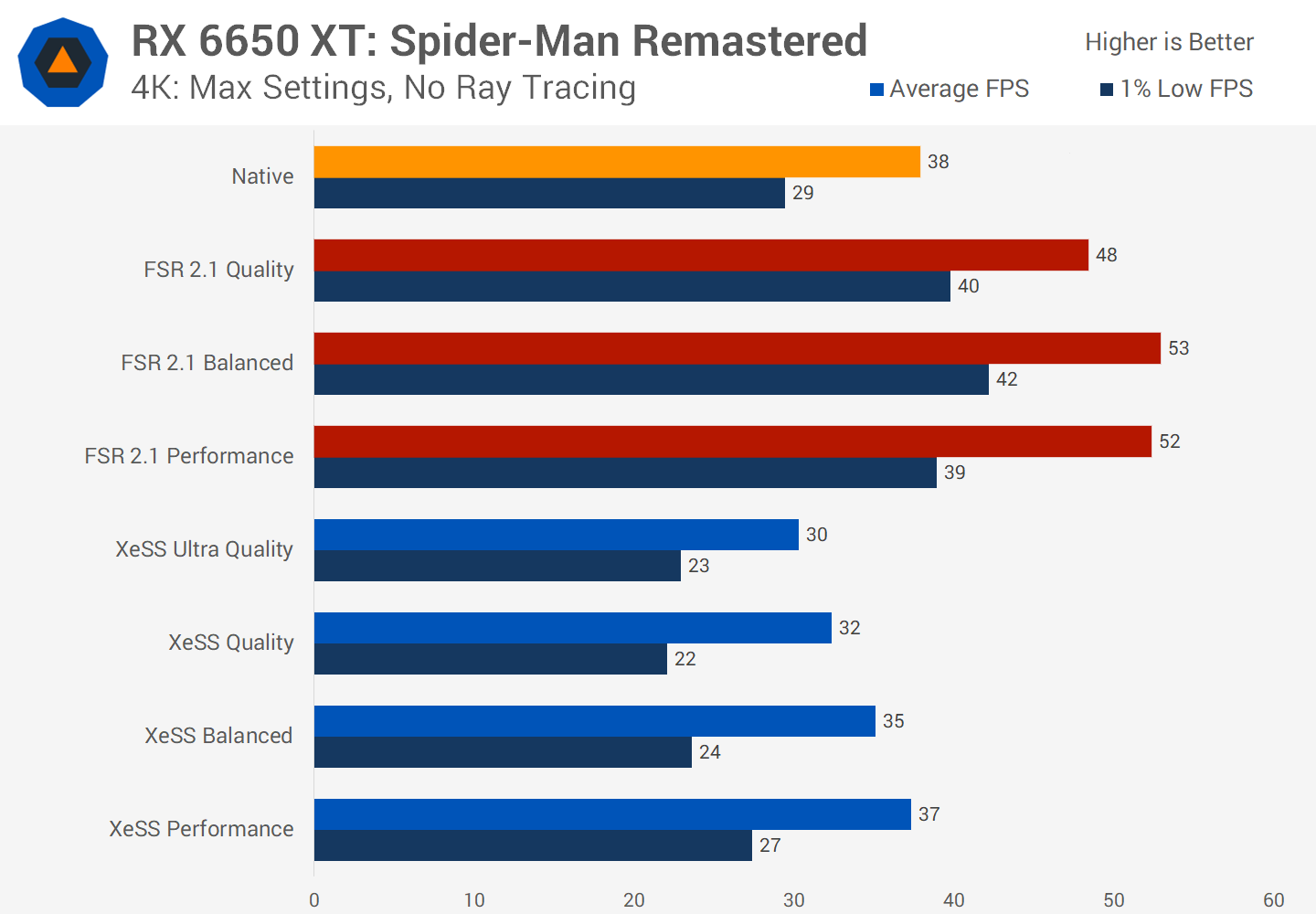

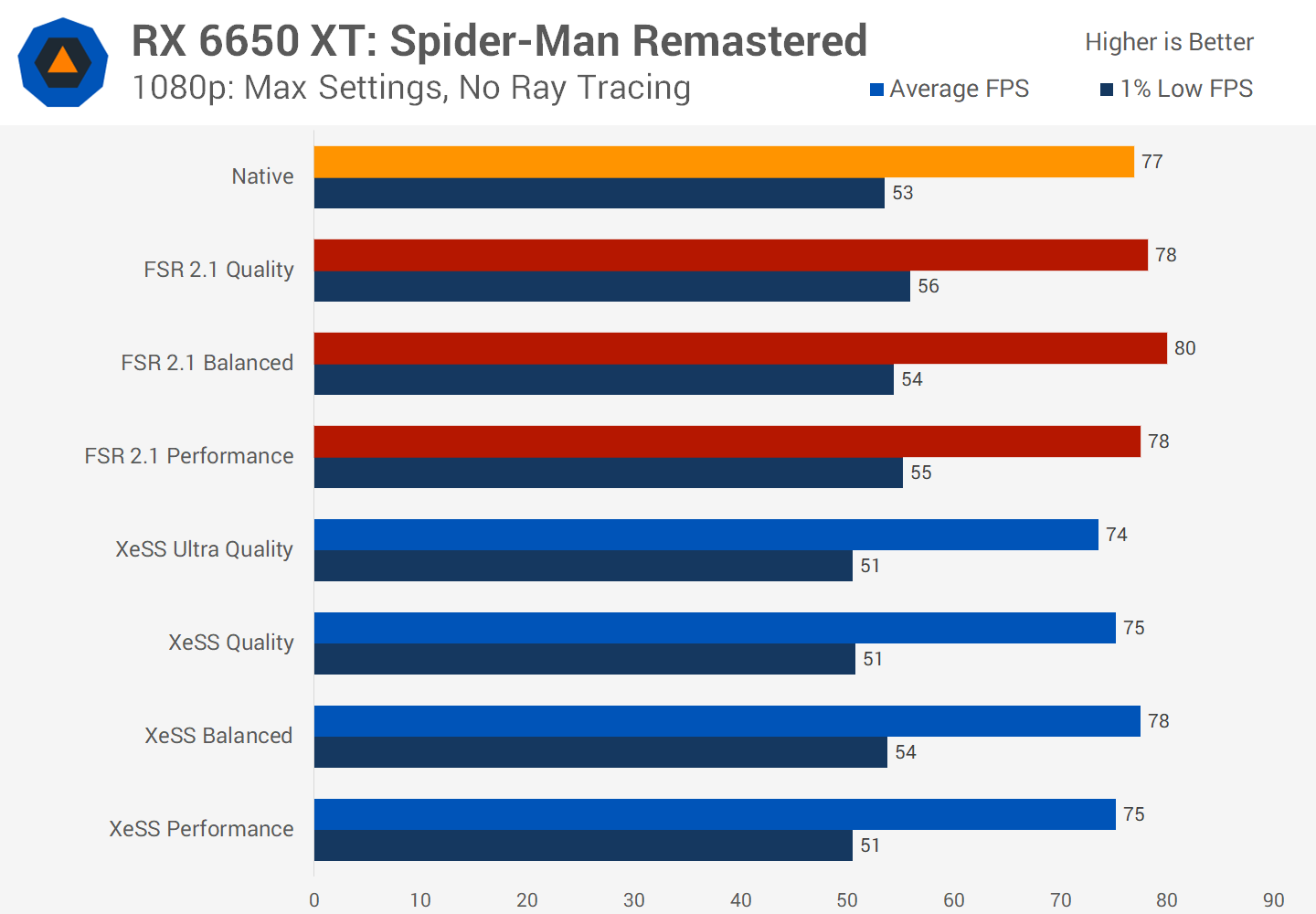

In Spider-Man, XeSS is disastrous on the RX 6650 XT at 4K. All quality settings delivered worse than native performance, although the Performance mode did roughly match that of native rendering.

For some reason XeSS really choked up on this GPU, and the results surprised me a bit given at least a few options were viable in Hitman 3. Unfortunately, though, FSR is simply far better at delivering a performance uplift here, with its Quality mode delivering a 26% performance improvement, compared to a 21% performance regression using XeSS Quality.

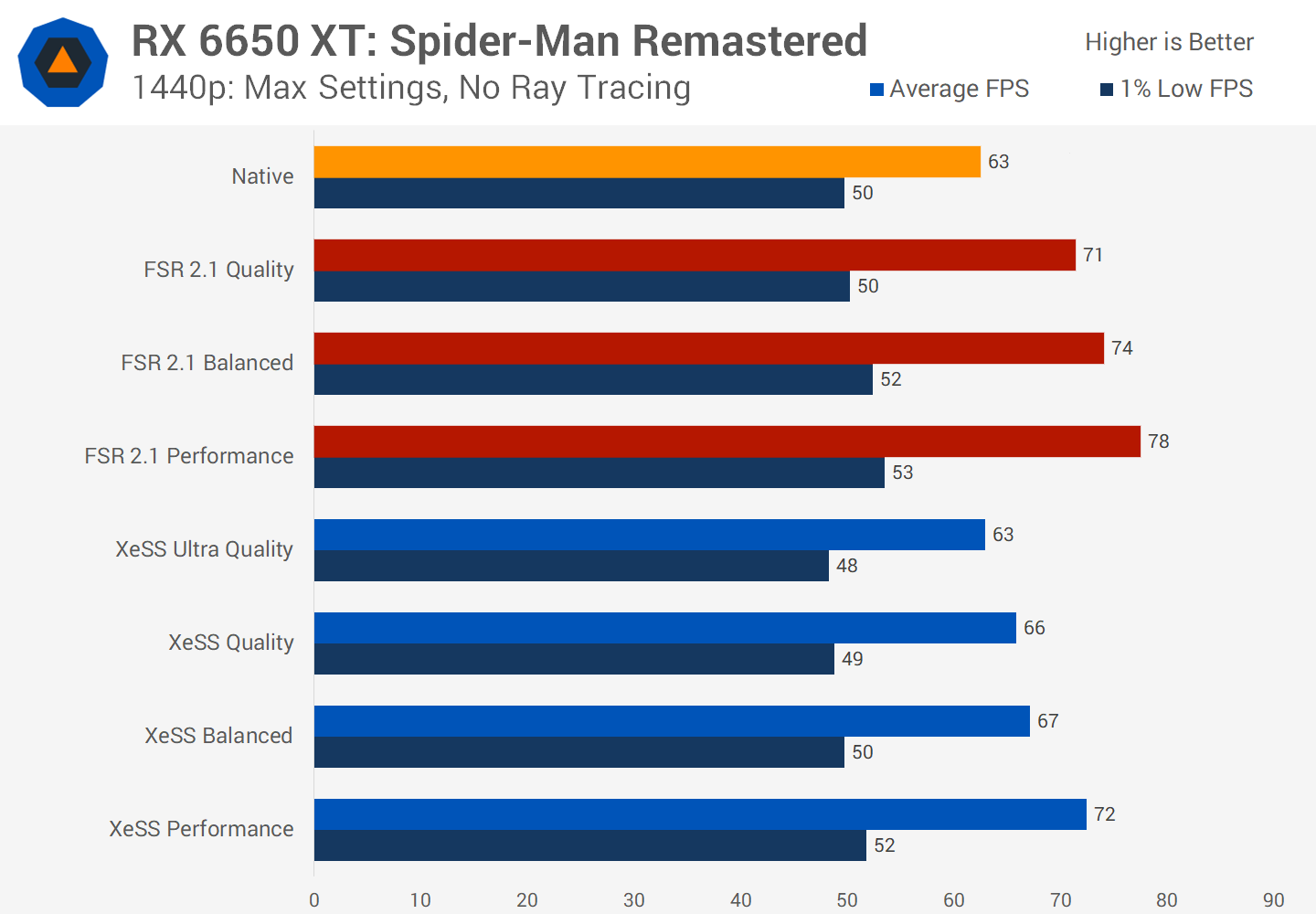

Thankfully, XeSS is more effective at 1440p on the 6650 XT. While the Performance mode is only able to match FSR Quality, at least the settings from Quality and lower do provide a performance benefit relative to native rendering, and it's only the Ultra Quality mode that sees no benefit. Similar to the RTX 3060, there's no point using upscaling at 1080p in this title.

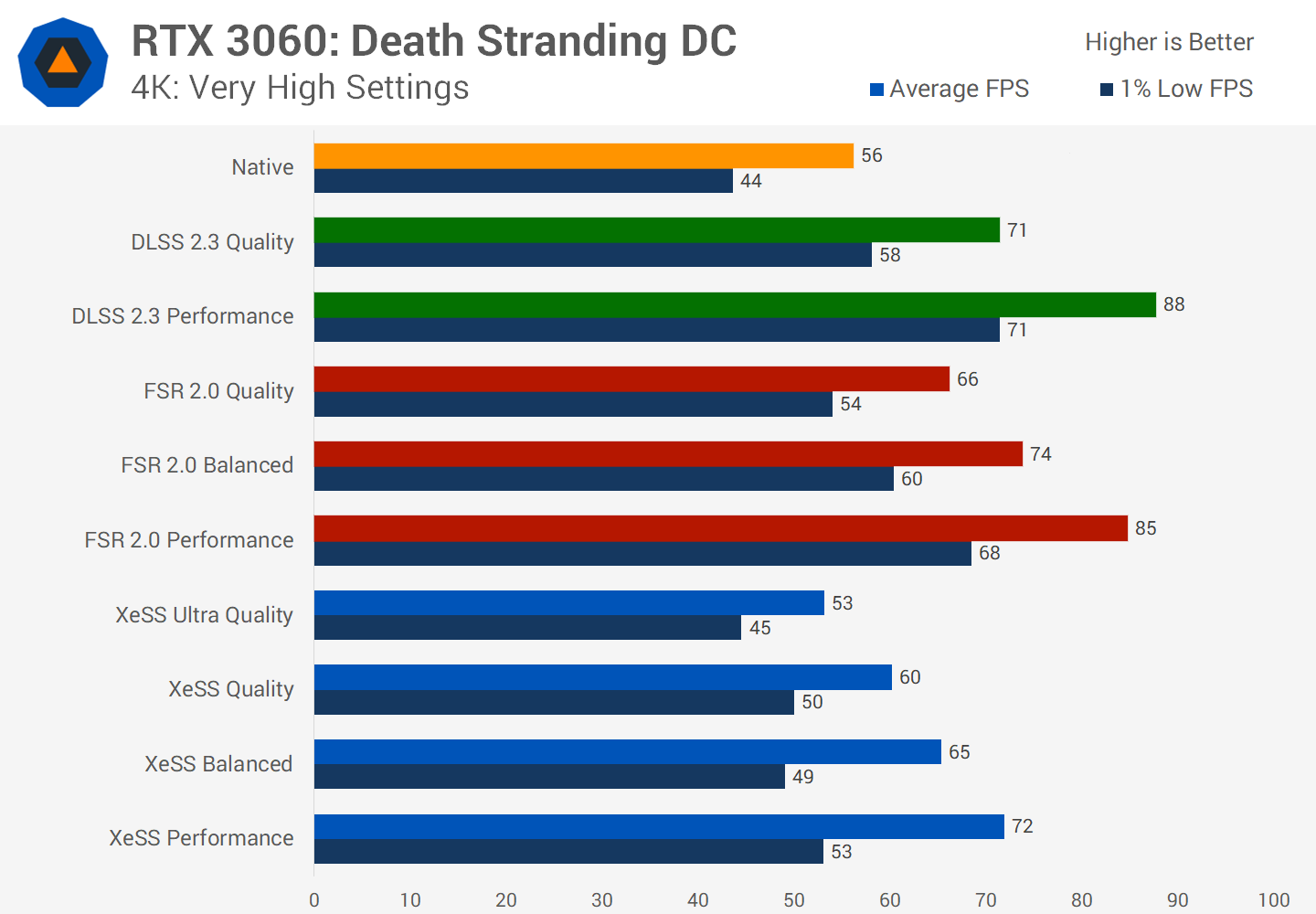

Death Stranding Director's Cut Performance

The third game we are looking at today is Death Stranding Director's Cut. We chose these three games because they are the main titles that offer DLSS 2, FSR 2 and XeSS upscaling.

In this title XeSS doesn't look so flash even on the RTX 3060. At 4K using Very High settings XeSS Performance mode was the only setting to offer comparable performance to DLSS Quality, while XeSS Balanced matched up with FSR Quality. The Ultra Quality mode also saw a performance regression compared to native rendering and to a greater extent than what we saw in Hitman 3.

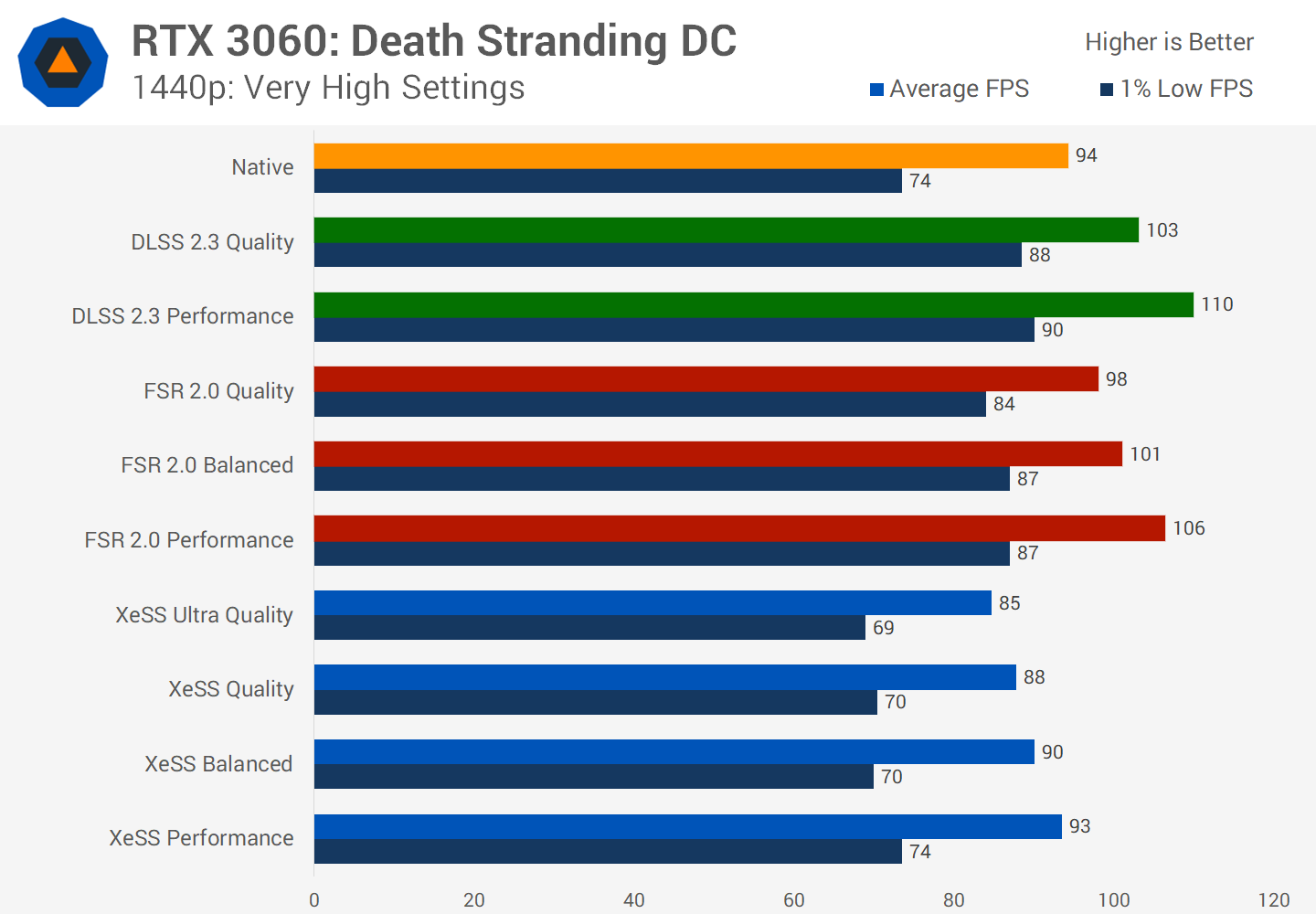

Meanwhile, at 1440p, XeSS is functionally useless on the RTX 3060. The Performance mode here is only able to match native rendering, while both DLSS and FSR provide a performance uplift, not a substantial uplift by any means, but at least some sort of increase.

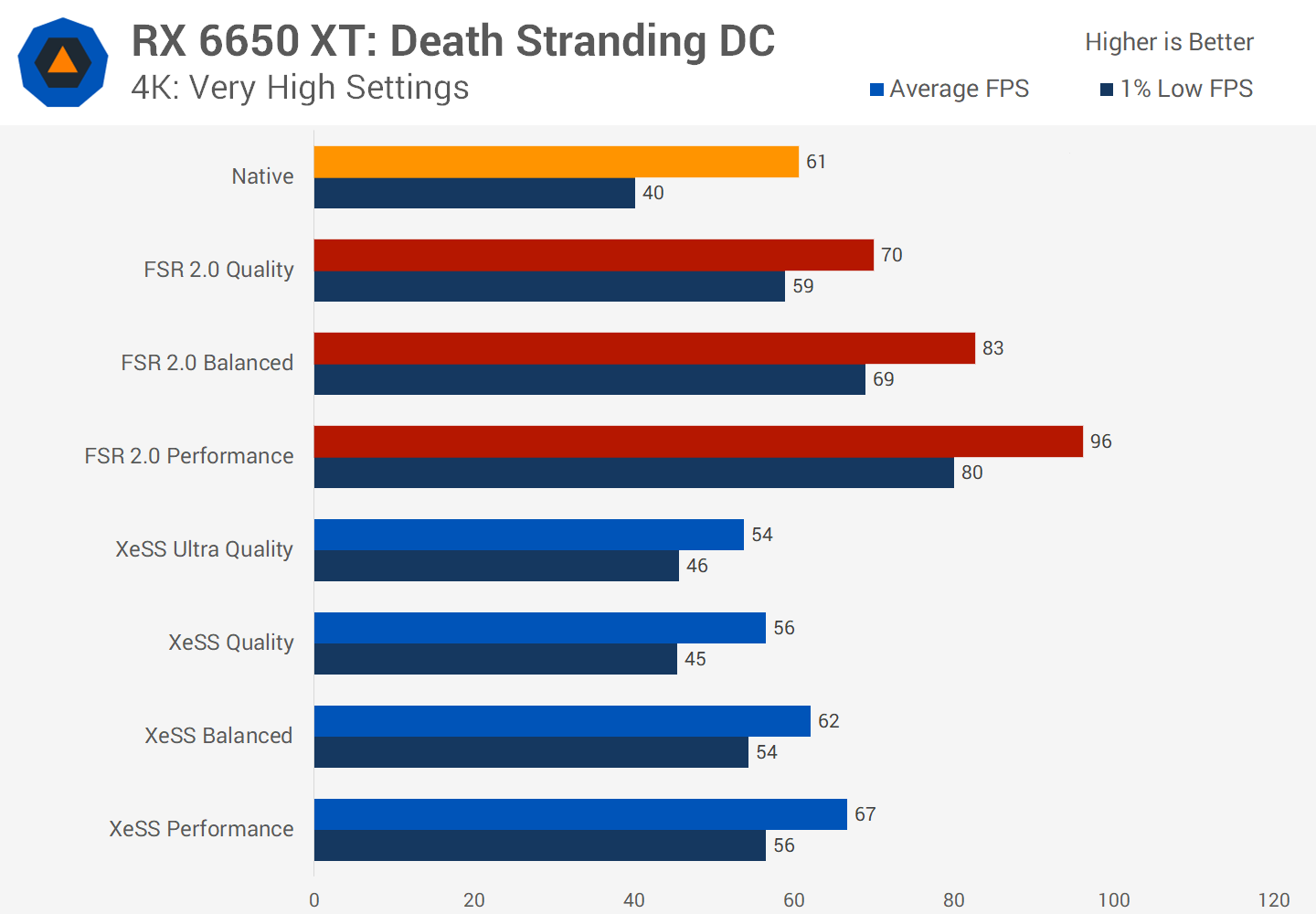

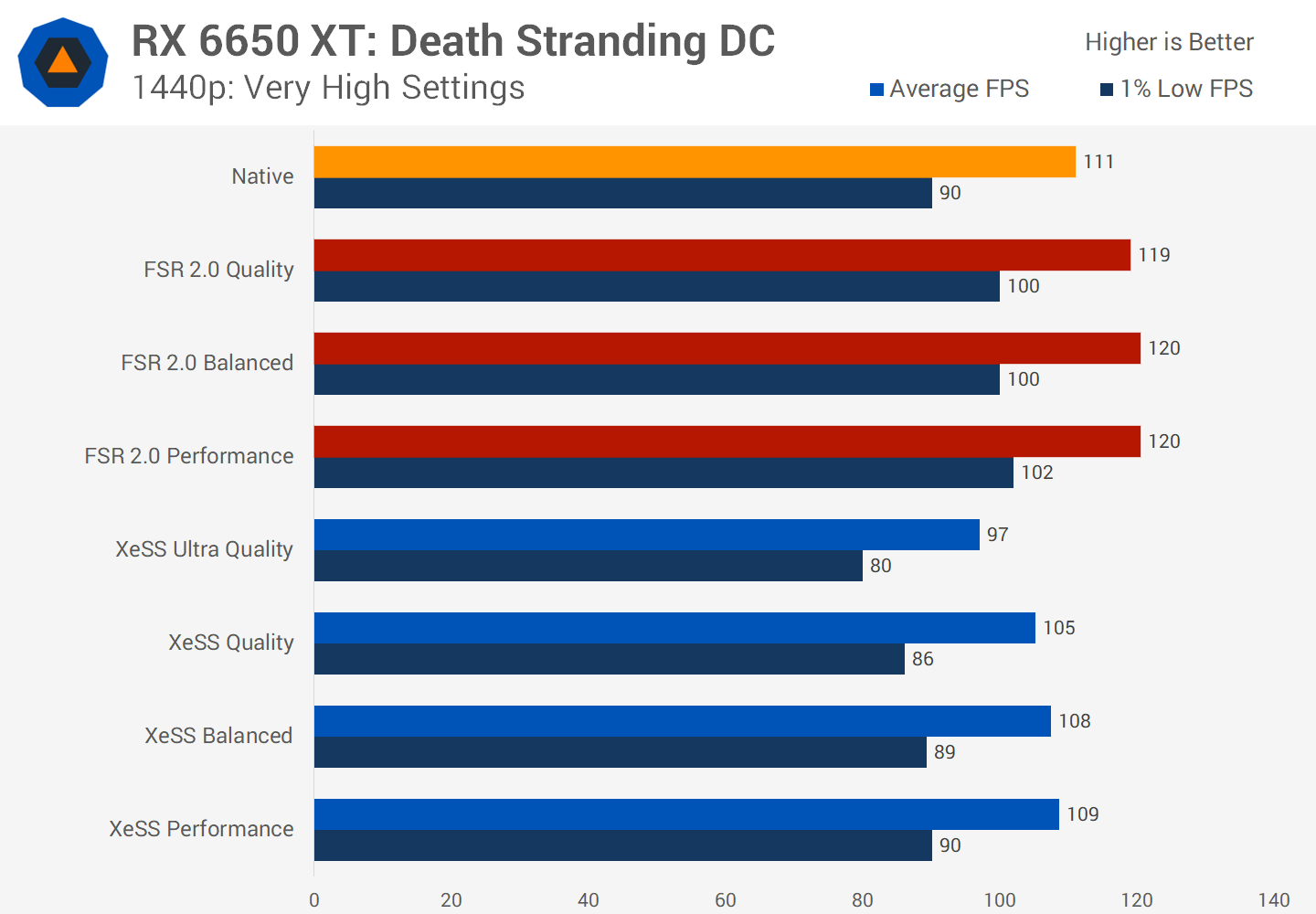

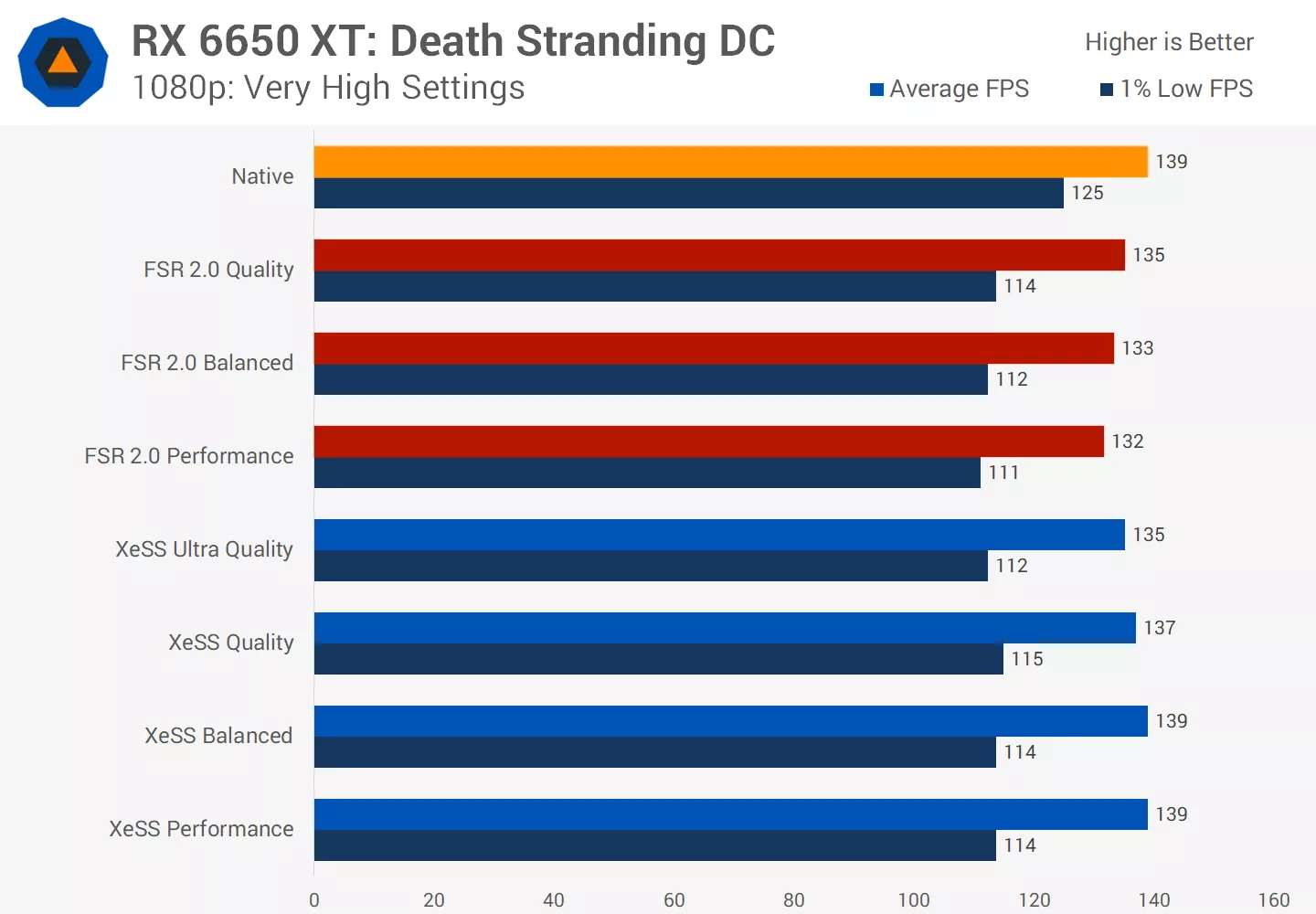

On the RX 6650 XT, XeSS in Death Stranding is a bit better at 4K relative to Spider-Man. However XeSS still runs slower than on the RTX 3060 relative to FSR. Here on AMD's RDNA2 GPU, XeSS Balanced was only able to match native rendering, with both the Ultra Quality and Quality modes providing a performance regression. Meanwhile FSR 2's Quality setting is able to outperform XeSS Performance.

At 1440p both FSR and XeSS do hit a wall in performance, FSR providing about 120 FPS while XeSS delivers less than 110 FPS. I think this illustrates well that XeSS is a more intensive upscaling technique on GPU hardware, so whenever these sorts of walls are hit, the overhead of XeSS tends to cost gamers more performance than using the comparatively lighter weight FSR algorithm. In this situation, FSR provides a performance uplift, while XeSS sees performance go backwards slightly.

The performance data we've gathered is pretty illuminating as to how XeSS runs on Nvidia and AMD hardware, and we certainly weren't expecting such a discrepancy between the two main GPU brands.

Even though the RX 6650 XT offered better native performance in two of the three games, XeSS consistently ran worse on RDNA2 than Ampere and delivered a lower performance uplift than FSR 2. In some cases it was basically useless as all modes offered lower FPS. XeSS ran okay on the RTX 3060, but wasn't able to match either DLSS or FSR at the same render resolution.

Upscaling: Nvidia Configuration

This makes visual quality comparisons a little bit trickier than we were expecting.

On Nvidia hardware, if we normalize for performance, the matchups are typically as follows: XeSS Ultra Quality vs Native, XeSS Balanced vs DLSS and FSR Quality, and XeSS Performance vs DLSS and FSR Balanced. However, XeSS in Death Stranding runs worse than this, so those are almost a best-case scenario.
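
Normalizing for performance boils down to picking, for each reference setting, the XeSS mode whose frame rate lands closest to it. A minimal sketch of that matching step is below. The function name is hypothetical, and the FPS values are placeholders for illustration only, not measurements from this article.

```python
def closest_mode(target_fps, candidates):
    """Return the mode whose average FPS is nearest to target_fps."""
    return min(candidates, key=lambda mode: abs(candidates[mode] - target_fps))


# Placeholder numbers for illustration only, NOT results from our testing.
xess_fps = {
    "Ultra Quality": 50.0,
    "Quality": 57.0,
    "Balanced": 63.0,
    "Performance": 70.0,
}
dlss_quality_fps = 64.0

match = closest_mode(dlss_quality_fps, xess_fps)
print(f"Compare DLSS Quality against XeSS {match}")
```

With those placeholder values the helper pairs DLSS Quality with XeSS Balanced, mirroring the kind of matchup described above.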

Upscaling: AMD Configuration

On AMD hardware performance was more variable, though typically XeSS Performance matched up against FSR Quality. In some instances XeSS didn't even match native rendering though, so that's something we'll have to keep track of when exploring the visual differences - let's get into them now.

Hitman 3 Image Quality

Our first visual match-up is Hitman 3 at 4K with an Nvidia performance normalized battle pitting DLSS Quality and FSR Quality up against XeSS Balanced, showing the opening cinematic from the Dubai level.

There's no sharpening slider here, and DLSS uses the most aggressive sharpening of the three, so its image tends to look the sharpest. DLSS also has the best image stability and best reconstruction of fine details, although at times FSR 2 is slightly better for reducing aliasing on fine wire details.

For a better representation of image quality comparisons, check out the Hardware Unboxed video below:

At times XeSS looks okay, and can deliver better fine detail reconstruction than FSR that's closer to DLSS. However, this is offset by lower image stability and in particular, visual artifacts that are noticeable without zooming in. XeSS doesn't deal with volumetric lighting as well as FSR or particularly DLSS, and in this final scene there's some really horrible flickering with XeSS that isn't present with either of the other reconstruction techniques.

On AMD GPUs we have to compare XeSS Performance to FSR Quality in this title to get an accurate look at performance normalized visuals. This further hurts XeSS compared to what we saw in the Nvidia comparison: the XeSS Performance mode isn't very good and exacerbates any flickering and image instability we saw previously. This is clear right from the early parts of this cinematic where you'll see reflections flicker, and any time I found an issue with the FSR image (like aliased fine details), typically that issue was worse with XeSS.

Even when we put XeSS up against FSR at the same quality setting, in this case the Quality mode with both rendering at a 1.5x scale factor, XeSS isn't always better. It does have better image stability at times and better fine detail reconstruction, but it still suffers from jarring artifacts that FSR 2 just doesn't have. Considering it also runs worse in this configuration on both the RTX 3060 and RX 6650 XT, it's hard to recommend.

The next scene is Hitman 3 gameplay with FSR and DLSS Quality modes up against XeSS. This alley has a lot of fine detailed elements like wires, mesh and cages, which all favor DLSS reconstruction. Not only is the DLSS image sharper – perhaps too sharp – but it deals with all of these fine details the best of any technique. Clearer lines, less flickering, better image stability.

XeSS vs FSR is a more interesting battle, with the image quality trading blows depending on where you look. Despite rendering at a lower resolution, XeSS does a better job of reconstructing some fine elements. There's more rain present for example, which looks more like the DLSS image. However, there are clearly some issues with how XeSS is handling the reflections with distracting flickering and a low resolution appearance. FSR is doing a much better job here of delivering a like-native presentation, similar to what DLSS is doing.

When viewing the differences in an AMD performance normalized condition, XeSS suffers badly from having to drop the quality setting down to Performance mode, which matches FSR 2 Quality mode. All the artifacts we've been talking about are amplified, and more are introduced. For example, I no longer believe XeSS in this configuration is as good as FSR at reconstructing fine details, with more shimmering noticeable in the XeSS image.

Also, looking at the Dartmoor benchmark which shows off particle effects, DLSS 2 has the best image quality again, but I think XeSS Balanced does a decent job of reconstructing all the particles with less sizzling and a higher level of quality than FSR 2's Quality mode. I don't think FSR looks terrible or anything, but in my opinion quality was noticeably lower than XeSS in this scenario. This is also true in our AMD configuration pitting FSR Quality against XeSS Performance, the particles still look decent on the XeSS side, but when looking at other areas the image quality issues with the Performance mode are noticeable once more.

One final example in the video above shows an area where XeSS outperforms both DLSS and FSR visually, and that's in the laser show from the Hitman 3 Berlin level. XeSS does a better job of reconstructing the moving laser beams than either of its competing technologies despite a lower render resolution used for this performance normalized comparison. The XeSS lasers look like nice consistent beams, while the DLSS lasers are very aliased, and the FSR lasers are blurred and less visible. A good result for Intel's upscaling technology.

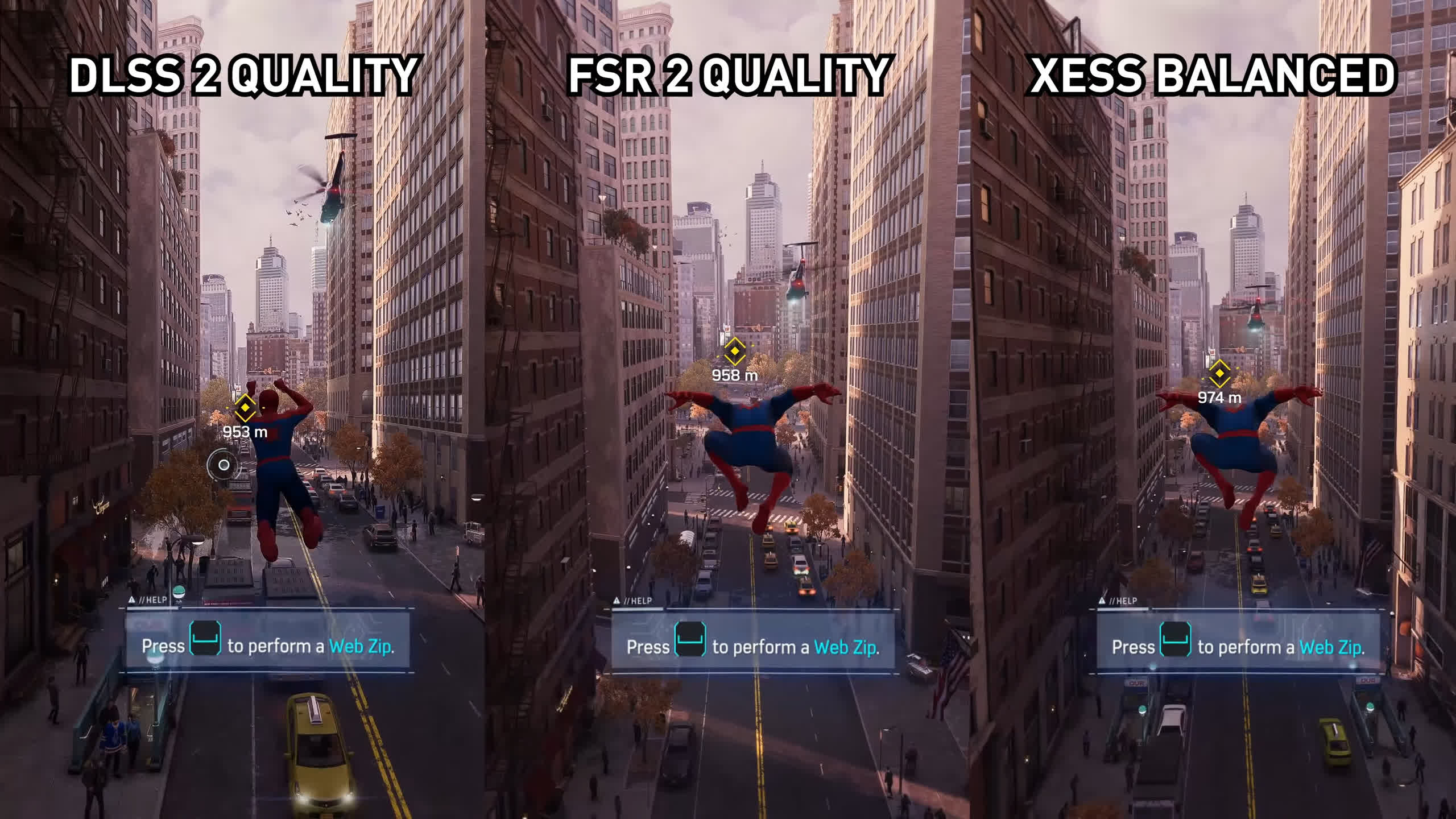

Spider-Man Remastered Image Quality

Moving over to Spider-Man Remastered, we'll start with a walking comparison using our Nvidia configuration. Lots of foliage in this scene and honestly it's pretty hard to spot any major issues with any of the rendering techniques.

For the FSR and DLSS images we had sharpening set to 5, which ended up making DLSS look a bit sharper and perhaps too sharp. Meanwhile XeSS doesn't allow sharpening adjustment and looks the softest as a result. DLSS had the most stable image with the least flickering and aliasing, though this wasn't much of a concern with FSR or XeSS either.

For our AMD configuration we've included three visual options: native, FSR Quality and XeSS Performance. This is because on the 6650 XT at 4K, XeSS ran similarly to native performance, but we're also interested in seeing how it compares to FSR using its highest quality mode, which also runs the best out of these three options.

Native clearly looks the best, although FSR Quality looks respectable given it delivers higher performance. However, it's hard to make a case for using XeSS Performance mode here. Not only is the image less detailed, it's also less stable with weaker fine detail reconstruction and occasional flickering. We're not seeing artifacts that dominate the screen, but we wouldn't choose to use XeSS in this configuration.

Out of interest we swapped out the FSR Quality footage for FSR Performance, giving us a look at FSR vs XeSS both at a 1080p render resolution. It's a much closer battle visually, XeSS has some shimmering issues on fine details, while FSR has some sizzling around Spider-Man.

We think the XeSS footage has a small advantage, but you'd really hope that was the case given FSR ran about 40% faster.

Naturally, a big part of a Spider-Man game is swinging around the city so we took a look at that. You'll have to excuse the syncing of the footage on the video above, it was just very difficult to do that in this gameplay sequence. In our Nvidia configuration, the DLSS image looks the best, no doubt about it.

DLSS has the least ghosting, least shimmering, least sizzling and best image stability. At times it's only a small advantage in favor of Nvidia's reconstruction, but it's something I noticed playing the game.

For the other techniques you can focus on two areas which may not have been apparent on the first playthrough of the footage. The FSR Quality image has trouble reconstructing Spider-Man's swinging web, there's a bit of ghosting and sizzling around that element as the web occludes various objects on the screen. Doesn't look amazing. This isn't as much of an issue with the XeSS image, however XeSS has its own problems. If you focus on the buildings in the background, the XeSS image has noticeable flickering when reconstructing the windows, and while there's a bit of flickering in the FSR presentation as well, it's much more noticeable and jarring on the XeSS side.

In our AMD configuration we put XeSS Performance side by side with FSR Quality, and this may not be a fair comparison as FSR performs better in this configuration at 4K, but it does highlight the issues XeSS has on AMD hardware. The visual quality just isn't great using XeSS in this way and I would choose FSR instead every single time, despite some issues with the FSR presentation.

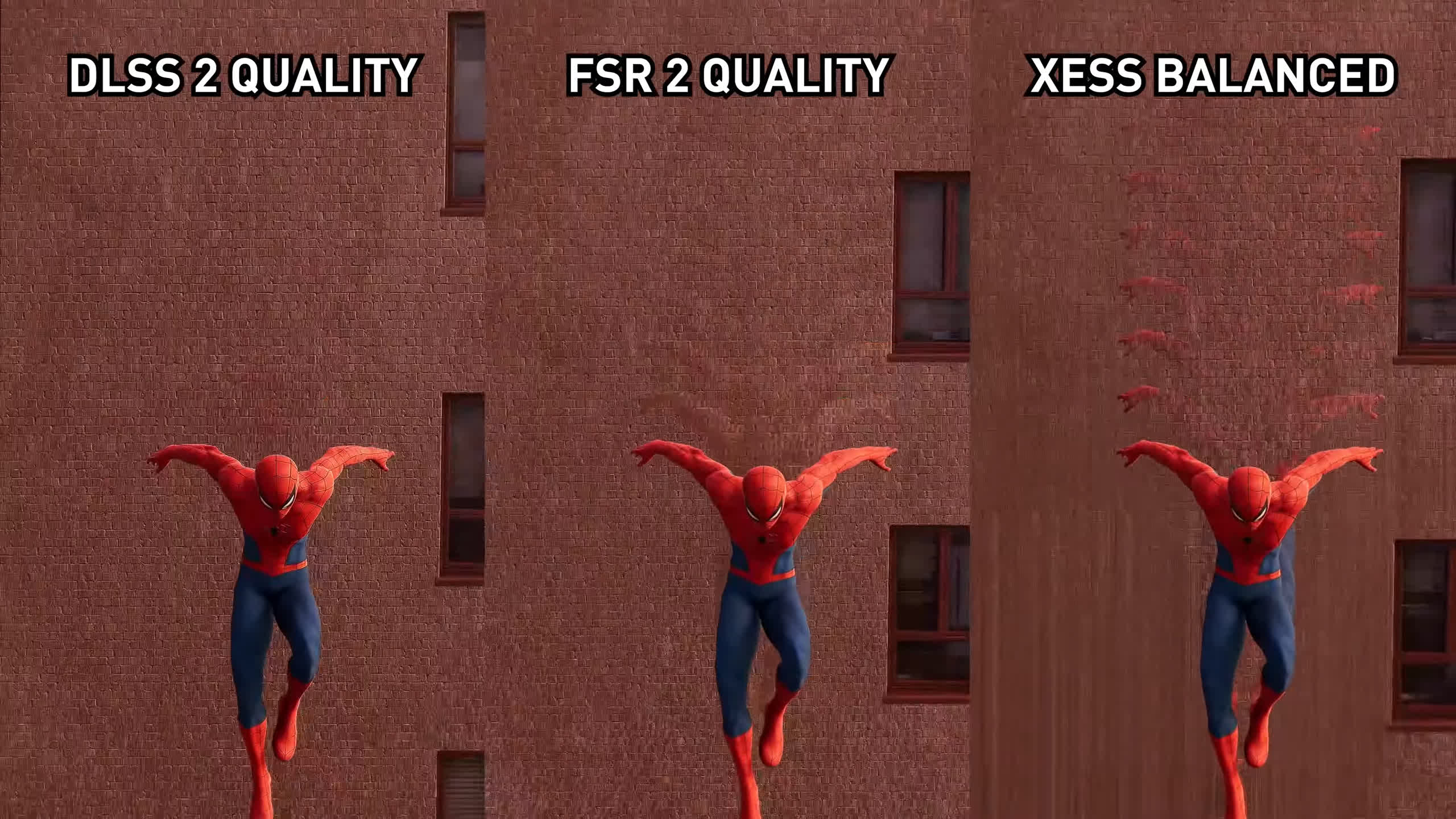

Image stability is an issue with XeSS even when you're not moving. Take this rooftop scene. In our Nvidia performance normalized configuration, XeSS shows clear flickering and issues without any movement on screen at all. DLSS is very stable and looks excellent, FSR has some minor stability problems for fine details, while XeSS looks pretty bad. It's only when we swap out the XeSS Balanced footage for XeSS Quality, so that all three are rendering at the same base resolution, that image stability improves to a similar level to FSR.

However, if we then normalize for performance on an AMD system and compare FSR Quality to XeSS Performance, it's once again not a good showing for Intel's technology, and really it's the slow performance of XeSS on RDNA2 that kills its usability. Were XeSS and FSR able to deliver similar levels of performance at each respective quality level, XeSS would be much more usable. But with performance normalized, FSR is definitely the way to go.

XeSS also looks the worst when we have all three techniques using their Performance mode. DLSS is clearly the most stable and looks the best; stability without motion in Performance mode is one of the strengths of DLSS. FSR is a bit unstable, which is not ideal considering image quality is reduced relative to the Quality mode even without any motion. XeSS has the least image stability and the most flickering.

Our final look at Spider-Man is climbing this brick wall which is an excellent test for ghosting. In our Nvidia performance normalized configuration it's pretty obvious that DLSS is handling motion the best, with the least amount of ghosting. There is still a small amount of ghosting, but it's difficult to spot in real gameplay using the Quality mode. FSR and XeSS on the other hand are... not great.

Especially when we jump down from the top of the building, FSR has moderate ghosting that's clearly visible in real time, while XeSS has severe ghosting that looks absolutely atrocious. When we slow down the footage you can see that not only does the XeSS image have a huge ghost trail, the brick texture work is blurred, too. XeSS struggles with this and simply doesn't have the optimizations that DLSS has.

This scene was perhaps the most noticeable difference we'd seen comparing XeSS to FSR and DLSS in Spider-Man and it crops up in many places that aren't just this specific example. Both AMD and Intel need to put in work to reduce ghosting in these situations.

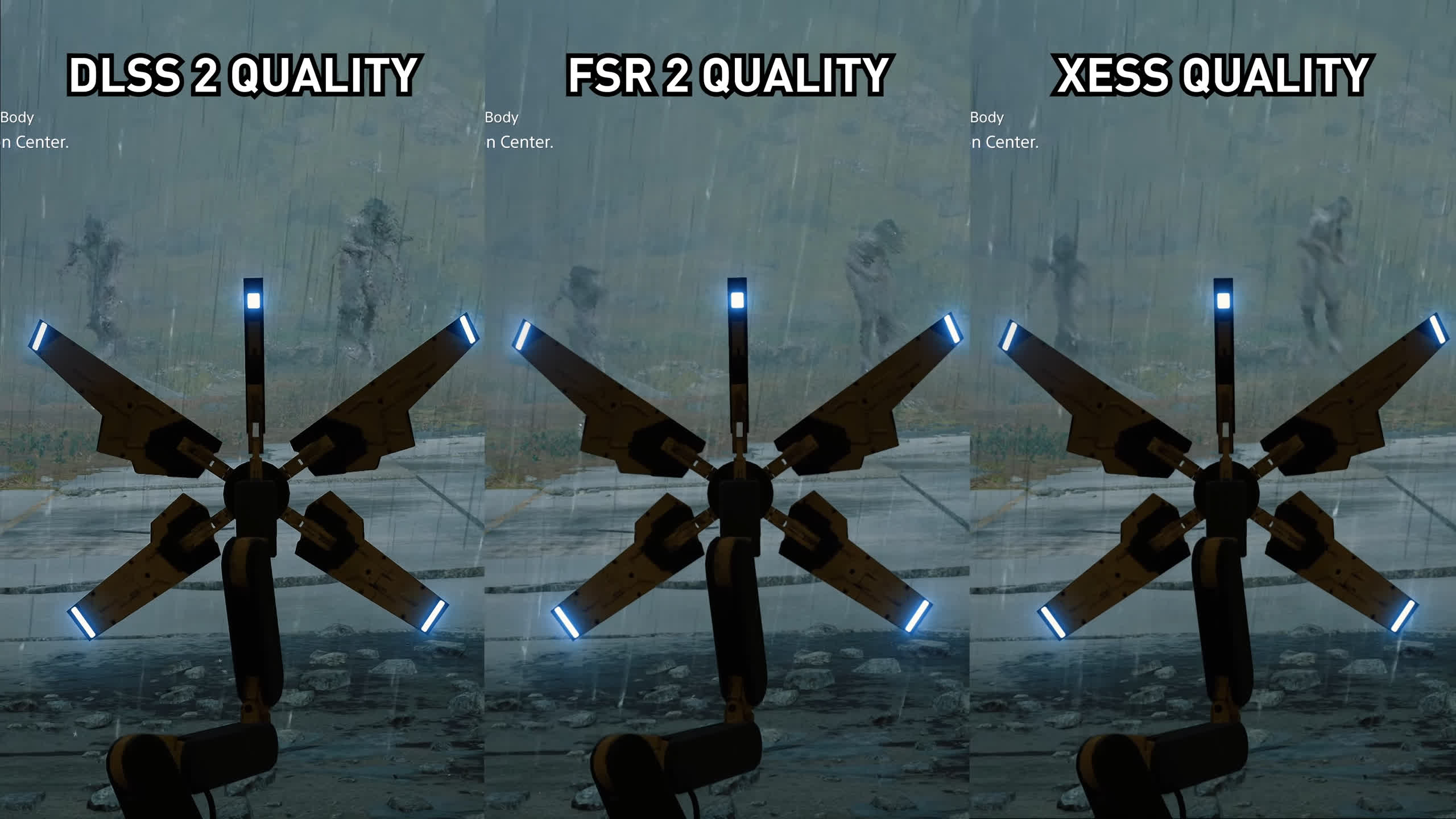

Death Stranding Image Quality

Death Stranding presented the worst performance for XeSS relative to DLSS and FSR of the three games we looked at. In this game, when performance normalizing for an Nvidia configuration, we have to compare DLSS Quality to FSR Balanced and XeSS Performance, which is not very favorable to XeSS...

This game is a great showing for DLSS as on the RTX 3060 it had a clear performance lead, which allows it to be run at a higher render resolution in these performance normalized comparisons. Everything we've been saying about DLSS throughout the article is true in Death Stranding as well.

DLSS offers better image clarity and stability, which depending on where you look ranges from a minor to moderate advantage. Overall stability wasn't significantly better than FSR at times, but there was a large difference compared to XeSS which had to be run in its Performance mode to achieve a similar frame rate.

As we've shown several times now, the XeSS Performance mode is not very good and I wouldn't recommend using it as it produces a lot of artifacts. Not only that, but in this game XeSS Performance appears to reduce the quality of some textures relative to the other modes as well, which may indicate an issue with the way the technology has been implemented.

However it's not all bad for XeSS. FSR is also not great in Death Stranding, even though the Director's Cut provides FSR 2. There is lots of ghosting present in the FSR image that isn't visible in either DLSS or XeSS. Unlike in Spider-Man, where XeSS had the most severe ghosting, it's FSR with the problematic ghosting in this title. Even in these comparisons though, XeSS can't match DLSS.

Normalizing performance on an AMD system pits FSR Quality mode up against XeSS Performance mode once again, and honestly I'm not a huge fan of either presentation. XeSS is the worse of the two with noticeable artifacts and image stability issues across the whole screen, but I also found FSR 2's ghosting quite annoying. In this game you should be trying to run at native resolution, which is pretty achievable even using modest hardware.

One last visual comparison was looking at Death Stranding's BTs, which have a lot of particle effects and movement. Here we normalized for quality settings, so we're looking at DLSS Quality vs FSR Quality vs XeSS Quality all rendering at the same base resolution. A best case scenario for XeSS given it runs the slowest here on both AMD and Nvidia hardware.

DLSS once again looks the best of the three reconstruction techniques, it handles this effect really well so we end up with a nice amount of particles and minimal distortion. FSR does okay but it's not amazing and inferior to the DLSS presentation, some particles are preserved but there's some artifacting as well. XeSS clearly looks the worst. Intel's reconstruction is blurring the BTs and removing a significant number of particles, and that's despite using the Quality mode.

What We Learned

After all that image quality and performance analysis, what are we to make of Intel's Xe Super Sampling? For those of you who have Nvidia or AMD GPUs, which today make up the vast majority of desktop PC gamers, there's no reason to use XeSS at all.

Intel's upscaling technology performs worse than Nvidia's DLSS 2 and AMD's FSR 2 at the same render resolution, and in most situations it produces worse visual quality as well. It's a bit of a brutal double whammy, which is disappointing given what the technology was promising to do.

For those with an Nvidia RTX GPU, there is categorically no reason to use XeSS when other techniques are available. DLSS is the obvious choice, it delivers the best performance uplift across the games we tested, and the best visual quality. DLSS 2 is in a really nice spot in terms of optimization, we noticed the least amount of visual artifacts using this technology, so it's a no-brainer for Nvidia RTX owners: DLSS is the way to go if you want a performance boost.

For those who don't have an RTX GPU, whether you're an AMD Radeon owner or a GeForce GTX owner, there's also little reason to use XeSS. On RDNA2 hardware as an example, XeSS runs badly and is at a disadvantage up against FSR 2. It's common for XeSS to reduce performance relative to native rendering using the Quality or Ultra Quality modes, leaving performance parity with FSR 2 in a bad spot where the Performance mode only matches FSR 2's Quality mode. Under these circumstances, XeSS looks worse than FSR most of the time.

But even if we used the same quality settings across DLSS 2, FSR 2 and XeSS, I'd often place XeSS in third place for visual quality. Intel's upscaler has issues with visual stability and artifacts that are less prominent with FSR, and basically a non-issue with DLSS. XeSS does handle fine detail reconstruction better than FSR at times, and the overall presentation is much better than DLSS 1.0 as an example, but too often my gaming experience was impeded by distracting temporal issues. It's just not good enough to have more visual issues and worse performance than other options available to gamers when making an upscaler that's available to all GPU owners.

Because XeSS isn't worth using on AMD and Nvidia GPUs, it's also not worth integrating into games. Developers would be better placed spending their time elsewhere, whether that's optimizing the DLSS and FSR implementations, or just working on something else. Even if XeSS did work better on Intel GPUs - something we'll be exploring soon - the user base for Intel Arc is so small that it's not worth considering. To make matters worse, Intel are yet to convince us their Arc GPUs are worth buying, with average value and driver issues in their first-gen attempt.

We think XeSS also serves as good evidence that integrating AI technology isn't always the savior on its own. At launch, FSR 2.0 was criticized for not using AI tech, instead opting for a "hand-crafted" algorithm and yet FSR 2 is superior to XeSS on the majority of GPU hardware right now.

If you want to use AI to enhance upscaling, it needs to be combined with an excellent algorithm and plenty of optimization, which is what Nvidia has done with DLSS 2. Simply training and integrating a neural network isn't sufficient, especially if that neural network slows the algorithm.

What can Intel do to improve XeSS and make it worth using? Well, the big one is resolving visual artifacts. Intel needs to stamp out flickering and ghosting, and produce a much more stable presentation, especially using the lower quality settings. On top of that, Intel needs to significantly improve performance on Nvidia and AMD hardware, so that XeSS performs as well as FSR 2. If XeSS is to survive as a technology it needs to offer something better than FSR 2, and right now it is behind in both key areas. Only after matching FSR 2 should Intel begin thinking of tackling DLSS 2, because right now XeSS isn't close to Nvidia's AI upscaling technology.

At the end of this testing we've come away thinking XeSS is a bit of a fail. We understand that these days companies need a strong feature set to sell their GPUs and that XeSS was announced before AMD's FSR 2... but the end result has turned out a bit pointless. FSR 2 is already playing the role that XeSS was set to fill, putting Intel behind the 8-ball. Hopefully they can improve XeSS to the point where it's a really strong competitor, but there's a bit of work that needs to be done, that's for sure.