The Top Five Things You Need To Know About How Generative AI Is Used In Security Tools

We just released a huge new report, How Security Tools Will Leverage Generative AI, a collaborative effort across the entire Forrester security and risk team. This report looks at how generative AI will affect six different security domains: detection and response, Zero Trust, security leadership, product security, privacy and data protection, and risk and compliance.

There’s a lot of conversation around generative AI, but it’s still a mystery to many. According to Forrester data, AI decision-makers believe that IT operations (and that includes security) will be affected by generative AI more than any other department in the enterprise. Security leaders need to be prepared for this new technology to change how their teams operate. While the report goes into much more detail, below are the top five highlights that you need to know about how generative AI is used in security tools.

- Generative AI is not ready for primetime (yet).

Despite vendor press releases, this technology is currently available only to a select set of customers, if at all. Every vendor we mentioned in the report (and have spoken with) has at least a press release about the generative AI offering it’s building, but none of these offerings is generally available yet, and none is likely to become generally available before the first half of 2024.

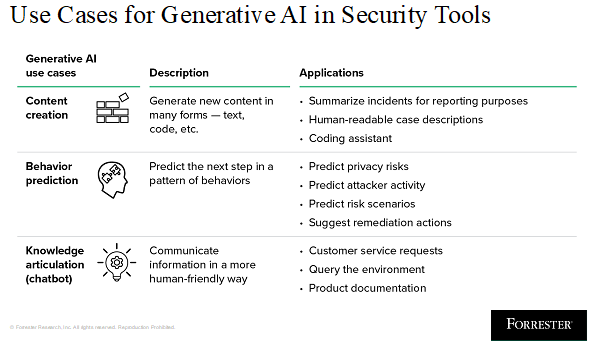

- Generative AI breaks down into three core use cases.

The three core use cases for generative AI in security are:

- Content creation. Generate many forms of content (e.g., text and code).

- Behavior prediction. Predict the next step in a pattern of behaviors.

- Knowledge articulation. Communicate information in a more human-friendly way.

These use cases can be applied in different ways for each security domain (threat detection, Zero Trust, etc.) and more broadly outside of security. Framing generative AI applications in buckets of use cases helps organize the value added for each tool, provides a sense of how it’s implemented, and gives an idea of how it uses data inputs.

- Generative AI can dramatically improve analyst experience if approached thoughtfully.

Many implementations of generative AI in security tools today rely on chatbot-style features housed in a separate view within an application. However distinctive this approach is right now, it does not fit naturally into the analyst workflow and ultimately amounts to little more than a novelty.

The real value in generative AI is in addressing tasks automatically that were previously part of the analyst workflow — for example, writing draft incident-response reports. Look for generative AI implementations that fit into the analyst experience to help analysts make decisions faster, not force them to use another view or tab.

- Generative AI will not replace your team.

Generative AI will facilitate the completion of tasks, not the replacement of people. Though much chatter around generative AI has focused on its potential to become sentient, that won’t happen anytime soon, if ever. The technology’s current limitations underscore this: frequent degradation in output quality, coherent-sounding but nonsensical output, and unresolved copyright and intellectual property concerns.

In fact, despite vendor messaging, generative AI is much more likely to successfully augment midlevel and senior practitioners than newcomers. Experienced staff will be able to make decisions faster because they know the right decisions to make, unlike new staff, who will be learning from a tool that is potentially incorrect. Treat this like any other technology by augmenting your existing workforce with its capabilities, particularly where it can speed up execution, even if the output is imprecise.

- Generative AI will not replace all artificial intelligence or machine learning.

The use of AI and ML in security tools is not new. Almost every security tool developed over the past 10 years uses ML in some form. For example, adaptive and contextual authentication has built its risk-scoring logic not only on heuristic rules but also on naive Bayes classification and logistic regression. Supervised and unsupervised ML are frequently used for detection and detection validation. Generative AI augments these capabilities but does not necessarily replicate them.
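To make that kind of established ML concrete, here is a minimal Python sketch of authentication risk scoring that combines a hard heuristic rule with a logistic regression score. Everything specific in it is a hypothetical assumption for illustration: the `LoginAttempt` features, the hand-picked weights and bias, and the 0.5 step-up threshold. A real product would fit its weights to labeled login data, and this sketch does not cover the naive Bayes variant.

```python
import math
from dataclasses import dataclass


@dataclass
class LoginAttempt:
    """Hypothetical features an adaptive-authentication engine might collect."""
    new_device: bool
    impossible_travel: bool
    failed_attempts_last_hour: int
    off_hours: bool


# Hypothetical, hand-picked weights for illustration only;
# a real product would fit these to labeled login data.
WEIGHTS = {
    "new_device": 1.2,
    "failed_attempts_last_hour": 0.4,
    "off_hours": 0.6,
}
BIAS = -3.0
STEP_UP_THRESHOLD = 0.5  # hypothetical policy threshold


def heuristic_rule_fires(attempt: LoginAttempt) -> bool:
    """Heuristic rule: impossible travel always triggers step-up, regardless of the model."""
    return attempt.impossible_travel


def logistic_risk(attempt: LoginAttempt) -> float:
    """Logistic regression: sigmoid of a weighted sum of features, giving a score in (0, 1)."""
    z = (
        BIAS
        + WEIGHTS["new_device"] * attempt.new_device
        + WEIGHTS["failed_attempts_last_hour"] * attempt.failed_attempts_last_hour
        + WEIGHTS["off_hours"] * attempt.off_hours
    )
    return 1.0 / (1.0 + math.exp(-z))


def decide(attempt: LoginAttempt) -> str:
    """Combine the heuristic rule with the ML score into an allow/step-up decision."""
    if heuristic_rule_fires(attempt):
        return "step_up"
    return "step_up" if logistic_risk(attempt) >= STEP_UP_THRESHOLD else "allow"


if __name__ == "__main__":
    benign = LoginAttempt(False, False, 0, False)
    risky = LoginAttempt(True, False, 4, True)
    print(decide(benign), round(logistic_risk(benign), 3))
    print(decide(risky), round(logistic_risk(risky), 3))
```

A model like this is a single weighted sum and sigmoid per login, so it is cheap to evaluate on every authentication event and easy to audit, which is the kind of workload the cost and compute argument below has in mind.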

Given what generative AI is designed to do (predict the next step in a web of potentially related steps) and current constraints around cost and compute power, generative AI will not, and should not, replace every instance of ML in existing products.

Forrester clients can schedule a guidance session or inquiry to discuss the use of generative AI in cybersecurity tools and SecOps.

Meet Us At Security & Risk Forum 2023

Want even more information on generative AI and security? Check out the agenda for our upcoming Security & Risk Forum, taking place November 14–15 in Washington, D.C.

Jeff Pollard and I have a keynote, “Adapt And Adopt: Balance The Acute Risk With The Burgeoning Reward Of AI,” where we’ll discuss the benefits of AI and how to manage the risk to take advantage of it. I’m also presenting a track session titled “Transform Your SOC Into A Detection And Response Engineering Practice.” There are also 25 sessions led by Forrester analysts who will be available for one-on-one meetings during the event.