- Best for advanced analytics and AI-driven insights: Databricks Data Intelligence Platform

- Best for AWS ecosystem integrations: AWS Glue

- Best for real-time data processing and Google Cloud integrations: Google Cloud Dataflow

- Best for seamless Azure integrations: Azure Data Factory

- Best for workflow automation across diverse apps and databases: Workato

- Best for complex data integrations across various environments: MuleSoft Anypoint Platform

- Best for extensive connectivity and AI-powered optimizations: Informatica Cloud Data Integration

- Best for predictable pricing: SnapLogic Intelligent Integration Platform

Extract, transform and load (ETL) tools are used to migrate data from disparate sources, preprocess the data and load it into a target system or data warehouse. The process often offers users better querying and analytics, including visualization, and better decision-making capabilities as a result.

In this article, we’ll discuss the best ETL tools and software of 2024 and help you determine which one is the best fit for your business.

What is ETL?

ETL, or extract, transform and load, is a fundamental process in data integration and warehousing. It involves extracting data from various sources, transforming it into a consistent format, and then loading it into a target database or data warehouse for analysis and reporting. ETL plays a crucial role in ensuring data quality, consistency, and accessibility for businesses, enabling them to make informed decisions based on reliable insights derived from disparate data sources.
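
The three stages described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any of the reviewed tools work internally; the sample data, the `orders` table and the function names are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from files, APIs or databases.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""

def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, cast amounts and drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        clean.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

# An in-memory SQLite database stands in for the data warehouse.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(result)
```

The row with an unparseable amount is dropped during the transform step, which is the kind of data quality rule an ETL tool would let you configure rather than hand-code.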

What are ETL tools?

ETL tools facilitate the extraction of data from multiple sources, such as databases, files, and applications, transforming it according to predefined rules or transformations, and loading it into a target destination, such as a data warehouse or database. ETL tools often provide a graphical interface for designing and managing data workflows, allowing users to easily configure data extraction, transformation, and loading tasks without the need for extensive programming knowledge. With features like scheduling, monitoring, and error handling, ETL tools help organizations efficiently integrate and manage their data.

Top ETL tools and software comparison

To help you decide which ETL tool best suits your needs, we have compiled a comparison table covering key aspects of each product: serverless architecture, AI-powered recommendations during data transformation, visual mapping, free trial or credit availability and pricing model.

Below, we have reviewed eight top ETL tools and software options and highlighted their best use cases, key features, pros, cons and pricing.

| Software | Serverless architecture | AI-powered recommendations | Visual mapping | Free trial or credits | Pricing |
|---|---|---|---|---|---|
| Databricks Data Intelligence | Yes | Yes | Yes | Free 14-day trial | Pay-as-you-go |
| AWS Glue | Yes | No | Yes | Free tier for first million accesses/objects | Pay-as-you-go |
| Google Cloud Dataflow | Yes | Yes | Yes | $300 free credits for new customers | Pay-as-you-go |
| Azure Data Factory | Yes | No | Yes | $200 free credit for 30 days | Pay-as-you-go |
| Workato | Yes | No | Yes | Free demo available | Custom price |
| MuleSoft Anypoint Platform | No | Yes | Yes | 30-day free trial | Based on usage and vCores |
| Informatica Cloud Data Integration | Yes | Yes | Yes | 30-day trial | Consumption-based |
| SnapLogic Intelligent Integration | No | Yes | Yes | | Custom price |

Databricks Data Intelligence Platform: Best for advanced analytics and AI-driven insights

Databricks Data Intelligence Platform offers users powerful yet user-friendly, fast, efficient and scalable ETL solutions. It’s powered by a data intelligence engine that sits on top of a data lakehouse, which serves as the unified foundation for all data and governance. Thus, it understands the unique qualities of client data.

A natural language interface lets users query data with little or no code, and it can also help them write code, troubleshoot problems and find answers. The platform also comes with a drag-and-drop interface to help developers build models and a host of collaboration features.

Pricing

Databricks offers a pay-as-you-go pricing model with no upfront costs. Pricing is calculated based on the number of Databricks Units (DBUs) consumed; a DBU is a normalized unit of processing power on the platform:

- Workflows & Streaming Jobs: Starts at $0.07 per DBU.

- Delta Live Tables: Starts at $0.20 per DBU.

- Databricks SQL: Starts at $0.22 per DBU.

- All Purpose Compute for Interactive Workloads: Starts at $0.40 per DBU.

- Serverless Real-time Inference: Starts at $0.07 per DBU.

Interested buyers should inquire about Databricks’ discounts for usage commitment and 14-day free trial.
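
To make the DBU model concrete, here is a rough sketch of how a lower-bound estimate could be calculated from the starting rates listed above. The usage figures and function name are hypothetical, and real rates vary by plan, cloud provider and region, so treat the result as a floor rather than a quote.

```python
from decimal import Decimal

# Starting per-DBU rates quoted in this article; actual rates may be higher.
STARTING_RATE_PER_DBU = {
    "workflows_streaming_jobs": Decimal("0.07"),
    "delta_live_tables": Decimal("0.20"),
    "databricks_sql": Decimal("0.22"),
    "all_purpose_compute": Decimal("0.40"),
    "serverless_realtime_inference": Decimal("0.07"),
}

def estimate_cost(usage_dbus):
    """Return a lower-bound cost for a mapping of product -> DBUs consumed."""
    return sum(
        (STARTING_RATE_PER_DBU[product] * Decimal(dbus)
         for product, dbus in usage_dbus.items()),
        Decimal("0"),
    )

# Hypothetical monthly usage, for illustration only.
monthly_usage = {"workflows_streaming_jobs": 1000, "databricks_sql": 500}
estimate = estimate_cost(monthly_usage)
print(f"Estimated minimum: ${estimate:.2f}")
```

With these assumed figures, 1,000 DBUs of jobs at $0.07 plus 500 DBUs of SQL at $0.22 comes to $180.00.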

Features

- Data intelligence engine: The platform is powered by a data intelligence engine built on a company’s data lakehouse and combined with generative AI, allowing it to understand the unique semantics of the organization’s data.

- Natural language interface: Using a natural language interface makes queries as simple as asking a coworker a question.

- Drag-and-drop interface: Developers can easily drag and drop pieces of code and algorithms when building transformation models.

- Collaboration tools: The platform emphasizes collaboration as a key design factor. This is evident in features such as shared notebooks, collaborative exploration and unified governance.

- Unified interface: Databricks comes with a unified interface and tools for several data tasks, including data processing, generating dashboards and visualizations, scheduling, security, governance, availability and disaster recovery.

Pros

- Flexible: works with any data source and is available in multiple languages.

- Highly scalable and reliable.

- Natural language interface makes querying easy.

- Generative AI optimizes and manages infrastructure uniquely for your organization.

- Supports various data types, enabling improved data handling and management.

Cons

- Some users suggest that Databricks could enhance the ease of its deployment, administration and maintenance.

Why did we choose Databricks Data Intelligence Platform?

The Databricks Data Intelligence Platform stands out as one of our top choices for advanced analytics and AI-driven insights due to its core integration with a data intelligence engine and generative AI. The generative AI significantly simplifies data analysis, allowing users to quickly access valuable insights without having to work with complex code.

Then there is its unparalleled ease of use. The platform’s natural language interface transforms data querying into a straightforward task — like casually asking a colleague. We were also impressed by the drag-and-drop interface that caters exceptionally well to developers and the collaboration tools that promote a cohesive work environment. Feedback from users and experts alike further solidifies Databricks’ position as a leading ETL tool.

AWS Glue: Best for AWS ecosystem integrations

You are probably wondering why AWS chose to use the word “glue” to name this ETL tool. Well, it is metaphoric in the sense of gluing things together — in this case, connecting and integrating disparate data sources.

AWS Glue brings together various data stores like databases and S3 buckets, different data formats and data processing and analysis tools. Glue connotes simplicity and effectiveness, which is exactly what AWS Glue offers users. It seamlessly binds together different elements of a data pipeline, providing a flexible ETL solution that makes it unique compared to other ETL tools.

Pricing

The pricing model is pay-as-you-go and is free for the first million accesses and objects stored, then billed monthly based upon usage thereafter. Below is an example of pricing based on the usage of various services:

- ETL Jobs and Interactive Sessions: Hourly rate, billed by the second, for ETL jobs and interactive sessions. Usage is measured in data processing units (DPUs). For example, an AWS Glue Apache Spark job that runs for 15 minutes and uses 6 DPUs consumes 1.5 DPU-hours (6 DPUs × 0.25 hours); at $0.44 per DPU-hour, the job costs $0.66.

- AWS Glue DataBrew: The cost for each 30-minute interactive session is $1.00.

- Data Catalog: The first million objects stored are free, and the first million accesses are free.

- Crawlers: You pay an hourly rate, billed by the second.

Pricing can also vary based on the AWS server region.
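
The per-second billing for ETL jobs can be checked with simple arithmetic. The sketch below assumes the $0.44 per DPU-hour rate quoted above and ignores any minimum billing duration or regional price difference that may apply; the function name is ours, not part of any AWS SDK.

```python
def glue_job_cost(dpus, runtime_seconds, price_per_dpu_hour=0.44):
    """Return (DPU-hours consumed, cost in USD) for a single job run."""
    dpu_hours = dpus * runtime_seconds / 3600
    return dpu_hours, round(dpu_hours * price_per_dpu_hour, 2)

# The example from the pricing list: 6 DPUs running for 15 minutes.
dpu_hours, cost = glue_job_cost(dpus=6, runtime_seconds=15 * 60)
print(f"{dpu_hours} DPU-hours, ${cost:.2f}")
```

A 15-minute run on 6 DPUs works out to 1.5 DPU-hours, or $0.66 at the assumed rate.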

Features

- Serverless: AWS Glue is serverless, so there is no infrastructure to manage.

- Data Catalog: The Data Catalog is a centralized metadata repository that provides a unified view of your data sources.

- Crawlers: Users can set up crawlers to connect to data sources.

- Continuous ingestion pipelines: It is simple and easy to set up continuous ingestion pipelines for preparing streaming data using the streaming ETL function.

- ETL jobs and interactive sessions: Automatically generates code to perform your ETL after specification of the location or path where the data is stored.

Pros

- Users, including first-time users, have reported it’s easy to use.

- Supports all workloads, including ETL, extract-load-transform, batch, streaming and more.

- Scales on demand to handle any data size.

- Users can discover and connect to over 70 diverse data sources.

Cons

- Natively supports only two programming languages, Python and Scala.

- Limited customization and control.

Why did we choose AWS Glue?

AWS Glue is best for AWS ecosystem integrations because it is designed to work with AWS services such as S3 buckets and databases. The metaphorical naming isn’t just clever branding but an accurate representation of this ETL tool’s capability to seamlessly connect and integrate various data stores, formats and processing tools within the AWS landscape. The other key factor is its serverless architecture, which removes the burden of managing infrastructure.

User feedback also underscores its simplicity of use, even for first-time users, and its robust support for various workloads, including ETL, ELT, batch and streaming. It is also scalable and handles any type of workload well, and the free pricing tier for the first million accesses and objects stored is definitely an inviting feature, allowing organizations to explore its capabilities without immediate cost.

Google Cloud Dataflow: Best for real-time data processing and Google Cloud integrations

Dataflow, a leading ETL tool based on user reviews, is designed for both stream and batch data management. Its serverless architecture makes it a fast, cost-effective solution that empowers users with real-time insights and machine-learning capabilities.

With $300 in free credits for new customers, Dataflow stands out for its simplicity in operations, automated resource management and the ability to scale resources efficiently. It is well-integrated with other Google services and uses the Apache Beam open-source technology to orchestrate the data pipelines that are used in Dataflow’s ETL operations.

Pricing

Pricing is based on a pay-as-you-go model. The vendor offers $300 in free credits to new customers to try out the service.

Features

- Ready-to-use real-time AI: Dataflow comes with an out-of-the-box machine learning feature that includes NVIDIA GPU and ready-to-use patterns.

- Resource autoscaling: Horizontal and vertical autoscaling to maximize resource utilization.

- Monitoring and observability: Users can observe, diagnose and troubleshoot every step in the Dataflow pipeline.

- Serverless architecture: No need to worry about underlying infrastructure.

- Integration: Deep integration with Google Cloud services.

- Dataflow templates: Users can easily share their pipelines with team members.

Pros

- Dataflow cost-effectively simplifies the data integration process.

- Comes with an intuitive interface that is easy to navigate, making it accessible for users of all skill levels.

- Dataflow can be used in conjunction with other Google Cloud Services to query data from various sources, like AWS and Azure.

Cons

- Some users have reported finding difficulties predicting the cost.

- The reliance on Apache Beam and Google Cloud-specific technologies has the potential to create vendor lock-in.

Why did we choose Google Cloud Dataflow?

Google Cloud Dataflow stands out primarily due to its blend of streaming and batch data management capabilities. We also recommend this ETL tool for its serverless architecture, which not only offers a cost-effective and efficient solution but also empowers users with real-time insights and machine learning capabilities. And $300 in free credits for new customers is a great way to try it out without any upfront costs.

Azure Data Factory: Best for seamless Azure integrations

Azure Data Factory is a fully managed, serverless data integration service that allows users to visually integrate data sources. It comes with over 90 built-in connectors. Users can construct ETL processes code-free in an intuitive interface or write their own code. It stands out due to its unique ability to seamlessly integrate with Azure Synapse Analytics, allowing users to parse data and extract business insights.

Pricing

Pricing is based on a pay-as-you-go model, with a $200 free credit for users to use within 30 days.

Features

- Data orchestration: Azure Data Factory handles hybrid ETL, ELT and data integration tasks.

- User-centered design: Azure Data Factory emphasizes usability with designers able to create workflows visually.

- Transformation: Use visual data flows or compute services, such as Azure HDInsight and Azure Databricks, to transform data.

- 90 built-in connectors: Acquire data from numerous big data sources.

- Intuitive navigation: The interface provides clear pathways for creating pipelines, datasets and activities.

Pros

- Known for its user-friendly interface, it is suitable for both beginners and experts alike.

- Provides data transformation features, allowing you to cleanse and reformat extracted data to the needed target format.

- Capable of scaling processing power up or down based on ETL volume.

- Organizations looking to modernize SQL Server Integration Services will find it easy to move SSIS packages to the cloud.

- Excellent integration capabilities.

Cons

- Has a steep learning curve, especially for users who are new to the Azure ecosystem.

- Some users have found this ETL tool to be expensive, especially for large-scale data operations.

Why did we choose Azure Data Factory?

We selected Azure Data Factory as one of the best ETL tools for 2024 because of its pivotal role in the data integration landscape. This fully managed, serverless data integration service is designed to facilitate seamless data source integration through a visually intuitive interface or custom code, catering to a wide range of user expertise.

With its offering of over 90 built-in connectors, it simplifies the process of constructing ETL workflows, making it an invaluable asset for businesses seeking to leverage data for insightful analytics.

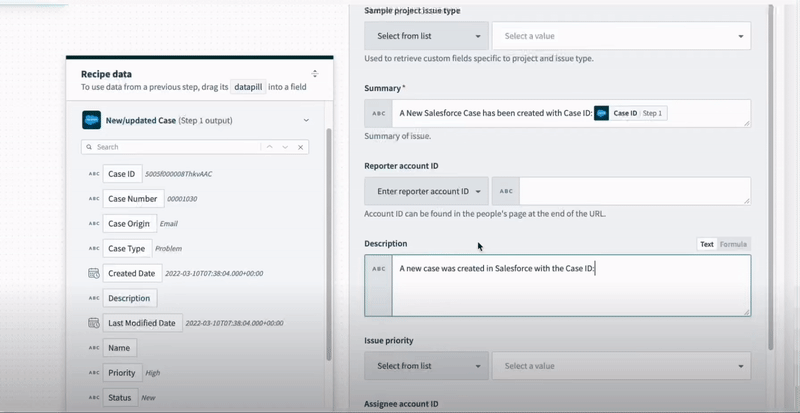

Workato: Best for workflow automation across diverse apps and databases

Workato sets itself apart by using its ETL functionality to automate data workflows and smoothly transfer data between various applications and databases. Users can extract data by connecting to multiple heterogeneous sources, such as databases, files, APIs, cloud applications, spreadsheets, web services and more using over 1,000 prebuilt connectors.

A wide range of tools is available to transform this data before it is loaded into the target system. It also comes with a reverse ETL feature that allows data to be pushed back to the source systems, which ensures valuable insights make it back to operational systems.
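
For readers new to the idea, reverse ETL can be pictured as the loop below: an insight is computed in the warehouse and then written back to an operational system. This is a generic sketch using in-memory SQLite databases as stand-ins, not Workato's recipe syntax or API; the table and column names are hypothetical.

```python
import sqlite3

# Two in-memory databases stand in for the warehouse and an operational CRM.
warehouse = sqlite3.connect(":memory:")
crm = sqlite3.connect(":memory:")

warehouse.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
warehouse.executemany(
    "INSERT INTO orders VALUES (?, ?)", [(1, 120.0), (1, 80.0), (2, 40.0)]
)

crm.execute(
    "CREATE TABLE contacts "
    "(customer_id INTEGER PRIMARY KEY, name TEXT, lifetime_value REAL)"
)
crm.executemany("INSERT INTO contacts VALUES (?, ?, NULL)", [(1, "Alice"), (2, "Bob")])

# Insight computed in the warehouse: total spend per customer.
insights = warehouse.execute(
    "SELECT customer_id, SUM(amount) FROM orders GROUP BY customer_id"
).fetchall()

# Reverse ETL step: push the computed values back into the operational system.
crm.executemany(
    "UPDATE contacts SET lifetime_value = ? WHERE customer_id = ?",
    [(total, customer_id) for customer_id, total in insights],
)
crm.commit()

synced = crm.execute(
    "SELECT name, lifetime_value FROM contacts ORDER BY customer_id"
).fetchall()
print(synced)
```

After the sync, sales or support staff working in the CRM see each customer's lifetime value without having to query the warehouse themselves.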

Pricing

Workato does not provide pricing information on its website but offers a custom estimate upon request. However, users can sign up for a free demo to try out the platform.

Features

- Low-code, no-code interface: Workato provides a user-friendly interface that requires low or no coding in some cases, making it accessible to users of all technical skill levels.

- Prebuilt connectors: Comes with over 1,000 prebuilt connectors, allowing for seamless integration across various applications.

- Real-time data synchronization: Supports real-time data synchronization, ensuring that your data is always up to date.

- Error handling and monitoring: Includes robust error handling and monitoring features to help maintain the health and performance of integrations.

- Reverse ETL: Data insights can be pushed back into operational systems.

- Drag-and-drop recipe builder: This feature simplifies the process of creating automation workflows.

Pros

- An intuitive and easy-to-use interface.

- Workato utilizes machine learning and patented technology to speed up the creation and implementation of automation.

- Flexible and supports various data sources and destinations.

- Scalable and handles big data sets well.

Cons

- Some users have mentioned that Workato can be expensive, especially considering the number of tasks that can be performed in a day.

- Some level of technical expertise might be required for creating advanced automation.

Why did we choose Workato?

We included Workato as a top ETL tool primarily due to its powerful workflow automation features, which its low-code, no-code interface makes accessible to users of all technical skill levels. This approach, coupled with over 1,000 prebuilt connectors, allows organizations to easily connect to heterogeneous data sources.

The drag-and-drop recipe builder further simplifies the creation of complex automation workflows, enabling both technical and nontechnical users to design and implement data processes without deep coding knowledge.

Real-time data synchronization is another feature we loved because it ensures that data across systems is consistently up to date. Finally, Workato’s innovative reverse ETL functionality allows insights to flow back into operational systems, enhancing the value and applicability of data across the organization.

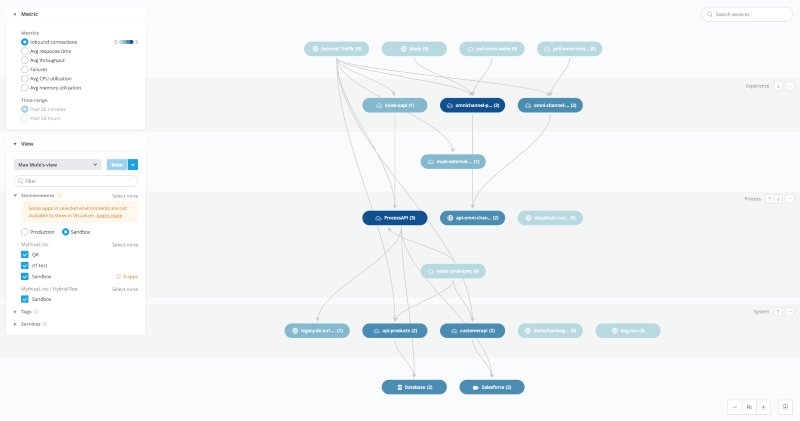

MuleSoft Anypoint Platform: Best for complex data integrations across various environments

MuleSoft Anypoint Platform is a powerful ETL tool with a robust set of features. It has a library of hundreds of connectors to connect various data sources, so you don’t have to rely on complex coding to extract data. What makes it stand out from other ETL tools is its DataWeave module.

A key component of the transformation process, the DataWeave module provides a user-friendly graphical interface for data mapping, allowing visual drag-and-drop operations to transform data. Transformations support various data formats, such as XML, JSON, CSV and flat files, which offers users a great deal of flexibility. With MuleSoft, extracted data is temporarily stored in a staging area.
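
To show what a field-level mapping between formats involves, here is a small Python sketch that reshapes CSV records into JSON. It is not DataWeave syntax and does not use any MuleSoft API; the field names and the `MAPPING` structure are hypothetical and only mirror the idea of mapping source fields to target fields.

```python
import csv
import io
import json

CSV_INPUT = "id,first_name,last_name\n1,Ada,Lovelace\n2,Alan,Turing\n"

# Field mapping: target field -> function of the source record.
MAPPING = {
    "customerId": lambda r: int(r["id"]),
    "fullName": lambda r: f'{r["first_name"]} {r["last_name"]}',
}

def map_records(csv_text, mapping):
    """Apply the mapping to every CSV row and return JSON-ready dictionaries."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [{target: fn(row) for target, fn in mapping.items()} for row in rows]

records = map_records(CSV_INPUT, MAPPING)
print(json.dumps(records, indent=2))
```

A visual mapper produces the equivalent of `MAPPING` when you drag a source field onto a target field, which is why it can remove much of the hand-written transformation code.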

Pricing

Pricing is based on volume usage and vCores — the primary unit of measure for Anypoint Platform costs. A vCore is roughly equivalent to a virtual CPU core. Users can sign up for a 30-day free trial that gives them access to the full suite of Anypoint platform features, including the ETL components and DataWeave.

Features

- DataWeave: Powerful data transformation language with a graphical mapping interface.

- Vast connector library: Hundreds of pre-built connectors for various databases, applications, cloud services and file formats.

- Flexibility: Supports a wide range of data formats, such as XML, JSON and CSV.

- Scalability: Handles large volumes of data and complex transformations.

- Visual mapping: Intuitive drag-and-drop environment for developing ETL processes.

Pros

- A user-friendly interface simplifies ETL development.

- Seamless integration with various data sources.

- Low-code environment due to visual tools.

- Backed by a robust enterprise platform.

Cons

- Complex pricing system.

- Some users might find the full platform overwhelming if their needs are basic.

Why did we choose MuleSoft Anypoint Platform?

While the platform pricing system can be complex, we chose it as a top ETL tool for complex data integrations across varied environments due to its comprehensive approach to data connectivity.

With its vast library of connectors and the innovative DataWeave module, MuleSoft integrates and transforms data regardless of format or source. The visual drag-and-drop operation capability not only enhances the transformation process but also supports various data formats, offering good flexibility to users.

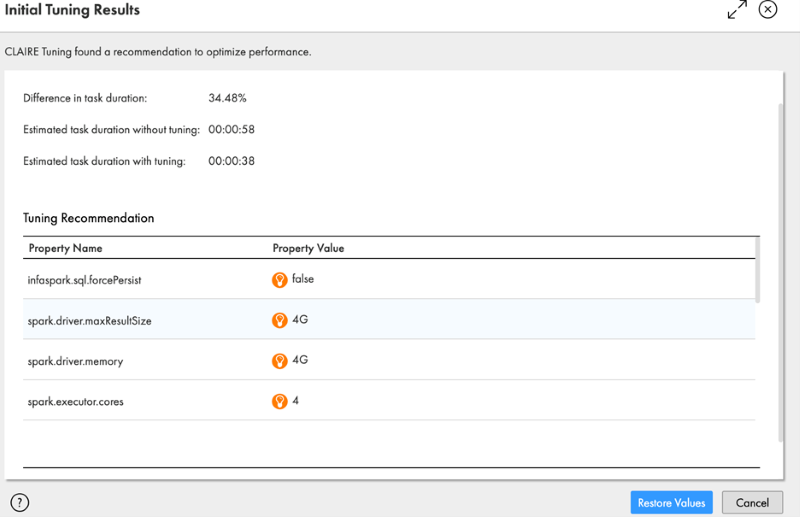

Informatica Cloud Data Integration: Best for extensive connectivity and AI-powered optimizations

Informatica Cloud Data Integration boasts extensive connectivity to an array of data sources, including cloud applications, on-premises applications and databases, big data platforms, flat files, social media and web data. It provides a rich set of transformations to prepare and manipulate data.

The platform also features user-friendly wizards and a drag-and-drop Mapping Designer, simplifying the development of data integration flows for both technical and less technical users. ETL jobs are scheduled at specified intervals or based on triggers. The platform stands out due to its AI-powered recommendation module — known as CLAIRE — which provides intelligent suggestions and recommendations throughout the ETL process, improving efficiency and performance.

Pricing

The vendor uses a consumption-based pricing model. Informatica Processing Units are the fundamental unit of measure. The number of IPUs consumed is based on the compute resources used during data processing tasks. The vendor also offers a 30-day trial that allows potential customers to explore the platform’s capabilities before committing to a subscription.

Features

- Over 400 prebuilt connectors: Connects to a vast array of cloud and on-premises data sources.

- Mapping Designer: User-friendly drag-and-drop visual interface for building data integration mappings.

- Wide range of transformations: Offers built-in support for common and complex data transformations.

- Serverless and cloud-native execution: Flexible processing options based on workload.

- Scheduling and monitoring: Tools for job scheduling, real-time monitoring and troubleshooting.

- AI-powered recommendations (CLAIRE): Provides intelligent suggestions to optimize data flows.

Pros

- Scalable, pay-as-you-go consumption model.

- User-friendly wizards and visual tools.

- Options to optimize execution for different data workloads.

- Covers extensive ETL requirements out of the box.

- Access to resources, knowledge base and an active user community.

Cons

- Consumption-based pricing can be less predictable for variable workloads.

- Some level of vendor lock-in exists.

Why did we choose Informatica Cloud Data Integration?

Our decision is rooted in Informatica’s AI module CLAIRE and its robust connectivity, featuring over 400 prebuilt connectors for practically any data source. This extensive connectivity and AI-powered optimizations make it best for enterprises looking to harness the full potential of their data. The intelligent suggestions dramatically streamline the ETL process.

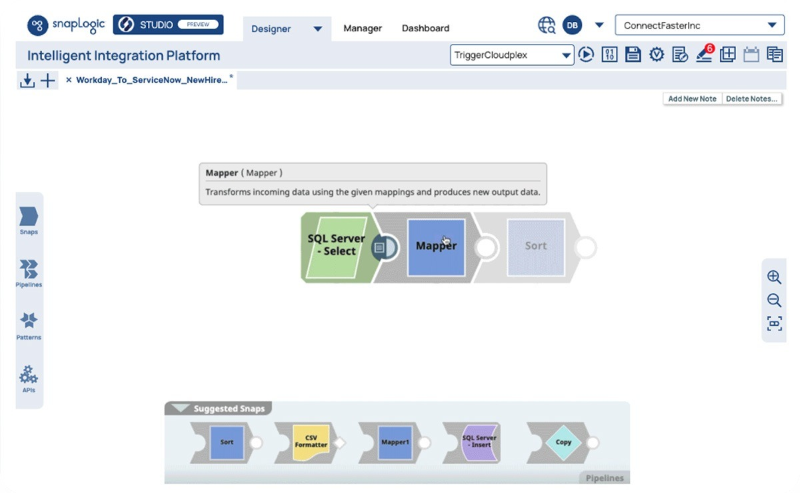

SnapLogic Intelligent Integration Platform: Best for predictable pricing

SnapLogic uses a massive library of over 700 prebuilt connectors known as Snaps to extract data from various sources, such as cloud applications, on-premises databases, file formats, big data platforms and IoT devices and protocols. Once extracted, the data is transformed using a visual drag-and-drop interface.

The platform seamlessly sends data to its final destination, which could be a cloud data warehouse, database, application or analytics platform. Processing can be done either in real time or through traditional batch processing. An AI-powered module known as Iris makes recommendations during transformations. However, the main feature that makes this tool stand out from other ETL tools is its predictable pricing model. We discuss pricing in more detail below.

Pricing

SnapLogic emphasizes upfront predictable pricing. Pricing is based on the size and configuration of the package, including the particular Snaps added to the configuration. Some key aspects of pricing include:

- Unlimited data: Regardless of whether you initially load 1 GB of data or 100 TB of data, the package pricing remains the same. However, extremely high-throughput use cases might lead to customized pricing discussions.

- Core Snaps: These are free of charge, part of the core system and are included with every purchase.

- Premium Snaps: Certain Snap Packs are charged. These are referred to as premium Snaps and are available in two levels — Tier One (+$45K USD) and Tier Two (+$15K USD).

- Configuration-based pricing: Packages are highly configurable and an extra fee is incurred where optional extras are added to a package.
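
As a rough illustration of how the premium tiers affect a quote, the sketch below totals the surcharges listed above. It assumes each surcharge applies once per premium Snap Pack, which the pricing description does not confirm, and it leaves out the base package price because no figure is given. Data volume is accepted but deliberately unused, mirroring the unlimited-data point.

```python
# Surcharges quoted in this article, in USD; assumed to apply per premium Snap Pack.
PREMIUM_SURCHARGE = {"tier_one": 45_000, "tier_two": 15_000}

def premium_surcharge_total(premium_packs, data_volume_gb=0):
    """Total premium surcharge for a package; data_volume_gb does not affect the result."""
    return sum(PREMIUM_SURCHARGE[tier] for tier in premium_packs)

# Hypothetical package with one Snap Pack from each tier, at two very different volumes.
small = premium_surcharge_total(["tier_one", "tier_two"], data_volume_gb=1)
large = premium_surcharge_total(["tier_one", "tier_two"], data_volume_gb=100_000)
print(small, large)
```

Under these assumptions, both the 1 GB and the 100 TB scenario carry the same $60,000 in premium surcharges on top of the base package.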

Features

- Extensive Snap library: Prebuilt connectors for a vast array of apps, databases and file formats.

- Flexible deployment options: Cloud-based or on-premises installations for security and agility.

- Iris AI assistance: Smart recommendations for data transformations and pipeline building.

- Reverse ETL: Send transformed data back to your applications to benefit from the insights.

- Visual ETL design: Intuitive interface for building complex data pipelines without extensive coding.

Pros

- User-friendly, low-code or no-code approach.

- Handle large datasets and real-time needs.

- Easily connect to a wide range of systems.

- Robust security, monitoring tools and access controls.

- Upfront predictable pricing.

Cons

- For very simple use cases, the initial learning curve can be steeper than with more basic tools.

Why did we choose SnapLogic Intelligent Integration Platform?

Our inclusion of SnapLogic in this list is chiefly driven by its upfront, predictable pricing model, which provides businesses with clear, transparent cost expectations right from the outset. Pricing accommodates various needs with tiered pricing for premium Snaps and a generous offering of core Snaps at no additional cost.

How do I choose the best ETL Tool for my business?

While every organization has unique requirements, there are essential features that are universally beneficial:

- A visual interface makes data transformations quicker and more intuitive, which helps teams work faster.

- A wide range of prebuilt connectors will also help teams extract data from sources, especially if data is stored in many disparate systems.

- AI-powered recommendations are valuable when performing transformations because they quickly identify data patterns and suggest how to optimally handle diverse data formats.

- For businesses that need data transformed near-instantly and fed back into source systems, consider an ETL tool with real-time processing and reverse ETL capabilities.

- Serverless architecture goes a long way in ensuring data teams are not worrying about infrastructure management but focusing on core business activities.
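
One simple way to apply these criteria is to filter the comparison table by the features you cannot do without. The sketch below encodes the Yes/No columns from our comparison table; the `Tool` class and `shortlist` function are hypothetical helpers, and a real evaluation would also weigh pricing, connectors and existing cloud commitments.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tool:
    name: str
    serverless: bool
    ai_recommendations: bool
    visual_mapping: bool

# Feature flags taken from the comparison table in this article.
TOOLS = [
    Tool("Databricks Data Intelligence Platform", True, True, True),
    Tool("AWS Glue", True, False, True),
    Tool("Google Cloud Dataflow", True, True, True),
    Tool("Azure Data Factory", True, False, True),
    Tool("Workato", True, False, True),
    Tool("MuleSoft Anypoint Platform", False, True, True),
    Tool("Informatica Cloud Data Integration", True, True, True),
    Tool("SnapLogic Intelligent Integration Platform", False, True, True),
]

def shortlist(tools, **required):
    """Keep the tools whose feature flags match every required value."""
    return [
        tool.name
        for tool in tools
        if all(getattr(tool, feature) == value for feature, value in required.items())
    ]

picks = shortlist(TOOLS, serverless=True, ai_recommendations=True)
print(picks)
```

Requiring both a serverless architecture and AI-powered recommendations narrows the list to three candidates, which can then be compared on price and ecosystem fit.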

Review methodology

To review the best ETL tools of 2024, we conducted extensive research into current market offerings to narrow our list to these eight. We considered factors such as user experience, architecture, AI capabilities, visual mapping, scalability, flexibility and ease of use. We also analyzed pricing information to ensure the chosen software solutions provide value to businesses of all sizes.

Our primary sources were the vendor websites where we obtained product information and documentation. Where available, we signed up for free trials and demos to test the products. For customer sentiment, we reviewed dozens of verified customer reviews at reputable software review sites online.