According to a recent report by cloud security firm Wiz, Microsoft's AI research division inadvertently exposed 38 terabytes of sensitive data through a misconfigured Shared Access Signature (SAS) token.

The incident, which began in July 2020 and remained undetected for almost three years, originated from Microsoft's attempt to share open-source code and AI models for image recognition via a GitHub repository.

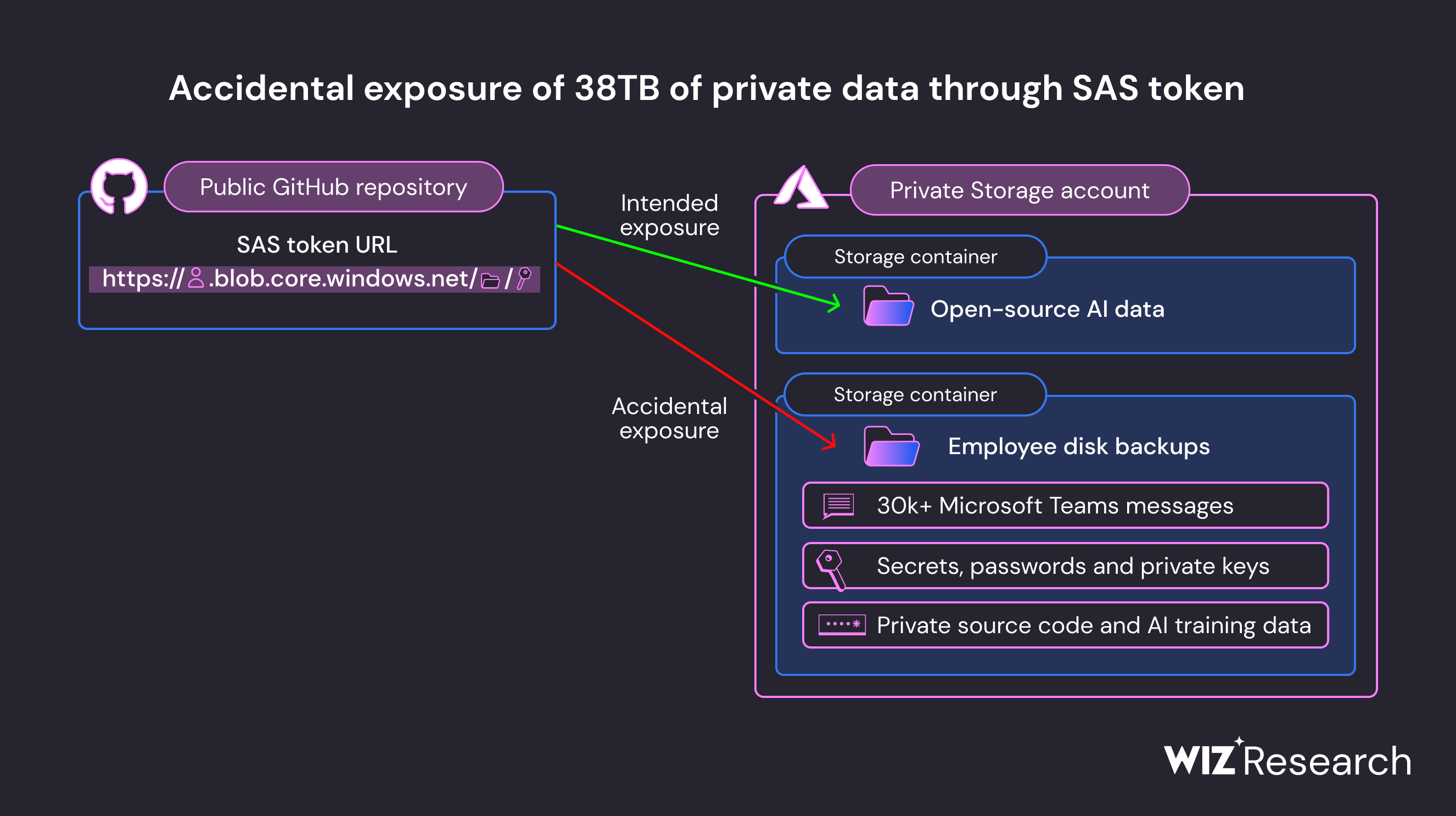

Users were directed to download the models from an Azure Storage URL. The SAS token in that URL was misconfigured: rather than limiting access to the intended files, it granted access to the entire storage account, exposing a large volume of additional private data.

Among the exposed data were personal backups of Microsoft employees' computers, passwords for Microsoft services, secret keys, and more than 30,000 internal Microsoft Teams messages from hundreds of employees.

Wiz security researchers included the graphic above in their report to visualize the incident.

Mohit Tiwari, Co-Founder and CEO of Symmetry Systems, discussed with SecureWorld News the broader issues this incident highlights in cloud security and data protection:

"Data is meant to be shared, but sharing data securely on the cloud today is like driving a car with a tiller. Fast and close to the edge of a cliff.

The key takeaway is that organizations have to understand what data you have, who can access it, and how it is being accessed. What Wiz has identified is not a cloud posture problem—this is a data inventory and access problem."

After being alerted by Wiz, Microsoft revoked the SAS token, blocking external access to the storage account. Its subsequent investigation found that no customer data was exposed and no other internal services were put at risk.

Microsoft also expanded GitHub's secret scanning service to proactively identify risky SAS tokens, aiming to prevent similar incidents.
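To give a sense of what scanning for SAS tokens can involve, here is a minimal Python sketch. It is not GitHub's or Microsoft's actual detection logic; the function name `find_sas_urls` and the heuristic (a URL whose query string carries both the `sv` and `sig` fields that SAS tokens include) are assumptions made for illustration, and the sample URL is invented.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Matches https URLs up to the next whitespace, quote, or angle bracket.
URL_PATTERN = re.compile(r"https://[^\s\"'<>]+")


def find_sas_urls(text):
    """Return URLs in `text` whose query string looks like an Azure SAS token.

    Hypothetical heuristic: a SAS token carries a signed version ("sv")
    and a signature ("sig"), so a URL with both is flagged for review.
    """
    hits = []
    for url in URL_PATTERN.findall(text):
        params = parse_qs(urlsplit(url).query)
        if "sv" in params and "sig" in params:
            hits.append(url)
    return hits


if __name__ == "__main__":
    # Invented README snippet; the account name and signature are fake.
    readme = (
        "Download the models from "
        "https://example.blob.core.windows.net/models?sv=2020-08-04&ss=b"
        "&srt=sco&sp=rwdlac&se=2051-10-06T00:00:00Z&sig=FAKE "
        "and read the docs at https://example.com/readme?lang=en"
    )
    for url in find_sas_urls(readme):
        print("possible SAS token:", url)
```

A production scanner would also need to handle tokens that appear without a full URL and to keep false positives low, which this sketch does not attempt.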

Patrick Tiquet, Vice President of Security & Architecture at Keeper Security, emphasized the significance of strong security practices in relation to this incident:

"While AI can be a useful tool, organizations need to be aware of the potential risks associated with utilizing tools that leverage this relatively nascent technology.

The recent incident involving the exposure of AI training data through SAS tokens highlights the need to treat AI tools with the same level of caution and security diligence as any other tool or system that may be used to store and process sensitive data. With AI in particular, the amount of sensitive data being used and stored can be extremely large.

In some cases, organizations need assurances from AI providers that sensitive information will be kept isolated to their organization and not be used to cross-train AI products, potentially divulging sensitive information outside of the organization through AI collective knowledge.

The implementation of AI-powered cybersecurity tools requires a comprehensive strategy that also includes supplementary technologies to boost limitations as well as human expertise to provide a layered defense against the evolving threat landscape."

While this accidental exposure did not result in customer data breaches, it does serve as a reminder of the security challenges organizations face when dealing with vast amounts of data, especially in the context of AI development.

Careful management of SAS tokens, including narrowly scoped permissions, short expiration times, and monitoring of how tokens are used, is essential to safeguarding sensitive information.
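As an illustration of what such a check might look like, the Python sketch below inspects the query string of a SAS URL before it is shared. The function name `audit_sas_url`, the seven-day lifetime limit, and the sample URL are hypothetical; the field names it reads (`sp` for permissions, `se` for expiry, `srt` for the resource types of an account-level SAS) are the standard SAS query parameters.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit

# Permission letters beyond read ("r") and list ("l"): add, create, write, delete.
WRITE_LIKE = set("acwd")
# Hypothetical policy: tokens should expire within a week.
MAX_LIFETIME = timedelta(days=7)


def audit_sas_url(url, now=None):
    """Return a list of human-readable findings for a SAS URL (empty if none)."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlsplit(url).query)
    findings = []

    permissions = params.get("sp", [""])[0]
    risky = "".join(sorted(set(permissions) & WRITE_LIKE))
    if risky:
        findings.append(f"grants more than read/list permissions: {risky}")

    # "srt" appears on account-level SAS tokens, which are not tied
    # to a single container or blob.
    if "srt" in params:
        findings.append("account-level SAS, not scoped to one container or blob")

    expiry_raw = params.get("se", [""])[0]
    try:
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
    except ValueError:
        # Also reached when the expiry is defined elsewhere, not in the URL.
        findings.append("missing or unparseable expiry")
        return findings
    if expiry.tzinfo is None:
        expiry = expiry.replace(tzinfo=timezone.utc)
    if expiry - now > MAX_LIFETIME:
        findings.append(f"expires too far in the future: {expiry_raw}")
    return findings


if __name__ == "__main__":
    # Invented URL; the account name and signature are fake.
    sample = (
        "https://example.blob.core.windows.net/models?sv=2020-08-04&ss=b"
        "&srt=sco&sp=rwdlac&se=2051-10-06T00:00:00Z&sig=FAKE"
    )
    for finding in audit_sas_url(sample):
        print("-", finding)
```

A check like this only reads what the URL declares. It cannot tell whether a token has been revoked or how it is being used, so it complements monitoring of the storage account and does not replace it.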

Microsoft's response emphasizes the significance of promptly addressing incidents and implementing proactive security measures to prevent their recurrence.
