In brief: Russia is once again running online campaigns in an attempt to manipulate the presidential elections in the US, albeit at a slower pace than what we saw during previous elections. Microsoft writes that the country is mostly trying to undermine support for Ukraine and NATO among US audiences.

Russia's US election influence campaign has picked up the pace over the last two months, writes Microsoft, but the less contested primary season means it hasn't been as intense as in 2016 and 2020.

Russia's primary aims in the campaign are building support for its war in Ukraine through disinformation, reducing support for NATO, and causing infighting within the US. It relies on traditional and social media and a mix of covert and overt operations.

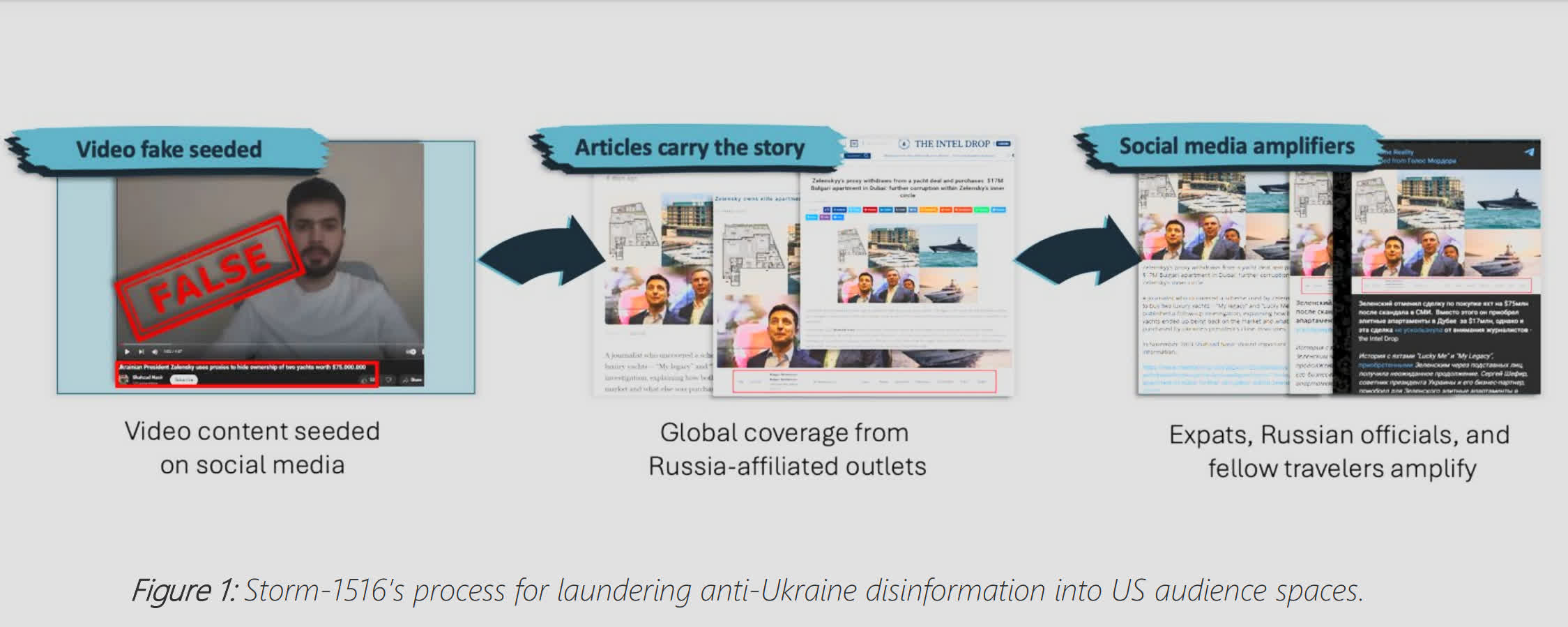

Microsoft writes that Russian-affiliated group Storm-1516 pushes an anti-Ukraine message by creating a video of someone presenting as a whistleblower or citizen journalist and spreading a pro-Russian narrative about the war. The video is then covered by a seemingly unaffiliated global network of covertly managed websites, including DC Weekly and The Intel Drop, before being amplified by Russian expats, officials, and fellow travellers. US audiences then repeat and repost the disinformation, likely unaware of its source.

Another group highlighted by Microsoft is Star Blizzard, aka Cold River, which focuses on Western think tanks. While its activity has shown no connection to the 2024 elections so far, its interest in US political figures may be the first in a series of hacking campaigns designed to drive Kremlin-favored outcomes heading into November.

There have been a lot of concerns over foreign adversaries using advanced AI to influence US elections. Microsoft said that it has not yet observed widespread use of deepfaked videos, but that AI-enhanced content and faked AI audio are likely to have more success.

Microsoft writes that AI-enhanced content is more influential than fully AI-generated content, AI audio more impactful than AI video, and fake content purporting to come from a private setting such as a phone call is more effective than fake content from a public setting. The company adds that impersonations of lesser-known people work better than impersonations of well-known individuals such as world leaders.

We've already seen examples of faked audio being used to influence people. A 39-second robocall went out to voters in New Hampshire in January telling them not to vote in the Democratic primary election, but to "save their votes" for the November presidential election. The voice handing out this advice was AI-generated and sounded almost exactly like Joe Biden. The incident led to the FCC making AI-generated robocalls illegal and Biden calling for a ban on AI voice impersonations.

It's not just Russia looking to disrupt and influence the US elections using AI. Microsoft warned earlier this month that Chinese campaigns have continued to refine AI-generated or AI-enhanced content, producing videos, memes, and audio, among other formats, for social media posts focusing on politically divisive topics.

In February, 20 major companies, including Amazon, Google, Meta, and X, signed an agreement pledging to root out deepfake and generative AI content designed to influence or interfere with citizens' democratic right to vote.