The big picture: Tesla vehicles are no strangers to controversy, with the company's Autopilot system being a major source of negative press. However, a recent report from US regulators puts the scale of Tesla Autopilot failures into hard numbers. The hundreds of crashes and dozens of deaths were primarily the result of drivers misunderstanding what "Autopilot" really means.

A newly published report from the National Highway Traffic Safety Administration (NHTSA) links Tesla's Autopilot systems to nearly 1,000 crashes from the last few years, over two dozen of them fatal. Most were caused by inattentive drivers who may have falsely believed that the company's driver assistance systems amounted to full-blown self-driving.

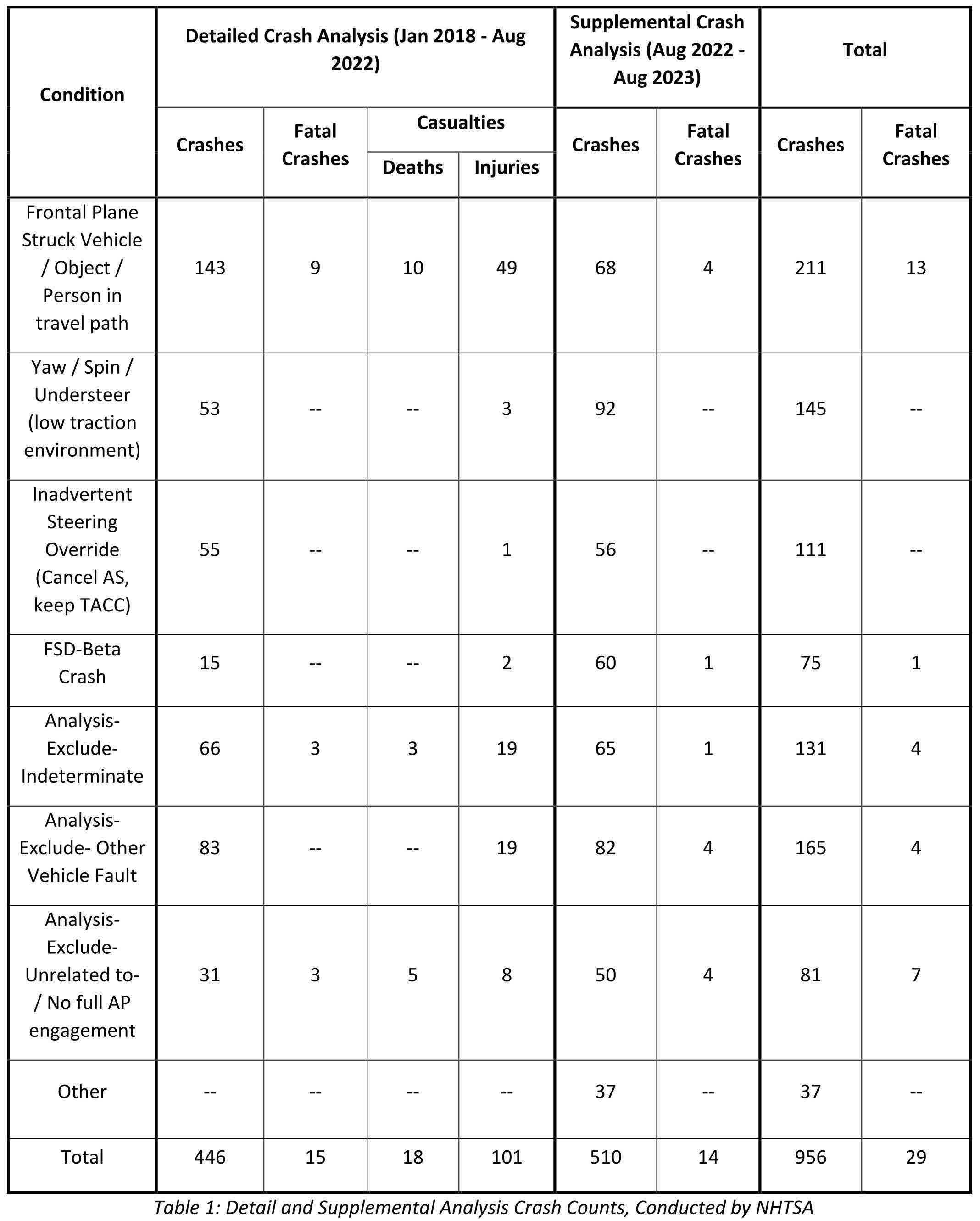

The investigation details 956 crashes between January 2018 and August 2023, resulting in well over 100 injuries and dozens of deaths. In many incidents, the crash occurred several seconds after the vehicle's Autopilot system detected an obstruction that it didn't know how to negotiate, which would have given an attentive driver ample time to avoid the accident or minimize the damage.

In one example from last year, a 2022 Model Y on Autopilot hit a minor stepping out of a school bus in North Carolina at "highway speeds." The victim suffered life-threatening injuries, and an examination revealed that an observant driver should have been able to avoid the accident.

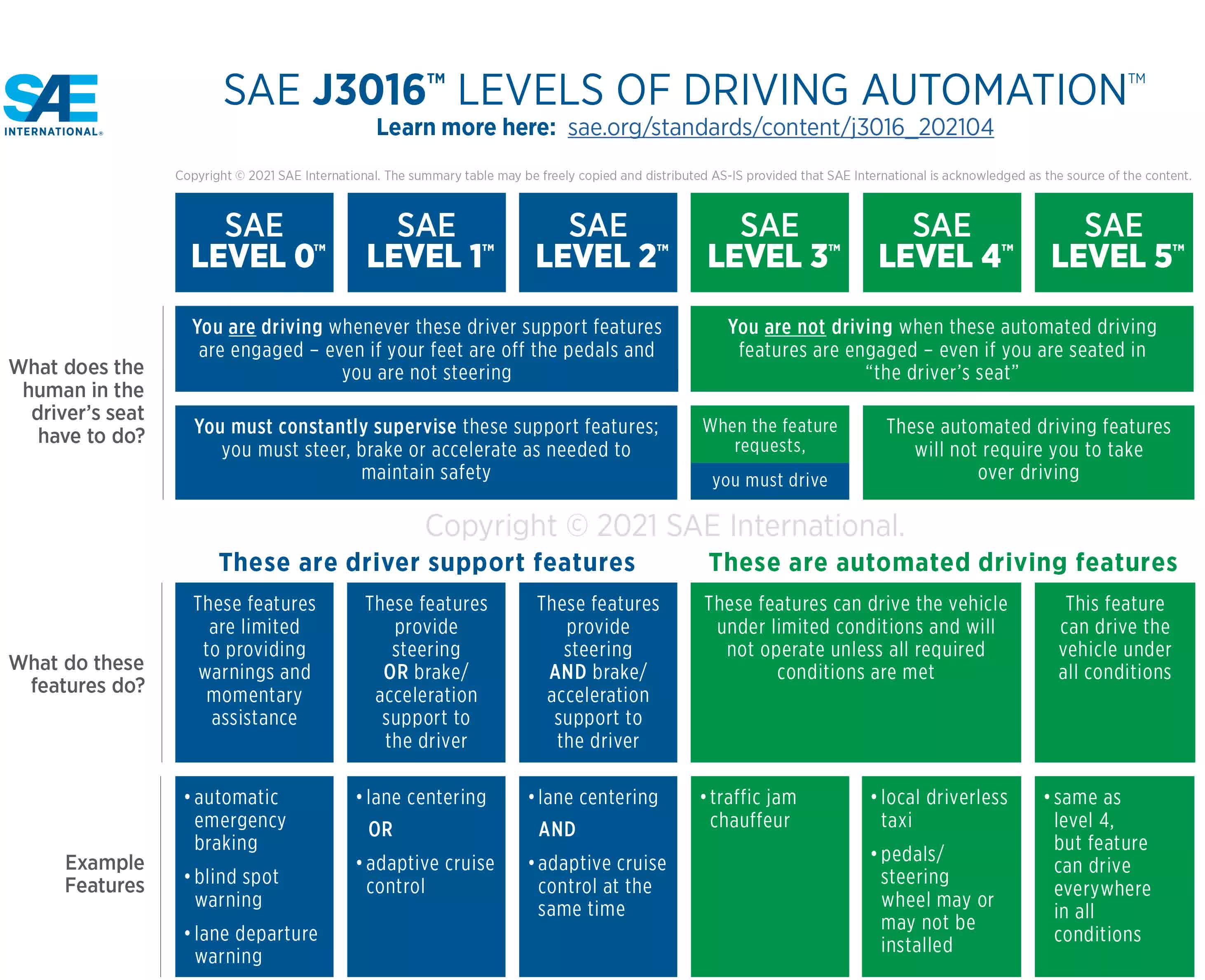

Tesla's Autopilot functionality isn't a fully autonomous driving system. Classified as a Level 2 driver assistance system, it can assist with steering, braking, and acceleration at the same time through features like lane centering and adaptive cruise control. However, it still requires the driver to keep their eyes on the road and both hands on the steering wheel.


A 2022 study revealed that many drivers mistakenly believe that existing driver assistance functions like Tesla's Autopilot make cars fully autonomous. Mercedes-Benz recently became the first company to sell Level 3 vehicles in the US, which can drive themselves in limited scenarios. Still, the automaker's self-driving system can only be used on certain California highways, during the daytime, and in clear weather.

Inclement weather and challenging road conditions were behind some of the Tesla incidents in the NHTSA report. In 53 cases, the autosteering function failed when the car lost traction. In a further 55 episodes, drivers inadvertently activated manual override by using the steering wheel and almost immediately crashed because they believed Autopilot was still engaged.

Tesla has addressed prior Autopilot flaws with over-the-air updates, but perhaps it should focus on better communicating the feature's limits to users.