Why it matters: It's no secret that AMD's Epyc server CPUs are selling like hotcakes, to the point where Intel has had to heavily discount its Xeon chips to keep existing and potential hyperscale customers from going with Team Red. That said, there's a reason organizations are increasingly weighing their options and, in some cases, choosing AMD over Intel when building out their data center infrastructure.

Recently, Netflix senior software engineer Drew Gallatin offered some valuable insights into the company's efforts to optimize the hardware and software architecture that lets it stream enormous amounts of video entertainment to over 209 million subscribers. The company had already managed to squeeze as much as 200 Gb per second out of a single server, but it wanted to take things up a notch.

The results of these efforts were presented at the EuroBSD 2021 conference. Gallatin said that Netflix was able to push content at up to 400 Gb per second using a server built around AMD's 32-core Epyc 7502P (Rome) CPU, 256 gigabytes of DDR4-3200 memory, eighteen 2-terabyte Western Digital SN720 NVMe drives, and two PCIe 4.0 x16 Nvidia Mellanox ConnectX-6 Dx network adapters, each supporting two 100 Gb connections.
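As a quick sanity check on the configuration above, the short Python snippet below totals the network line rate and raw flash capacity from the quoted part counts. It is a back-of-the-envelope sketch that assumes every port runs at its full nominal 100 Gb per second and ignores drive formatting overhead; the constant and function names are our own.

```python
# Back-of-the-envelope totals for the 400 Gb/s server described above.
# Assumption: every NIC port runs at its full nominal rate.
NICS = 2             # two ConnectX-6 Dx adapters
PORTS_PER_NIC = 2    # each adapter supports two 100 Gb connections
PORT_GBPS = 100      # nominal line rate per port, in Gb/s

DRIVES = 18          # WD SN720 NVMe drives
DRIVE_TB = 2         # raw capacity per drive, in TB


def total_line_rate_gbps() -> int:
    """Aggregate nominal network capacity in Gb/s."""
    return NICS * PORTS_PER_NIC * PORT_GBPS


def total_flash_tb() -> int:
    """Aggregate raw NVMe capacity in TB, before formatting overhead."""
    return DRIVES * DRIVE_TB


if __name__ == "__main__":
    print(f"Network line rate: {total_line_rate_gbps()} Gb/s")
    print(f"Raw flash capacity: {total_flash_tb()} TB")
```

Under these assumptions the four ports add up to exactly 400 Gb per second, so that figure is also the nominal ceiling of the network hardware in this box.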

To put the system's maximum theoretical throughput in perspective, its eight memory channels provide around 150 gigabytes per second of bandwidth, while its 128 PCIe 4.0 lanes allow for up to 250 gigabytes per second of I/O bandwidth. In networking units, that's around 1.2 Tb per second and 2 Tb per second, respectively. It's also worth noting that this is the configuration Netflix uses to serve its most popular content.
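The conversion to networking units is just a factor of eight (bits per byte) plus a shift from giga to tera. The sketch below reproduces the 1.2 and 2 Tb per second figures from the quoted byte rates and compares each with the 400 Gb per second goal; the helper name is hypothetical.

```python
# Convert byte-oriented bandwidth (GB/s) into networking units (Tb/s).
# 1 byte = 8 bits and 1 Tb = 1000 Gb, so GB/s * 8 / 1000 = Tb/s.

MEMORY_GBYTES = 150   # approx. bandwidth of eight DDR4-3200 channels, as quoted
PCIE_GBYTES = 250     # approx. bandwidth of 128 PCIe 4.0 lanes, as quoted
TARGET_TBITS = 0.4    # the 400 Gb/s serving goal, expressed in Tb/s


def gbytes_to_tbits(gbytes_per_s: float) -> float:
    """Convert gigabytes per second to terabits per second."""
    return gbytes_per_s * 8 / 1000


if __name__ == "__main__":
    for name, bw in [("memory", MEMORY_GBYTES), ("PCIe", PCIE_GBYTES)]:
        tbits = gbytes_to_tbits(bw)
        ratio = tbits / TARGET_TBITS
        print(f"{name}: {tbits:.1f} Tb/s, {ratio:.1f}x the 400 Gb/s goal")
```

On paper, memory bandwidth alone is roughly three times the 400 Gb per second goal, which suggests any practical limit comes from how often each byte has to move through memory rather than from raw capacity.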

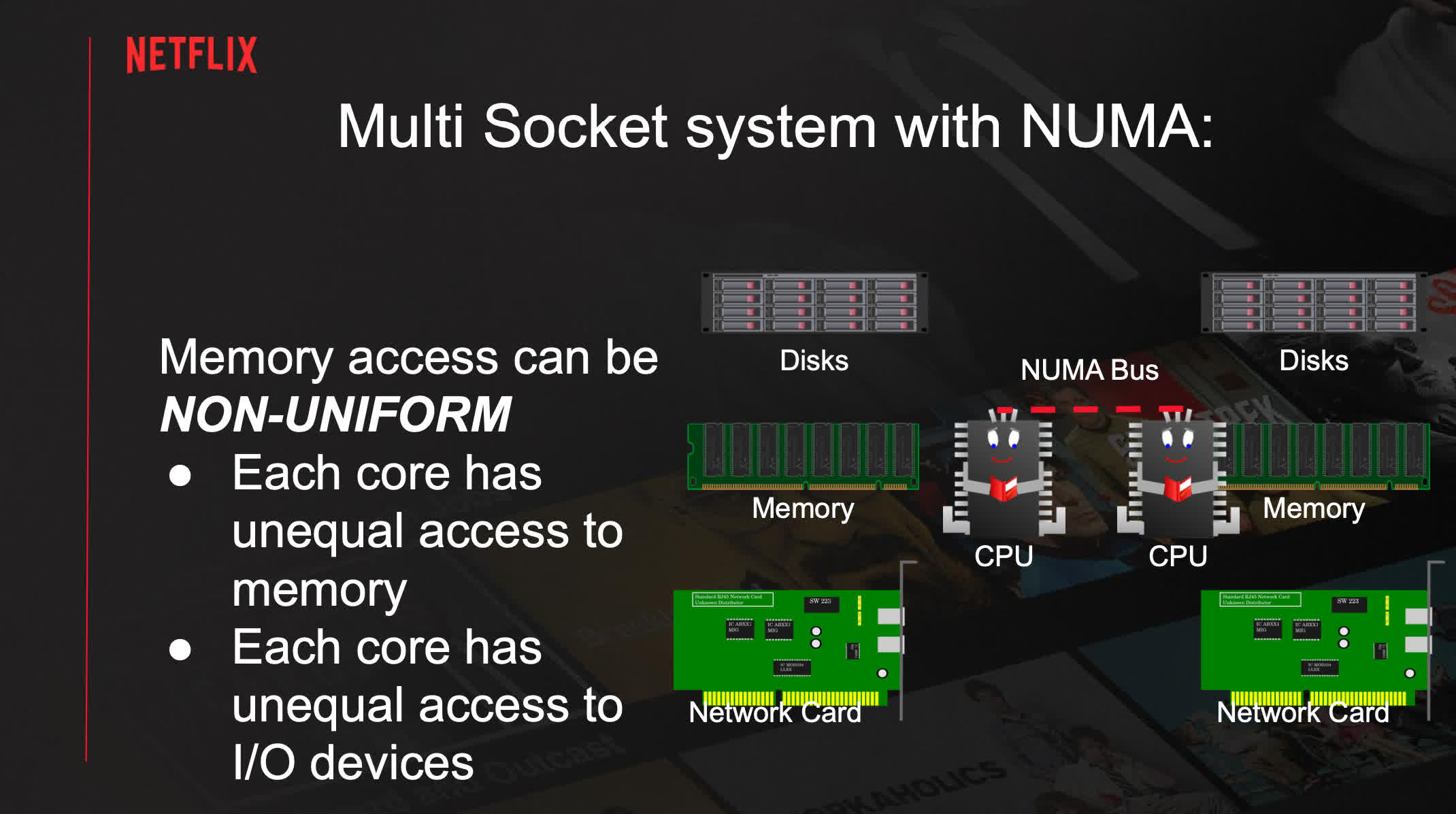

This configuration can normally serve content at up to 240 Gb per second, mainly due to memory bandwidth limitations. Netflix then tried different Non-Uniform Memory Access (NUMA) configurations: with a single NUMA node the server reached 240 Gb per second, while four NUMA nodes yielded around 280 Gb per second.
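Why would a server with roughly 1.2 Tb per second of memory bandwidth top out near 240 Gb per second? One simplified way to reason about it, which is our assumption rather than a figure from the article, is to count how many times each served byte crosses the memory bus. With encryption done in software, a byte is plausibly written to RAM by the NVMe drive, read by the CPU, written back encrypted, and read again by the NIC: four passes. The sketch below turns a pass count into an upper bound.

```python
# Simplified memory-bandwidth ceiling model (an assumption, not Netflix's model):
# every served byte crosses the memory bus `passes` times, so the usable
# serving rate is the memory bandwidth divided by the number of passes.

MEMORY_GBYTES = 150  # approx. memory bandwidth quoted for the Epyc server, in GB/s


def serving_ceiling_gbps(memory_gbytes: float, passes: int) -> float:
    """Upper bound on network output in Gb/s for a given number of memory passes."""
    if passes <= 0:
        raise ValueError("passes must be positive")
    return memory_gbytes * 8 / passes


if __name__ == "__main__":
    # Hypothetical pass counts: 4 with CPU-side encryption, 2 if the NIC encrypts.
    for label, passes in [("CPU encryption", 4), ("NIC encryption", 2)]:
        print(f"{label}: at most {serving_ceiling_gbps(MEMORY_GBYTES, passes):.0f} Gb/s")
```

The model is optimistic because it ignores all other memory traffic, so the measured 240 Gb per second sits below its 300 Gb per second bound. It does illustrate why cutting the number of passes could raise the ceiling well beyond 400 Gb per second.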

However, this approach brings a host of problems of its own, such as higher latency. Ideally, as much bulk data as possible should be kept off the Infinity Fabric that links the NUMA nodes, to prevent congestion and CPU stalls caused by bulk transfers competing with normal memory accesses.
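To see why bulk data ends up on the Infinity Fabric at all, consider a deliberately naive model (our assumption, not a measurement): if buffers and the CPU cores touching them are spread uniformly at random across N NUMA nodes, a given access is remote with probability (N - 1)/N. The Python sketch below computes that fraction and double-checks it by enumerating every placement.

```python
from fractions import Fraction
from itertools import product


def remote_fraction(nodes: int) -> Fraction:
    """Probability that a uniformly placed buffer lives on a different node
    than the uniformly chosen CPU accessing it: (N - 1) / N."""
    return Fraction(nodes - 1, nodes)


def remote_fraction_enumerated(nodes: int) -> Fraction:
    """Same quantity, computed by enumerating every (cpu_node, memory_node) pair."""
    pairs = list(product(range(nodes), repeat=2))
    remote = sum(1 for cpu, mem in pairs if cpu != mem)
    return Fraction(remote, len(pairs))


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(f"{n} node(s): {remote_fraction(n)} of accesses cross the fabric")
```

With four nodes, three out of four accesses would cross the fabric under this naive placement, which is the kind of traffic that keeping bulk data local is meant to avoid.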

The company also looked at disk siloing and network siloing. These essentially mean trying to do all the work on the NUMA node where the content is stored (disk siloing) or on the NUMA node chosen by the LACP partner (network siloing). However, this makes it even harder to balance load across the whole system and leaves the Infinity Fabric underutilized.
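The two siloing strategies can be compared with a toy model, again a simplification of our own: content is read from the NVMe drive's node, processed on a chosen "worker" node, and sent out through the NIC port on the node the LACP partner picked. Each hop between two different nodes counts as one fabric crossing. The function and policy names are hypothetical.

```python
from itertools import product

NODES = 4  # NUMA nodes in the four-node configuration


def crossings(disk_node: int, worker_node: int, nic_node: int) -> int:
    """Fabric crossings for one request: disk -> worker memory, then worker -> NIC."""
    return int(disk_node != worker_node) + int(worker_node != nic_node)


def average_crossings(policy: str) -> float:
    """Average crossings over every disk/NIC/worker placement for a policy."""
    total = 0
    count = 0
    for disk, nic, random_worker in product(range(NODES), repeat=3):
        if policy == "disk_silo":
            worker = disk            # work where the content is stored
        elif policy == "network_silo":
            worker = nic             # work where the LACP partner sends traffic
        else:
            worker = random_worker   # no siloing: any node may do the work
        total += crossings(disk, worker, nic)
        count += 1
    return total / count


if __name__ == "__main__":
    for policy in ("none", "disk_silo", "network_silo"):
        print(f"{policy}: {average_crossings(policy):.2f} crossings per request")
```

In this model either silo halves the expected crossings, from 1.5 to 0.75, but neither reaches zero unless the content's location and the LACP partner's choice happen to line up, which hints at why balancing the whole system becomes harder.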

Gallatin explained that getting around these limitations was possible through software optimizations. By offloading TLS encryption to the two Mellanox adapters, the company increased total throughput to 380 Gb per second (up to 400 with additional tweaks), or 190 Gb per second per network interface card (NIC). With the CPU no longer performing any encryption, overall CPU utilization dropped to 50 percent with four NUMA nodes and 60 percent without NUMA.
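The per-NIC figure is simple division, but it is worth setting against each adapter's nominal capacity. The sketch below assumes traffic is split evenly across the two adapters and that each adapter's two 100 Gb connections make up its entire line rate; the names are our own.

```python
# Per-NIC share of the offloaded throughput figures quoted above.
# Assumptions: traffic splits evenly across adapters, and each adapter's
# nominal line rate is its two 100 Gb connections combined.
NICS = 2
NIC_LINE_RATE_GBPS = 2 * 100


def per_nic_gbps(total_gbps: float, nics: int = NICS) -> float:
    """Throughput per NIC, assuming an even split across adapters."""
    return total_gbps / nics


def line_rate_fraction(total_gbps: float) -> float:
    """Fraction of each NIC's nominal line rate that the total represents."""
    return per_nic_gbps(total_gbps) / NIC_LINE_RATE_GBPS


if __name__ == "__main__":
    for total in (380, 400):
        print(f"{total} Gb/s total -> {per_nic_gbps(total):.0f} Gb/s per NIC, "
              f"{line_rate_fraction(total):.0%} of line rate")
```

Under these assumptions, 190 Gb per second per NIC is about 95 percent of each adapter's nominal line rate, so the tweaked 400 Gb per second result effectively saturates the network hardware.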

Netflix also explored configurations based on other platforms, including one with Intel's Xeon Platinum 8352V (Ice Lake) CPU and another with Ampere's Altra Q80-30, a behemoth with 80 Arm Neoverse N1 cores running at up to 3 GHz. The Xeon testbed reached a modest 230 Gb per second without TLS offloading, while the Altra system achieved 320 Gb per second.

Not content with the 400 Gb per second result, the company is already building a new system that should be able to handle 800 Gb per second. However, some of the necessary components didn't arrive in time for testing, so we'll hear more about it next year.