4 Common Causes of False Positives in Software Security Testing

In a perfect world, your software testing strategy would surface all of the security risks that exist inside your environment, and nothing more.

But we don't live in a perfect world. Sometimes, the security issues that software testing tools flag turn out to be false positives. That means that they're not actually problems, even though the software security testing process identified them as such. False positives create distractions that make it harder for security teams to detect and address actual security risks.

Why do false positives occur in software testing, and what can teams do about them? This article answers those questions by explaining four common causes of false positives and how to mitigate them.

What Are False Positives in Software Security Testing?

In software testing, a false positive is a test result that says a problem exists, when in reality there is no issue.

For example, imagine that you scan a container image for security vulnerabilities. The scanner identifies a vulnerability associated with a dependency that the scanner believes your container image requires. In actuality, however, the image doesn't include the dependency; the scanner just thinks it does because it misread the dependency data. As a result, no vulnerability exists, although your scanner says one does.

False Positives vs. False Negatives

It's worth noting that you may also run into false negatives. A false negative is a security problem that exists but that your security testing tools do not detect. False negatives are arguably even worse than false positives, because security issues that you overlook could be exploited by attackers. It's better to waste time addressing a false positive than it is to suffer a breach that stems from a false negative.

Still, false positives can significantly detract from an organization's ability to respond to security issues quickly and effectively. False positives are problematic because they add to the "noise" that engineers have to sort through when responding to security problems. They increase the total number of security alerts that teams have to manage and make it harder to determine which risks to prioritize. They cause engineers to waste time investigating non-existent security problems, which leaves them with less time to address actual security concerns.

The bottom line is that the more false positives you have, the more inefficient your security operations are likely to be.

Causes of Security False Positives

Now that we know what false positives are and why they're bad for security, let's look at what causes false positives and how to avoid them.

- Outdated Vulnerability Data

Testing an application for vulnerabilities typically involves using testing tools that access a vulnerability database, such as the National Vulnerability Database (NVD). The testing tools then determine whether any of the vulnerabilities identified in the database exist within the application.

Vulnerability databases are a great resource, because they centralize data about known vulnerabilities. However, the databases aren't always up to date, and outdated vulnerability data can lead to false positives.

For instance, imagine your security tests flag a vulnerability that had no known patch when it was first recorded in a vulnerability database. As a result, the database entry says that all versions of the affected software are at risk.

Now, further imagine that you manually found a patch for the vulnerability and applied it to your application prior to scanning. You've therefore closed the vulnerability. But if the vulnerability database hasn't yet been updated with the patch information, your testing tools will flag your application as vulnerable, because they don't yet know about the fix.
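The scenario above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular scanner works: the `Advisory` record, the `is_flagged` function, the package name `libexample`, and the identifier `EXAMPLE-0001` are all hypothetical, and versions are assumed to be simple dotted numbers.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '2.4.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Advisory:
    """Hypothetical database entry for one vulnerability."""
    vuln_id: str
    package: str
    fixed_in: Optional[str]  # None: the database lists no fixed version


def is_flagged(advisory: Advisory, package: str, installed: str) -> bool:
    """Return True if the scanner would report this package as vulnerable."""
    if advisory.package != package:
        return False
    if advisory.fixed_in is None:
        # With no fix on record, every version looks vulnerable.
        return True
    return parse_version(installed) < parse_version(advisory.fixed_in)


# The same vulnerability, as recorded before and after the database update.
stale_entry = Advisory("EXAMPLE-0001", "libexample", fixed_in=None)
fresh_entry = Advisory("EXAMPLE-0001", "libexample", fixed_in="2.4.1")

# The application already runs the patched version 2.4.1.
stale_result = is_flagged(stale_entry, "libexample", "2.4.1")
fresh_result = is_flagged(fresh_entry, "libexample", "2.4.1")

print("stale database flags the app:", stale_result)
print("fresh database flags the app:", fresh_result)
```

With the stale entry, the patched application is still reported as vulnerable; once the entry records the fixed version, the same scan comes back clean.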

- Misinterpretation of Configuration Data

Software testing tools usually interpret the configuration data associated with an application to assess risks. For example, they'll look at dependencies that are specified by your application in order to determine whether any of the dependencies are known to be vulnerable.

This approach works well in most cases, but it can cause false positives in situations where the software testing tools don't accurately or fully interpret configuration data.

For example, Debian packages (which are used to install applications on Debian, Ubuntu, and certain other Linux-based operating systems) can specify "recommended" dependencies as well as required dependencies. Some testing tools might not be sophisticated enough to distinguish between the two types. They generate false positives when they flag vulnerable recommended dependencies that you never actually install.
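To make the difference between required and recommended dependencies concrete, here is a rough Python sketch. It reads the `Depends` and `Recommends` fields of a Debian-style control stanza and compares a naive check with one that only considers required packages. The package `example-app`, its dependency `example-plugin`, and the `known_vulnerable` set are hypothetical, and the parsing is deliberately simplified: it keeps only the first alternative in an `a | b` clause and ignores version constraints.

```python
import re
from typing import Dict, Set

# Hypothetical control stanza for an imaginary package.
CONTROL_STANZA = """\
Package: example-app
Version: 1.0.0
Depends: libc6 (>= 2.34), libssl3
Recommends: ca-certificates, example-plugin
"""


def parse_control(stanza: str) -> Dict[str, str]:
    """Parse 'Field: value' lines, joining indented continuation lines."""
    fields: Dict[str, str] = {}
    current = None
    for line in stanza.splitlines():
        if not line.strip():
            continue
        if line[0] in " \t" and current is not None:
            fields[current] += " " + line.strip()
        else:
            key, _, value = line.partition(":")
            current = key.strip()
            fields[current] = value.strip()
    return fields


def package_names(field_value: str) -> Set[str]:
    """Extract bare package names from a dependency field."""
    names: Set[str] = set()
    for clause in field_value.split(","):
        first_alternative = clause.split("|")[0].strip()
        match = re.match(r"[a-z0-9][a-z0-9+.-]*", first_alternative)
        if match:
            names.add(match.group(0))
    return names


fields = parse_control(CONTROL_STANZA)
required = package_names(fields.get("Depends", ""))
recommended = package_names(fields.get("Recommends", ""))

# Hypothetical list of packages with known vulnerabilities.
known_vulnerable = {"example-plugin"}

# A naive tool treats every listed package as installed.
naive_findings = sorted((required | recommended) & known_vulnerable)
# A more careful tool only reports packages that are actually required.
careful_findings = sorted(required & known_vulnerable)

print("naive:", naive_findings)
print("careful:", careful_findings)
```

The naive check reports `example-plugin` even though it is only recommended. Whether that report is a false positive depends on how the package was installed: `apt` installs recommended packages unless told otherwise (for example with `--no-install-recommends`), so a real tool would ideally check what is present on the system rather than rely on the field name alone.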

- Inaccurate or Irrelevant Configuration Data

In other cases, your software testing tools might be capable of interpreting configuration data accurately, but if the configuration data that they scan is inaccurate, outdated, or irrelevant to production, the result can still be a false positive.

For example, consider an application that a developer builds and tests on a local machine. The local environment is different from the server environment where the application will run in production. This means that security tests that are run on the local machine may flag risks that won't actually apply. The development team can ignore these risks, but only after confirming that they don't exist in the production environment.
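One way to check whether a locally reported risk applies in production is to compare each finding against a list of what production actually runs. The Python sketch below assumes you can export such a list as a mapping of package names to versions. The `Finding` type, the package names, and the `EXAMPLE-` identifiers are hypothetical.

```python
from typing import Dict, List, NamedTuple


class Finding(NamedTuple):
    """One scanner result from the developer's local machine."""
    vuln_id: str
    package: str
    version: str


def applies_to_production(finding: Finding, production: Dict[str, str]) -> bool:
    """A finding applies only if production runs the same package version."""
    return production.get(finding.package) == finding.version


# Hypothetical findings from a scan of the local development environment.
local_findings: List[Finding] = [
    Finding("EXAMPLE-0101", "debug-toolbar", "3.2.0"),  # dev-only tool
    Finding("EXAMPLE-0102", "webframework", "4.1.0"),   # also in production
    Finding("EXAMPLE-0103", "imagelib", "9.0.0"),       # production is newer
]

# Hypothetical inventory exported from the production image.
production_packages: Dict[str, str] = {
    "webframework": "4.1.0",
    "imagelib": "9.1.2",
}

relevant = [f for f in local_findings
            if applies_to_production(f, production_packages)]
dismissed = [f for f in local_findings if f not in relevant]

print("needs attention:", [f.vuln_id for f in relevant])
print("local only:", [f.vuln_id for f in dismissed])
```

Exact version matching is a simplification. It would miss a vulnerable version that differs between environments but falls within the same affected range, so treat the "local only" list as candidates for dismissal rather than a final verdict.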

- Low Alerting Thresholds

Some software security testing tools allow you to configure which types and levels of alerts they should generate. If you configure them to be too sensitive, you may end up with false positives, because they'll flag risks that are either non-existent or so minor as to be irrelevant.

Of course, you don't want to end up with false negatives because you set your alerting threshold too high. The goal is a healthy middle ground that allows your tests to detect all relevant risks without generating an excessive number of false positives.
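The trade-off can be illustrated with a small Python sketch that applies different minimum severity scores to the same set of alerts. It assumes each alert carries a CVSS-style score from 0 to 10 and that a later manual triage has marked which alerts were real. The `Alert` type, the `confirmed` label, and the sample alerts are hypothetical.

```python
from typing import Dict, List, NamedTuple


class Alert(NamedTuple):
    title: str
    cvss_score: float
    confirmed: bool  # hypothetical triage label: True if the risk was real


def summarize(alerts: List[Alert], min_score: float) -> Dict[str, int]:
    """Count reported alerts, noise, and missed risks for one threshold."""
    reported = [a for a in alerts if a.cvss_score >= min_score]
    noise = sum(1 for a in reported if not a.confirmed)
    missed = sum(1 for a in alerts
                 if a.confirmed and a.cvss_score < min_score)
    return {"reported": len(reported), "noise": noise, "missed": missed}


ALERTS = [
    Alert("Verbose server banner", 2.1, confirmed=False),
    Alert("Missing optional header", 3.5, confirmed=False),
    Alert("Outdated TLS configuration", 5.3, confirmed=True),
    Alert("SQL injection in login form", 8.8, confirmed=True),
    Alert("Remote code execution in parser", 9.8, confirmed=True),
]

for threshold in (0.0, 4.0, 9.0):
    print(threshold, summarize(ALERTS, threshold))
```

In this made-up data set, a threshold of 0.0 reports everything, including two alerts that triage dismissed. A threshold of 9.0 removes the noise but misses two confirmed risks. A threshold of 4.0 is the middle ground. Real alert sets rarely separate this cleanly, so the right threshold is something to revisit as triage data accumulates.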

Conclusion: Conquering False Positives

It's worth keeping in mind that you'll almost always face some number of false positives if you test software with any regularity.

But if your software tests generate so many false positives that they slow down your team and distract them from addressing the real risks, you should take steps to reduce false positives.

Ensure that your vulnerability data is up to date and that your application configuration data accurately reflects the conditions that will exist in production. In addition, make sure to configure your security software testing tools properly so that they can catch all of the relevant risks while generating as few false positives as possible.

Mayhem: A Security Testing Solution With Zero False Positives

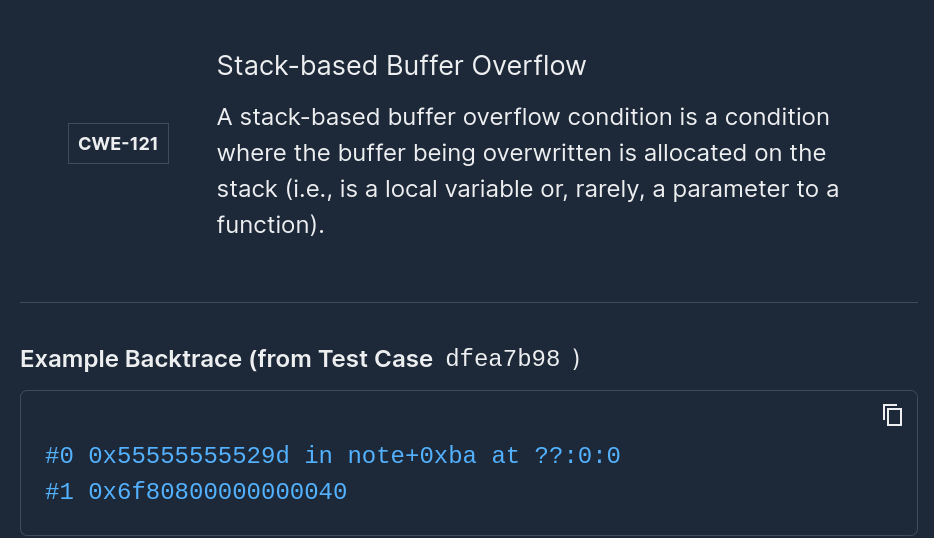

Tired of false positives and spending endless hours on reproductions and regression tests? With Mayhem, our developer-first security testing solution, you can finally say goodbye to false positives and hello to confirmed risks that require immediate attention. Our automated testing generates thousands of tests to identify defects in your apps and APIs, ensuring that all reported vulnerabilities are exploitable and confirmed risks.

Mayhem delivers triaged results with reproductions, backtraces, and regression tests, streamlining issue diagnosis and fixes. With Mayhem, you can rest easy knowing your software is secure and your team's time is being spent on what really matters—creating great software.

Author Bio

Chris Tozzi has worked as a Linux systems administrator and freelance writer with more than ten years of experience covering the tech industry, especially open source, DevOps, cloud native and security. He also teaches courses on the history and culture of technology at a major university in upstate New York.

